Notes on Statistical Mechanics

Epiphany Term

1 Statistical Mechanics

In Michaelmas term, you were introduced to Statistical Mechanics and made the connection with Thermodynamics in the Microcanonical Ensemble. In this section we will carry on by introducing the:

- Canonical Ensemble with fixed temperature $T$, volume $V$ and particle number $N$

- Grand Canonical Ensemble with fixed temperature $T$, volume $V$ and chemical potential $\mu$

Considering these will not only allow us to study systems which are closer to the real world, but will also allow us to make the connection between Statistical Mechanics and Thermodynamics even more robust. The Canonical and Grand Canonical Ensembles will naturally bring us to the discussion of the Partition Function, an object of fundamental importance, linking thermodynamics with the microscopic properties of physical systems.

1.1 Canonical Ensemble

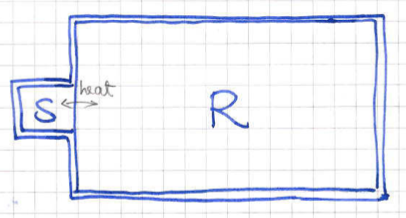

The canonical ensemble concerns systems which are held at fixed temperature $T$, volume $V$ and particle number $N$. This is precisely what we need if we want to study a system inside a box with impenetrable walls, only allowing the exchange of heat with its environment. To model this more precisely, imagine a system $\mathcal S$ of $N$ particles inside a definite volume $V$. We will now bring this system in thermal contact with a much larger system $\mathcal R$ which will play the role of an energy reservoir.

In this setup, at any given time we can define the energy $E$ of our system $\mathcal S$ and the energy $E_R$ of the reservoir $\mathcal R$. (Strictly speaking this is not entirely correct, as the two systems are assumed to be interacting. However, we assume that the interaction between them is infinitesimally small and that we wait for a very long time for them to reach equilibrium.) Since the two systems interact, their energies are not separately conserved. As a whole, however, the combined system can be thought of as an isolated system and therefore the total energy

$$ E_{\text{tot}} = E + E_R \tag{1} $$

is conserved and fixed. Moreover, since the reservoir is large, small changes in $E_R$ will not alter its temperature, due to its large heat capacity $C_R$. In thermodynamic equilibrium the temperature of $\mathcal S$ will be equal to that of the reservoir,

$$ T_{\mathcal S} = T_{\mathcal R} \equiv T \tag{2} $$

1.1.1 The Quantum Canonical Ensemble

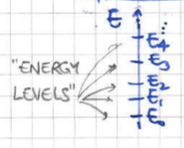

For simplicity, we will consider the case where both systems are quantum mechanical and their microstates form a discrete set (they are quantised) as in figure 2. In general, for each energy level $E_i$ we can have $\Omega(E_i)$ different microstates (this is essentially the degeneracy of the Hamiltonian operator). This suggests that we can denote the microstates by a pair $(i, a)$ with $a = 1, \dots, \Omega(E_i)$, and for each one the corresponding energy would be $E_i$. To keep our notation simple, we will instead label microstates by a single index $n$ and write their energy as $E_n$, keeping in mind that several values of $n$ may share the same energy.

Since $E$ is not fixed, the natural question to ask is: “What is the probability for the system to be in a given microstate $n$ of energy $E_n$?”. In order to answer this, we will exploit the fact that the total system $\mathcal S + \mathcal R$ is in the microcanonical ensemble. According to Boltzmann’s principle of equal a priori probabilities for $\mathcal S + \mathcal R$, all states of $\mathcal S + \mathcal R$ with total energy $E_{\text{tot}}$ are equally probable. The task is then to count how many microstates of the total system are compatible with having total energy $E_{\text{tot}}$ for any given microstate $n$ of $\mathcal S$.

We will call $\Omega_R(E_R)$ the number of microstates of the reservoir corresponding to energy $E_R$. Correspondingly, we will call $\Omega(E)$ the number of microstates of $\mathcal S$ corresponding to energy $E$. If we fix a particular state $n$ of $\mathcal S$, we then have a total of $\Omega_R(E_{\text{tot}} - E_n)$ compatible microstates for the total system with fixed energy $E_{\text{tot}}$. From the microcanonical ensemble we now recall the Boltzmann entropy

$$ S_R(E_R) = k_B \ln \Omega_R(E_R) \tag{3} $$

for the reservoir $\mathcal R$. Moreover, using the fact that $E_n \ll E_{\text{tot}}$, we can perform a Taylor expansion to approximate,

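$$ S_R(E_{\text{tot}} - E_n) \approx S_R(E_{\text{tot}}) - E_n \frac{\partial S_R}{\partial E_R}\bigg|_{E_R = E_{\text{tot}}} = S_R(E_{\text{tot}}) - \frac{E_n}{T} \tag{4} $$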

after using the definition of temperature

$$ \frac{1}{T} = \frac{\partial S_R}{\partial E_R} \tag{5} $$

We can now estimate the number of states we are after according to,

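$$ \Omega_R(E_{\text{tot}} - E_n) = e^{S_R(E_{\text{tot}} - E_n)/k_B} \approx e^{S_R(E_{\text{tot}})/k_B}\, e^{-E_n/k_B T} = \Omega_R(E_{\text{tot}})\, e^{-\beta E_n} \tag{6} $$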

where we defined $\beta = 1/k_B T$. At this point we note that the number $\Omega_R(E_{\text{tot}})$ depends only on the properties of the reservoir and is independent of the microstate we are interested in. In other words, it is just a constant independent of $n$.

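Boltzmann’s principle of equal a priori probabilities (applied to $\mathcal S + \mathcal R$) implies that $p(n) \propto \Omega_R(E_{\text{tot}} - E_n)$, which together with (6) gives the Boltzmann distribution,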

$$ p(n) = \frac{e^{-\beta E_n}}{Z} \tag{7} $$

where we have defined the temperature dependent normalisation constant

$$ Z = \sum_n e^{-\beta E_n} \tag{8} $$

also known as the Partition Function, with the sum running over all microstates of $\mathcal S$. Notice that all dependence on the reservoir has dropped out, or more precisely it is encoded in the temperature $T$, which is also the temperature of $\mathcal S$.

Some important remarks about the partition function are:

- The partition function is defined as a simple normalisation factor in the Boltzmann distribution. However, it will turn out to be the most fundamental object in Statistical Mechanics.

- The partition function is a function of $T$ (or equivalently $\beta$), $V$ and $N$.

- The Boltzmann weight $e^{-\beta E_n}$ favors microstates whose energy is small compared to the thermal energy, $E_n \lesssim k_B T$.

Finally, we would like to comment on the case of combining two independent systems $\mathcal S_1$ and $\mathcal S_2$ held at temperature $T$, with Hamiltonians $H_1$ and $H_2$. The total Hamiltonian is $H = H_1 + H_2$, with no interaction term between the two systems. In this case, the total energy is $E_{n_1 n_2} = E^{(1)}_{n_1} + E^{(2)}_{n_2}$ and the partition function of the combined system is given by summing over the microstates $n_1$ and $n_2$,

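$$
\begin{aligned}
Z &= \sum_{n_1, n_2} e^{-\beta\left(E^{(1)}_{n_1} + E^{(2)}_{n_2}\right)} \\
&= \Big(\sum_{n_1} e^{-\beta E^{(1)}_{n_1}}\Big)\Big(\sum_{n_2} e^{-\beta E^{(2)}_{n_2}}\Big) = Z_1 Z_2
\end{aligned} \tag{9}
$$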

Therefore, for combinations of non-interacting systems the partition function of the total system is equal to the product of the partition functions of its sub-systems.
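The factorisation (9) can be checked numerically in a few lines. This is a minimal sketch, assuming two made-up discrete spectra; the helper `partition_function`, the random energies and the value of $\beta$ are illustrative choices and not part of the notes.

```python
import math
import random

def partition_function(energies, beta):
    """Canonical partition function Z = sum_n exp(-beta * E_n), as in eq. (8)."""
    return sum(math.exp(-beta * e) for e in energies)

# Hypothetical spectra of two non-interacting systems S_1 and S_2 (arbitrary energy units).
random.seed(0)
spectrum_1 = [random.uniform(0.0, 3.0) for _ in range(5)]
spectrum_2 = [random.uniform(0.0, 3.0) for _ in range(7)]
beta = 0.8

# Microstates of the combined system are pairs (n_1, n_2) with energy E_{n_1} + E_{n_2}.
combined = [e1 + e2 for e1 in spectrum_1 for e2 in spectrum_2]

z_total = partition_function(combined, beta)
z_product = partition_function(spectrum_1, beta) * partition_function(spectrum_2, beta)
print(z_total, z_product)  # equal up to rounding, eq. (9)
```

Summing over all $5 \times 7$ pairs gives the same number as multiplying the two separate sums, which is exactly the statement that $Z = Z_1 Z_2$ when there is no interaction term.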

1.1.2 The Two State System

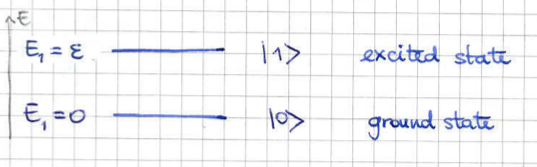

As an example, we will consider a quantum system which only has two possible microstates, $n = 0$ (the ground state) and $n = 1$ (the excited state), with corresponding energies $E_0 = 0$ and $E_1 = \epsilon > 0$.

For this single-particle system the partition function (8) is

$$ Z_1 = 1 + e^{-\beta\epsilon} \tag{10} $$

The probabilities (7) of being in either state are

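$$ p(0) = \frac{1}{1 + e^{-\beta\epsilon}}, \qquad p(1) = \frac{e^{-\beta\epsilon}}{1 + e^{-\beta\epsilon}} \tag{11} $$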

This allows us to compute the expectation value for the energy according to

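$$ \langle E \rangle = 0 \cdot p(0) + \epsilon\, p(1) = \frac{\epsilon\, e^{-\beta\epsilon}}{1 + e^{-\beta\epsilon}} = \frac{\epsilon}{e^{\beta\epsilon} + 1} \tag{12} $$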

If we now consider $N$ distinguishable copies of the two state system (the significance of the distinguishability or indistinguishability of particles will be discussed in detail later in the course), the partition function is

$$ Z_N = Z_1^N = \left(1 + e^{-\beta\epsilon}\right)^N \tag{13} $$

For the combined system, the possible microstates are labelled by an $N$-dimensional array $\mathbf n = (n_1, \dots, n_N)$ with $n_i \in \{0, 1\}$ for $i = 1, \dots, N$. The corresponding energy for $\mathbf n$ will then be

$$ E_{\mathbf n} = \epsilon \sum_{i=1}^N n_i = \epsilon M \tag{14} $$

where $M$ is the number of $n_i$'s which are equal to one. The corresponding probability is given by

$$ p(\mathbf n) = \frac{e^{-\beta\epsilon M}}{\left(1 + e^{-\beta\epsilon}\right)^N} \tag{15} $$

To compute the expectation value of the energy we have to sum over all possible vectors $\mathbf n$, noting that $\binom{N}{M}$ of them have energy $\epsilon M$,

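$$
\begin{aligned}
\langle E \rangle &= \sum_{\mathbf n} E_{\mathbf n}\, p(\mathbf n) \\
&= \frac{1}{Z_N} \sum_{M=0}^{N} \binom{N}{M} \epsilon M\, e^{-\beta\epsilon M} \\
&= -\frac{1}{Z_N} \frac{\partial}{\partial\beta} \sum_{M=0}^{N} \binom{N}{M} e^{-\beta\epsilon M} \\
&= -\frac{1}{Z_N} \frac{\partial Z_N}{\partial\beta} \\
&= -\frac{\partial \ln Z_N}{\partial\beta} \\
&= \frac{N\epsilon}{e^{\beta\epsilon} + 1}
\end{aligned} \tag{16}
$$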

One can now make the following remarks:

- The fact that $\langle E \rangle$ is exactly $N$ times the single-system result (12), even for a small number of particles, is related to the fact that we are dealing with a non-interacting system and that energy is an extensive quantity.

- In the fifth line of the above derivation we see that the expectation value is related to the derivative of the logarithm of the partition function. This is not a coincidence particular to the case under consideration but a general statement which we will prove later.

1.1.3 The Classical Canonical Ensemble (Distinguishable Particles)

As we saw in the quantum case of section 1.1.1, the first step is to count how many microstates of the reservoir are compatible with the total energy condition

$$ H_R(q_R, p_R) + H(q, p) = E_{\text{tot}} \tag{17} $$

given a specific microstate $(q, p)$ of $\mathcal S$. Therefore, the basic logic of counting states in the classical version of the canonical ensemble is identical to the quantum one. As you have already seen in the microcanonical ensemble, the number of classical states at energy $E$ can be found by computing the area of the phase space hypersurface

$$ H(q, p) = E \tag{18} $$

The number of states then is given by

$$ \Omega(E) = \frac{1}{h^f} \int_{E \le H(q,p) \le E + \delta E} d^f q\, d^f p \tag{19} $$

where $\delta E$ is a small energy window around the hypersurface and $h$ is the phase space area per classical state, with dimensions of $[q][p]$, i.e. of action. This is a rather awkward step, which we will justify later in the term when we derive the classical partition function from the quantum one. There we will show that the mysterious constant $h$ is nothing but Planck’s constant. The number $f$ is the number of degrees of freedom of our physical system. Another important point is that formulae (18) and (19) assume that the particles are distinguishable. This is perfectly fine with the classical laws of physics, as we can attach labels to each particle and keep track of them during their whole time evolution. However, we will see in a later section that this is going to be problematic in the context of statistical mechanics.

After these remarks, we can follow all the steps of the quantum case to show that the probability density for a classical microstate of $\mathcal S$, labelled by position coordinates $q = (q_1, \dots, q_f)$ and momentum coordinates $p = (p_1, \dots, p_f)$, to occur is,

$$ \rho(q, p) = \frac{1}{h^f} \frac{e^{-\beta H(q,p)}}{Z} \tag{20} $$

Once again, we have discovered the Boltzmann distribution. The dimensionless partition function in the classical case is

$$ Z = \frac{1}{h^f} \int d^f q\, d^f p\; e^{-\beta H(q,p)} \tag{21} $$

Note that when we write the above formula, we are summing over all inequivalent particle configurations. The above is correct as long as the particles are distinguishable.

The Boltzmann distribution (20) can be used to compute the probability for the system to be in a microstate within a region $D$ of phase space. In our conventions we have

$$ P(D) = \int_D d^f q\, d^f p\; \rho(q, p) \tag{22} $$
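To see (20)-(22) at work, here is a minimal numerical sketch for a hypothetical example not treated in the notes: a single particle in a 1D harmonic potential, $H = p^2/2m + m\omega^2 q^2/2$, so $f = 1$. It assumes units with $h = 1$ and approximates the phase space integral by a Riemann sum on a grid; the parameter values are arbitrary.

```python
import numpy as np

# Hypothetical example: 1D harmonic oscillator, units with h = 1, so f = 1.
m, omega, beta, h = 1.0, 2.0, 0.5, 1.0

# Even numbers of points keep q = 0 off the grid, so {q > 0} is exactly half of it.
q = np.linspace(-8.0, 8.0, 800)
p = np.linspace(-16.0, 16.0, 1600)
dq, dp = q[1] - q[0], p[1] - p[0]
Q, P = np.meshgrid(q, p, indexing="ij")

H = P**2 / (2 * m) + 0.5 * m * omega**2 * Q**2
boltzmann = np.exp(-beta * H)

# Eq. (21): Z = (1/h) * integral dq dp exp(-beta H), approximated by a Riemann sum.
Z = boltzmann.sum() * dq * dp / h
Z_exact = 2 * np.pi / (beta * h * omega)   # the two Gaussian integrals done by hand

# Eq. (20) for the density, then eq. (22) for the region D = {q > 0}.
rho = boltzmann / (h * Z)
prob_q_positive = rho[Q > 0].sum() * dq * dp
print(Z, Z_exact, prob_q_positive)
```

The grid is wide enough that the Boltzmann weight is negligible at its edges, so the sum reproduces $Z = 2\pi/(\beta h \omega)$, and by symmetry the probability of the region $q > 0$ comes out as one half.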

1.1.4 Energy And Fluctuations

Given our current understanding, we can now link the microscopic description of our system to thermodynamic quantities concerning the macroscopic world. This time, to make the discussion simpler, we will start from the classical case. Given a physical quantity $A(q, p)$, it is meaningful to consider its expectation value,

$$ \langle A \rangle = \int d^f q\, d^f p\; \rho(q, p)\, A(q, p) \tag{23} $$

In the case of the microcanonical ensemble, the total energy of the system is fixed. However, in the case of the canonical ensemble we keep the temperature fixed. Therefore, since the energy fluctuates, it makes perfect sense to consider its expectation value $\langle H \rangle$, computed through equation (23). Using the Boltzmann distribution (20) we now see that

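$$
\begin{aligned}
\langle E \rangle &= \langle H \rangle = \int d^f q\, d^f p\; \rho(q,p)\, H(q,p) \\
&= \frac{1}{Z} \frac{1}{h^f} \int d^f q\, d^f p\; H(q,p)\, e^{-\beta H(q,p)} \\
&= -\frac{1}{Z} \frac{\partial Z}{\partial\beta} \\
&= -\frac{\partial \ln Z}{\partial\beta}
\end{aligned} \tag{24}
$$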

where in the third line we used the definition (21). This is one of the profound results of statistical mechanics, linking thermodynamic quantities to the Hamiltonian of a system via the partition function $Z$.

In order to prove the same result for a quantum mechanical system, we need to consider the discrete version of the Boltzmann distribution (7) to write

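$$ \langle E \rangle = \sum_n E_n\, p(n) = \frac{1}{Z} \sum_n E_n e^{-\beta E_n} = -\frac{1}{Z} \frac{\partial Z}{\partial\beta} = -\frac{\partial \ln Z}{\partial\beta} \tag{25} $$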

This time we used the quantum version of the definition of the partition function in equation (8).

Another statistical quantity we would like to consider is the variance

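$$ \Delta E^2 = \left\langle \left(E - \langle E \rangle\right)^2 \right\rangle = \langle E^2 \rangle - \langle E \rangle^2 \tag{26} $$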

For any positive integer $k$ we can write,

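$$ \langle E^k \rangle = \frac{1}{Z} \sum_n E_n^k e^{-\beta E_n} = \frac{1}{Z} \left(-\frac{\partial}{\partial\beta}\right)^k Z \tag{27} $$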

Setting $k = 2$ we have,

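$$ \Delta E^2 = \frac{1}{Z}\frac{\partial^2 Z}{\partial\beta^2} - \left(\frac{1}{Z}\frac{\partial Z}{\partial\beta}\right)^2 = \frac{\partial^2 \ln Z}{\partial\beta^2} = -\frac{\partial \langle E \rangle}{\partial\beta} \tag{28} $$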

Similarly to the case of the microcanonical ensemble, we can define the heat capacity

$$ C_V = \frac{\partial \langle E \rangle}{\partial T}\bigg|_{V,N} \tag{29} $$

After using the chain rule we have the revealing relation,

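$$ \Delta E^2 = -\frac{\partial T}{\partial\beta}\frac{\partial \langle E \rangle}{\partial T} = k_B T^2 C_V \tag{30} $$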

or equivalently

$$ \Delta E = \sqrt{k_B T^2 C_V} \tag{31} $$

This equation relates a statistical quantity describing the microscopic fluctuations of the energy to the heat capacity, a thermodynamic quantity that can be measured macroscopically. This relation is an example of a much more general result known as the fluctuation-dissipation theorem.

With the energy being an extensive quantity, we can argue that in the thermodynamic limit $N \to \infty$ we must have $\langle E \rangle \sim N$, and therefore $C_V \sim N$ since temperature is an intensive quantity. Equation (31) then suggests that $\Delta E \sim \sqrt N$ and

$$ \frac{\Delta E}{\langle E \rangle} \sim \frac{1}{\sqrt N} \to 0 \tag{32} $$

as expected from the central limit theorem. The energy distribution is therefore sharply peaked around $\langle E \rangle$, which shows that in the thermodynamic limit the canonical and microcanonical ensembles coincide.
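Relations (30) and (32) can be checked on the $N$ two-state systems of section 1.1.2. The sketch below assumes units with $k_B = 1$ and arbitrary values of $\epsilon$ and $T$; it takes $\Delta E^2$ from (28), evaluates $C_V$ by a finite difference, and compares the relative fluctuations for two values of $N$.

```python
import math

K_B = 1.0  # units with k_B = 1 (an assumption of this sketch)

def mean_energy(n, eps, temperature):
    """<E> for N distinguishable two-state systems, from (16)."""
    beta = 1.0 / (K_B * temperature)
    return n * eps / (math.exp(beta * eps) + 1.0)

def energy_variance(n, eps, temperature):
    """Delta E^2 = d^2 ln Z / d beta^2 with ln Z = N ln(1 + e^{-beta eps}), eq. (28)."""
    beta = 1.0 / (K_B * temperature)
    x = math.exp(beta * eps)
    return n * eps**2 * x / (x + 1.0) ** 2

def heat_capacity(n, eps, temperature, d_t=1e-5):
    """C_V = d<E>/dT by a central finite difference, eq. (29)."""
    return (mean_energy(n, eps, temperature + d_t) - mean_energy(n, eps, temperature - d_t)) / (2 * d_t)

n, eps, temperature = 1000, 1.0, 0.8
lhs = energy_variance(n, eps, temperature)
rhs = K_B * temperature**2 * heat_capacity(n, eps, temperature)   # eq. (30)

# Relative fluctuation Delta E / <E> should shrink like 1/sqrt(N), eq. (32).
rel = [math.sqrt(energy_variance(k, eps, temperature)) / mean_energy(k, eps, temperature)
       for k in (100, 10_000)]
print(lhs, rhs, rel[0] / rel[1])
```

Increasing $N$ by a factor of 100 reduces $\Delta E/\langle E\rangle$ by a factor of 10.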

1.1.5 Entropy

In the case of the microcanonical ensemble the entropy is given by Boltzmann’s formula

$$ S = k_B \ln \Omega(E) \tag{33} $$

in terms of the number $\Omega(E)$ of inequivalent microstates of energy $E$.

We can now try to follow Gibbs’ argument in order to understand entropy in a way that is applicable to the canonical ensemble. We consider a large number $W$ of identical copies of our system. We now want to count the inequivalent ways of partitioning our $W$ systems into $W_1$ systems in the state $n = 1$, $W_2$ systems in the state $n = 2$ and so on, so that $\sum_n W_n = W$. The number of inequivalent partitions is given by the multinomial coefficient,

$$ \Omega_W = \frac{W!}{\prod_n W_n!} \tag{34} $$

When we consider a large number $W$ of systems obeying a particular probability distribution $p(n)$, we expect that they will be distributed according to $W_n = p(n)\, W$. The total entropy is then,

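$$
\begin{aligned}
S_W &= k_B \ln \Omega_W = k_B \Big(\ln W! - \sum_n \ln W_n!\Big) \\
&\approx k_B \Big(W \ln W - W - \sum_n \left(W_n \ln W_n - W_n\right)\Big) \\
&= -k_B W \sum_n p(n) \ln p(n)
\end{aligned} \tag{35}
$$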

where we used Stirling’s approximation $\ln k! \approx k \ln k - k$ together with $\sum_n W_n = W$. From the above we see that to each copy of our system we can associate an entropy

$$ S = -k_B \sum_n p(n) \ln p(n) \tag{36} $$

We have arrived at a very general formula, the Gibbs entropy, which depends solely on the probability distribution of the ensemble. As we will see later, one can arrive at the probability distributions of both the microcanonical and the canonical ensembles by simply demanding the extremisation of the Gibbs entropy (36).
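The Stirling step in (35) can be checked numerically. This sketch assumes a made-up three-state distribution $p(n)$ and compares $\ln\Omega_W / W$, computed exactly with the log-gamma function, against the Gibbs entropy (36) in units of $k_B$ as the number of copies $W$ grows; `log_multinomial` is a hypothetical helper.

```python
import math

def log_multinomial(counts):
    """ln(W! / prod_n W_n!) from eq. (34), computed with log-gamma to avoid huge integers."""
    total = sum(counts)
    return math.lgamma(total + 1) - sum(math.lgamma(c + 1) for c in counts)

probs = [0.5, 0.3, 0.2]                        # hypothetical distribution p(n)
gibbs = -sum(p * math.log(p) for p in probs)   # eq. (36) in units of k_B

per_copy = {}
for copies in (10, 1_000, 100_000):
    counts = [round(p * copies) for p in probs]    # W_n = p(n) W
    per_copy[copies] = log_multinomial(counts) / copies
    print(copies, per_copy[copies], gibbs)
```

The entropy per copy approaches $-\sum_n p(n)\ln p(n)$ from below, with corrections of order $(\ln W)/W$ that Stirling's leading terms neglect.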

We will now apply the entropy formula (36) to the two ensembles we have considered so far.

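- The Microcanonical Ensemble:

  We consider a system at fixed energy $E$. The probability distribution is such that

  $$ p(n) = \begin{cases} \dfrac{1}{\Omega(E)} & \text{if } E_n = E \\ 0 & \text{otherwise} \end{cases} \tag{37} $$

  In order to apply (36) we need to sum over all states

  $$ S = -k_B \sum_{n:\, E_n = E} \frac{1}{\Omega(E)} \ln \frac{1}{\Omega(E)} = k_B \ln \Omega(E) \tag{38} $$

  which agrees with the Boltzmann entropy for the microcanonical ensemble, as we would expect.

- The Canonical Ensemble:

  For the canonical ensemble we will use the Boltzmann distribution (7) at a fixed temperature $T$. Summing over all microstates we have

  $$
  \begin{aligned}
  S &= -k_B \sum_n p(n) \ln p(n) \\
  &= -k_B \sum_n p(n) \ln \frac{e^{-\beta E_n}}{Z} \\
  &= k_B \sum_n p(n) \left(\beta E_n + \ln Z\right) \\
  &= k_B \beta \sum_n E_n \frac{e^{-\beta E_n}}{Z} + k_B \ln Z \sum_n \frac{e^{-\beta E_n}}{Z} \\
  &= k_B \beta \langle E \rangle + k_B \ln Z \\
  &= -k_B \beta \frac{\partial \ln Z}{\partial\beta} + k_B \ln Z \\
  &= k_B \frac{\partial}{\partial T}\left(T \ln Z\right)
  \end{aligned} \tag{39}
  $$

  In the fourth line we used once again equation (7) and the definition of the partition function (8). In the sixth line we used the fact that the energy average can be found from (25). Once again, we see that the partition function is much more than just a normalisation constant in the Boltzmann distribution.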
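Both results can be verified numerically by evaluating (36) directly. The sketch assumes $k_B = 1$, a hypothetical value $\Omega = 12$ for the microcanonical check, and a made-up spectrum (with one degenerate level) for the canonical check of the fifth line of (39).

```python
import math

K_B = 1.0  # units with k_B = 1

def gibbs_entropy(probs):
    """S = -k_B sum_n p(n) ln p(n), eq. (36); states with p = 0 contribute nothing."""
    return -K_B * sum(p * math.log(p) for p in probs if p > 0.0)

# Microcanonical check, eqs. (37)-(38): Omega equally likely states.
omega = 12
s_micro = gibbs_entropy([1.0 / omega] * omega)

# Canonical check, eq. (39), for a hypothetical spectrum with a degenerate level.
energies = [0.0, 1.0, 1.0, 2.5, 3.0]
beta = 1.3
weights = [math.exp(-beta * e) for e in energies]
z = sum(weights)
probs = [w / z for w in weights]
mean_e = sum(p * e for p, e in zip(probs, energies))
s_canonical = gibbs_entropy(probs)
s_formula = K_B * beta * mean_e + K_B * math.log(z)
print(s_micro, math.log(omega), s_canonical, s_formula)
```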

1.1.6 Digression: Entropy Extremising Distributions

One of the most fundamental ideas in this course is that the thermodynamic entropy is determined by the number of inequivalent occurrences of a system once a probability distribution over its microstates has been fixed. We have seen that the principle of a priori equal probabilities of microstates in Statistical Mechanics fixes those probability distributions in the microcanonical and canonical ensembles.

We now want to reverse the question. Suppose that we take the definition of entropy (36) for granted. The question we want to ask is how the principles of thermodynamics concerning entropy would then fix the probability distributions for us. We will pretend that we don’t know what the microstate probabilities are, and that all we know is that they should be such that the entropy (36) is maximised and that they should satisfy constraints compatible with the macroscopic/thermodynamic properties of the system.

The task for each ensemble is then to extremise the Gibbs entropy with respect to $p(n)$ under the constraint,

$$ \sum_n p(n) = 1 \tag{40} $$

and

- For the microcanonical ensemble with fixed energy $E$, we need to set $p(n) = 0$ unless $E_n = E$.

- For the canonical ensemble we would need to fix the temperature. Instead of that, we can equivalently demand that we fix the average energy

  $$ \sum_n p(n)\, E_n = \langle E \rangle \tag{41} $$

As we know, in order to extremise a function under certain constraints we need to introduce Lagrange multipliers. For the case of the microcanonical ensemble we introduce the function,

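$$ \mathcal L = -k_B \sum_{n:\, E_n = E} p(n) \ln p(n) - \lambda \Big(\sum_{n:\, E_n = E} p(n) - 1\Big) \tag{42} $$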

where $\lambda$ is the Lagrange multiplier enforcing the constraint (40), and the sums run only over states with $E_n = E$. After introducing the Lagrange multiplier we can now extremise the function $\mathcal L$ by demanding,

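$$ \frac{\partial \mathcal L}{\partial p(n)} = -k_B\left(\ln p(n) + 1\right) - \lambda = 0 \quad \Rightarrow \quad p(n) = e^{-1 - \lambda/k_B} \tag{43} $$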

which does not depend on the state, as long as the state satisfies the macroscopic constraint $E_n = E$. We therefore see that extremisation of the entropy is equivalent to Boltzmann’s principle of a priori equal probabilities. In order to fix the constant that appears in the expression for the probabilities, we now examine the constraint,

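$$ 1 = \sum_{n:\, E_n = E} p(n) = \sum_{n:\, E_n = E} e^{-1 - \lambda/k_B} \tag{46} $$

$$ 1 = \Omega(E)\, e^{-1 - \lambda/k_B} \tag{49} $$

$$ e^{-1 - \lambda/k_B} = \frac{1}{\Omega(E)} \tag{50} $$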

From the above we recover our familiar

$$ p(n) = \frac{1}{\Omega(E)} \quad \text{for } E_n = E \tag{51} $$

We will leave the case of the canonical ensemble, where both constraints (40) and (41) have to be imposed with two Lagrange multipliers, as an exercise.
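Without doing the exercise analytically, one can at least check numerically that the Boltzmann distribution is a constrained maximum of (36). The sketch below assumes a hypothetical five-level spectrum and builds perturbations $d$ that satisfy $\sum_n d_n = 0$ and $\sum_n d_n E_n = 0$, so that (40) and (41) still hold. It does this by projecting random vectors onto the null space of the constraint matrix (obtained from NumPy's SVD), then confirms that none of them increases the entropy.

```python
import numpy as np

rng = np.random.default_rng(1)
energies = np.array([0.0, 0.5, 1.2, 2.0, 3.1])   # hypothetical spectrum
beta = 0.9

p_boltzmann = np.exp(-beta * energies)
p_boltzmann /= p_boltzmann.sum()

def entropy(p):
    """Gibbs entropy (36) in units of k_B."""
    return -np.sum(p * np.log(p))

# Perturbations d with sum(d) = 0 and sum(d * E) = 0 preserve constraints (40) and (41).
constraints = np.vstack([np.ones_like(energies), energies])
_, _, vt = np.linalg.svd(constraints)
null_space = vt[2:]          # orthonormal rows spanning {d : constraints @ d = 0}

s0 = entropy(p_boltzmann)
best_change = -np.inf
for _ in range(200):
    d = rng.normal(size=null_space.shape[0]) @ null_space
    d *= 0.01 / np.abs(d).max()      # small step that keeps every probability positive
    best_change = max(best_change, entropy(p_boltzmann + d) - s0)
print(s0, best_change)   # best_change < 0: no allowed perturbation increases S
```

This is only a local numerical check, not a proof. The first-order change in $S$ vanishes because $\ln p(n) = -\beta E_n - \ln Z$ is a linear combination of the two constraint directions.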

1.1.7 Most Likely vs Average Energy

In this subsection we want to discuss, within the canonical ensemble, the probability of energy levels instead of specific microstates. Given that all microstates of energy $E_i$ have the same probability, we have

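$$ \mathcal P(E_i) = \sum_{n:\, E_n = E_i} p(n) = \sum_{n:\, E_n = E_i} \frac{e^{-\beta E_i}}{Z} \tag{54} $$

$$ \mathcal P(E_i) = \frac{\Omega(E_i)\, e^{-\beta E_i}}{Z} \tag{55} $$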

After using the Boltzmann entropy $S(E_i) = k_B \ln \Omega(E_i)$ for the energy level $E_i$, we have

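$$ \mathcal P(E_i) = \frac{1}{Z}\, e^{S(E_i)/k_B - \beta E_i} = \frac{1}{Z}\, e^{-\beta\left(E_i - T S(E_i)\right)} \tag{56} $$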

Correspondingly, the partition function can be written as

$$ Z = \sum_i \Omega(E_i)\, e^{-\beta E_i} = \sum_i e^{-\beta\left(E_i - T S(E_i)\right)} \tag{57} $$

where we used the definition of the Boltzmann entropy associated to each of the energy levels $E_i$.

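The most probable energy level $E^*$ is specified by minimising $E_i - T S(E_i)$ with respect to $E_i$, which for a macroscopic system we can treat as a continuous variable $E$. This gives us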

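$$
\begin{aligned}
0 &= \frac{\partial}{\partial E}\left(E - T S(E)\right)\bigg|_{E = E^*} \\
&= 1 - T \frac{\partial S}{\partial E}\bigg|_{E = E^*} \\
&= 1 - \frac{T}{T(E^*)}
\end{aligned} \tag{58}
$$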

Inverting the last equation then fixes $E^*$. In the third line we used the definition of the temperature $T(E)$ associated to the energy level $E$ according to the microcanonical ensemble, $1/T(E) = \partial S/\partial E$. This is of course in general different from the temperature $T$ at which we hold our system in the canonical ensemble. However, we see that the most likely energy level is the one whose microcanonical temperature is equal to $T$.

The important point of this computation is that the free energy,

$$ F(E) = E - T S(E) \tag{59} $$

is minimised for a macroscopic system at fixed $T$, $V$ and $N$. We will see much more about this quantity from a thermodynamics point of view in the next subsection. We can see that this is achieved through a competition between,

- Minimising the energy $E$ ("energy minimisation"), which wins at low $T$,

- Maximising the degeneracy $\Omega(E)$ through $S(E)$ ("entropy maximisation"), which wins at high $T$.

In the thermodynamic limit $N \to \infty$, the partition function (57) is dominated by its largest term, which is the term with $E_i = E^*$. We therefore have,

$$ Z \approx e^{-\beta\left(E^* - T S(E^*)\right)} = e^{-\beta F(E^*)} \tag{60} $$

At this point, it is worth using the above thermodynamic limit approximation to compute the average energy,

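$$
\begin{aligned}
\langle E \rangle &= -\frac{\partial \ln Z}{\partial\beta} \approx \frac{\partial}{\partial\beta}\left(\beta E^* - \frac{S(E^*)}{k_B}\right) \\
&= E^* + \left(\beta - \frac{1}{k_B}\frac{\partial S}{\partial E}\bigg|_{E^*}\right)\frac{\partial E^*}{\partial\beta} \\
&= E^*
\end{aligned} \tag{61}
$$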

In the penultimate line, the bracket vanishes due to equation (58), which $E^*$ satisfies. We therefore see that in the thermodynamic limit:

- The average energy is also equal to the most likely energy level to occur.

- The ratio $\Delta E/\langle E \rangle$ goes to zero.

Another interesting quantity we can compute in the thermodynamic limit is the entropy (39). We can write

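$$
\begin{aligned}
S &= k_B \beta \langle E \rangle + k_B \ln Z \\
&\approx k_B \beta E^* - k_B \beta\left(E^* - T S(E^*)\right) \\
&= S(E^*) = k_B \ln \Omega(E^*)
\end{aligned} \tag{62}
$$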

where we used the result (61) for the average energy $\langle E \rangle$ together with (60). We therefore see that in the limit we are interested in, the Gibbs entropy of the canonical distribution coincides with the Boltzmann entropy of the most likely energy level.
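The dominance of the largest term can be seen explicitly for the $N$ two-state systems of section 1.1.2, where the level $E_M = \epsilon M$ has degeneracy $\Omega = \binom{N}{M}$. This sketch, with illustrative parameter values and hypothetical helper names, compares the exact $\ln Z_N$ from (13) with the logarithm of the largest term in (57), and the most likely energy $E^*$ with $\langle E \rangle$.

```python
import math

def log_binomial(n, k):
    """ln C(n, k) via log-gamma: the Boltzmann entropy S(E_M)/k_B of the level E_M = eps * M."""
    return math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)

def compare(n, eps=1.0, beta=0.6):
    """Return (relative gap between ln Z and its largest term, E*/<E>) for N two-state systems."""
    log_z = n * math.log1p(math.exp(-beta * eps))                  # exact ln Z_N, eq. (13)
    # Exponent of each term of (57): S(E_M)/k_B - beta E_M = -beta F(E_M)
    exponents = [log_binomial(n, m) - beta * eps * m for m in range(n + 1)]
    m_star = max(range(n + 1), key=exponents.__getitem__)         # most likely level E*
    mean_e = n * eps / (math.exp(beta * eps) + 1.0)               # <E>, eq. (16)
    return (log_z - exponents[m_star]) / log_z, eps * m_star / mean_e

for n in (10, 1_000, 100_000):
    print(n, compare(n))
```

For $N = 10$ the largest term misses roughly a third of $\ln Z$. For $N = 10^5$ the gap is of order $10^{-4}$, and $E^*/\langle E\rangle$ is equal to one to the same accuracy.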

1.1.8 The Free Energy

In the canonical ensemble we define the free energy to be,

$$ F = \langle E \rangle - T S \tag{63} $$

which by construction is a function of $T$, $V$ and $N$. More generally, independently of the thermodynamic limit, we can use equation (39) to write

$$ T S = \langle E \rangle + k_B T \ln Z \tag{64} $$

The free energy (63) then reads

$$ F = -k_B T \ln Z \tag{65} $$

and we see another instance where the partition function fixes a macroscopic quantity. Notice that we can invert this relation to express the partition function in terms of the free energy of the system,

$$ Z = e^{-\beta F} \tag{66} $$

One might wonder why the free energy (63) is important. In the previous subsection 1.1.7 we saw that in the thermodynamic limit the energy level that extremises this quantity is the most likely one. From a thermodynamics point of view, it is the appropriate thermodynamic potential when considering systems at fixed temperature, volume and particle number.

To see this, notice that the Gibbs entropy (39) is simply minus the partial derivative of the free energy with respect to temperature,

$$ S = -\frac{\partial F}{\partial T}\bigg|_{V,N} \tag{67} $$

Apart from this, consider the first law of thermodynamics,

$$ dE = T\, dS - P\, dV + \mu\, dN \tag{68} $$

where $P$ is the pressure and we have included the chemical potential $\mu = \partial E/\partial N|_{S,V}$, defined as the change in energy when we increase the number of particles at fixed entropy and volume. This quantity will become very important to us later when we discuss the grand canonical ensemble. The first law then lets us write the differential of the free energy as,

$$ dF = dE - T\, dS - S\, dT = -S\, dT - P\, dV + \mu\, dN \tag{69} $$

from which we can clearly see that $F$ is a function of $T$, $V$ and $N$, and therefore the most natural thermodynamic potential when these variables are fixed. Moreover, we can see that in addition to the entropy, we can extract the pressure and the chemical potential from the partial derivatives with respect to volume and particle number,

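$$ P = -\frac{\partial F}{\partial V}\bigg|_{T,N}, \qquad \mu = \frac{\partial F}{\partial N}\bigg|_{T,V} \tag{70} $$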

As we already know, this is a general property of Legendre transformations. We started from the energy $E$, which has to be considered as a function of entropy $S$, volume $V$ and particle number $N$. In this language, the temperature $T = \partial E/\partial S|_{V,N}$ is the derivative of energy with respect to entropy. The free energy (63) is simply the Legendre transformation which trades $S$ for $T$, the partial derivative of $E$ with respect to $S$.
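Relations (65) and (67) can be checked against the Gibbs entropy evaluated directly on the Boltzmann distribution. This is a minimal sketch, assuming $k_B = 1$ and a hypothetical spectrum at fixed $V$ and $N$; the derivative in (67) is taken by a central finite difference.

```python
import math

K_B = 1.0                                   # units with k_B = 1 (assumption of the sketch)
energies = [0.0, 0.7, 0.7, 1.5, 2.2]        # hypothetical spectrum at fixed V and N

def free_energy(temperature):
    """F = -k_B T ln Z, eq. (65)."""
    z = sum(math.exp(-e / (K_B * temperature)) for e in energies)
    return -K_B * temperature * math.log(z)

def gibbs_entropy(temperature):
    """S = -k_B sum_n p(n) ln p(n) evaluated on the Boltzmann distribution (7)."""
    weights = [math.exp(-e / (K_B * temperature)) for e in energies]
    z = sum(weights)
    return -K_B * sum((w / z) * math.log(w / z) for w in weights)

temperature, d_t = 0.9, 1e-5
# Eq. (67): S = -dF/dT at fixed V and N, by a central finite difference.
s_from_f = -(free_energy(temperature + d_t) - free_energy(temperature - d_t)) / (2 * d_t)
print(s_from_f, gibbs_entropy(temperature))
```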

1.2 The Grand Canonical Ensemble

When we moved from the microcanonical ensemble to the canonical, we dropped the idea of an energetically closed system. More specifically, we brought the system $\mathcal S$ in thermal contact with a reservoir $\mathcal R$, and thermodynamic equilibrium implied that the two systems would have to have the same temperature $T$.

We will do something very similar in the case of the grand canonical ensemble, with the extra complication that $\mathcal S$ and $\mathcal R$ can also exchange particles. In parallel with the case of energy, the system can have particle number $N$ and the reservoir can have particle number $N_R$. Even though $N$ and $N_R$ are not separately conserved, the total particle number $N_{\text{tot}} = N + N_R$ is. In order to describe equilibrium in this case, it will be useful to introduce the notion of chemical potential.

1.2.1 The Chemical Potential

Suppose that we bring in thermal contact two systems $\mathcal S_1$ and $\mathcal S_2$ which are also allowed to exchange particles. The total system can be considered to be in the microcanonical ensemble with fixed total energy $E = E_1 + E_2$, total particle number $N = N_1 + N_2$ and fixed volumes.

Since $E_1$ and $N_1$ (and therefore $E_2 = E - E_1$ and $N_2 = N - N_1$) are allowed to fluctuate, a natural question to ask is what the most likely partition of energy and particle number between the two systems is. In order to answer it, we need to ask how many microstates of the total system are compatible with this partition. This is a simple question in combinatorics and the answer is

$$ \Omega(E_1, N_1) = \Omega_1(E_1, N_1)\, \Omega_2(E - E_1, N - N_1) \tag{71} $$

where $\Omega_a(E_a, N_a)$ is the number of microstates of the system $\mathcal S_a$ at energy $E_a$ and particle number $N_a$. Alternatively, we can express this in terms of the Boltzmann entropies $S_a = k_B \ln \Omega_a$ of the two systems according to

$$ \Omega(E_1, N_1) = e^{S_1(E_1, N_1)/k_B}\, e^{S_2(E - E_1, N - N_1)/k_B} \tag{72} $$

The principle of equal a priori probabilities would then tell us that the most likely partition is the one that maximises $\Omega(E_1, N_1)$ under the constraints of fixed total energy and particle number.

In the case of only thermal contact, these considerations brought you to the discussion of temperature and the zeroth law of thermodynamics. This came about by extremising with respect to the partition of energy. In the present case, we will have to extremise with respect to both energy and particle number while retaining the constraints. We can write,

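$$ \Omega(E_1, N_1) = \exp\left[\frac{1}{k_B}\Big(S_1(E_1, N_1) + S_2(E - E_1, N - N_1)\Big)\right] \tag{73} $$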

and then extremise the exponent with respect to $E_1$ and $N_1$. Doing so, we obtain

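$$
\begin{aligned}
\frac{\partial S_1}{\partial E_1}\bigg|_{N_1} - \frac{\partial S_2}{\partial E_2}\bigg|_{N_2} &= 0 \\
\frac{\partial S_1}{\partial N_1}\bigg|_{E_1} - \frac{\partial S_2}{\partial N_2}\bigg|_{E_2} &= 0
\end{aligned} \tag{76}
$$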

The first line of the above system of equations is nothing but the zeroth law of thermodynamics after identifying

$$ \frac{1}{T_a} = \frac{\partial S_a}{\partial E_a}\bigg|_{N_a, V_a}, \qquad a = 1, 2 \tag{83} $$

The second equation in (76) tells us that in thermodynamic equilibrium, apart from $T$, there is another intensive quantity which is equal between the two systems in contact. This is the chemical potential, defined through,

$$ \mu = -T \frac{\partial S}{\partial N}\bigg|_{E, V} \tag{84} $$

We see that every time we allow one of the extensive quantities to not be conserved in our system, there is a corresponding intensive one that stays fixed in thermodynamic equilibrium. In the case of the grand canonical ensemble, the quantities that we are going to keep fixed are the temperature $T$, the volume $V$ and the chemical potential $\mu$.

1.2.2 The Grand Canonical Partition Function

The discussion for the case of the grand canonical ensemble parallels the philosophy we followed in section 1.1 for the canonical ensemble. The only difference is that now we will also allow the particle numbers $N$ and $N_R$ to vary while $N + N_R = N_{\text{tot}}$ stays fixed.

Once again, we want to ask what is the probability for a specific microstate $n$ of $\mathcal S$, consisting of $N_n$ particles and energy $E_n$, to occur. Just like in the case of the canonical ensemble, the total number of compatible microstates of $\mathcal S + \mathcal R$ is $\Omega_R(E_{\text{tot}} - E_n, N_{\text{tot}} - N_n)$. Once again, using the definition of Boltzmann entropy we have,

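$$ \Omega_R(E_{\text{tot}} - E_n, N_{\text{tot}} - N_n) = e^{S_R(E_{\text{tot}} - E_n,\, N_{\text{tot}} - N_n)/k_B} \tag{85} $$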

We will now follow the same steps as in the canonical ensemble and expand

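$$
\begin{aligned}
S_R(E_{\text{tot}} - E_n, N_{\text{tot}} - N_n) &\approx S_R(E_{\text{tot}}, N_{\text{tot}}) - E_n \frac{\partial S_R}{\partial E_R} - N_n \frac{\partial S_R}{\partial N_R} \\
&= S_R(E_{\text{tot}}, N_{\text{tot}}) - \frac{E_n}{T} + \frac{\mu N_n}{T}
\end{aligned} \tag{86}
$$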

In the last line we used the definition of the chemical potential in equation (84). The principle of a priori equal probabilities and equation (85) then yield,

$$ p(n) = \frac{e^{-\beta(E_n - \mu N_n)}}{\mathcal Z} \tag{87} $$

with $\mathcal Z$ a constant of normalisation for the probability distribution. The constant $\mathcal Z$ is then

$$ \mathcal Z = \sum_n e^{-\beta(E_n - \mu N_n)} \tag{88} $$

which is also known as the grand canonical partition function. As we will see later, the grand canonical partition function fixes all the macroscopic quantities we might wish to extract. This is in parallel to the partition function (8) in the case of the canonical ensemble.
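As a concrete illustration of (87) and (88), consider a hypothetical lattice gas that is not part of the notes: $M$ independent sites, each either empty (energy 0) or holding one particle with energy $\epsilon$. The sketch sums the grand canonical weights over all $2^M$ microstates and compares with the closed forms that follow from the sites being independent.

```python
import itertools
import math

# Hypothetical model: M independent sites, each empty (energy 0) or holding one particle (energy eps).
M, eps, beta, mu = 8, 1.0, 1.5, 0.4

grand_z = 0.0
weighted_n = 0.0
for occupation in itertools.product((0, 1), repeat=M):
    n_particles = sum(occupation)
    energy = eps * n_particles
    weight = math.exp(-beta * (energy - mu * n_particles))   # numerator of (87)
    grand_z += weight                                         # eq. (88)
    weighted_n += n_particles * weight

mean_n = weighted_n / grand_z
closed_form_z = (1.0 + math.exp(-beta * (eps - mu))) ** M     # sites are independent
closed_form_n = M / (math.exp(beta * (eps - mu)) + 1.0)
print(grand_z, closed_form_z, mean_n, closed_form_n)
```

Here the particle number of a microstate varies from 0 to $M$, and the chemical potential $\mu$ controls how strongly states with more particles are weighted.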

Up to now we have been using notation which is appropriate for a quantum mechanical system. For a classical system, the concepts we have used in our derivation remain the same. More specifically, the microstates are going to be labelled by the particle number $N$, which is not fixed, and the positions $q_i$ and momenta $p_i$ of our particles, with $i = 1, \dots, N$ (in the notation of this subsection, $q_i$ and $p_i$ can be vectors in a $d$-dimensional space). The energy of the microstate is then given in terms of the $N$-body Hamiltonian $H_N$ according to $E = H_N(q_1, \dots, q_N, p_1, \dots, p_N)$. The analogue of the distribution (87) is the density

$$ \rho_N(q, p) = \frac{1}{h^{f_N}} \frac{e^{-\beta\left(H_N(q,p) - \mu N\right)}}{\mathcal Z} \tag{89} $$

For the case of distinguishable particles the classical grand canonical partition function is,

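$$ \mathcal Z = \sum_{N=0}^\infty \frac{1}{h^{f_N}} \int_{\Gamma_N} d^{f_N} q\, d^{f_N} p\; e^{-\beta\left(H_N(q,p) - \mu N\right)} \tag{90} $$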

where $\Gamma_N$ is the $N$-body phase space and $f_N = dN$ the corresponding number of degrees of freedom.

As an aside, we note that the grand canonical probability distribution (87) can be derived by following the ideas of section 1.1.6 around the Gibbs entropy defined in equation (36). For the case of the grand canonical ensemble, the appropriate constraints are,

$$
\begin{aligned}
\sum_n p(n) &= 1 \\
\sum_n p(n)\, E_n &= \langle E \rangle \\
\sum_n p(n)\, N_n &= \langle N \rangle
\end{aligned}
$$

The first line makes sure that the collection of numbers $p(n)$ does indeed constitute a probability distribution. The second and the third constraints are equivalent to fixing the temperature and the chemical potential of the system.

1.2.3 Thermodynamics at fixed $T$ and $\mu$

In this subsection we follow the logic of subsections 1.1.4, 1.1.5 and 1.1.8 in order to relate interesting thermodynamic quantities to the grand canonical partition function of equation (88). In the grand canonical ensemble we keep fixed $T$, $V$ and $\mu$. From the point of view of thermodynamics, we know that energy can be considered as a function of entropy $S$, volume $V$ and particle number $N$. As we already know, the first law of thermodynamics reads,

$$ dE = T\, dS - P\, dV + \mu\, dN \tag{91} $$

and we note that

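$$ T = \frac{\partial E}{\partial S}\bigg|_{V,N}, \qquad \mu = \frac{\partial E}{\partial N}\bigg|_{S,V} \tag{92} $$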

The above implies that the natural potential to consider at fixed $T$ and $\mu$ is the double Legendre transformation,

$$ \Phi = E - T S - \mu N \tag{93} $$

which is known as the grand canonical potential. To see that $\Phi$ only depends on $T$, $V$ and $\mu$, we compute the differential,

$$ d\Phi = dE - T\, dS - S\, dT - \mu\, dN - N\, d\mu \tag{94} $$

which in combination with the first law (91) gives,

$$ d\Phi = -S\, dT - P\, dV - N\, d\mu \tag{95} $$

From the above we read off,

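$$ S = -\frac{\partial \Phi}{\partial T}\bigg|_{V,\mu}, \qquad P = -\frac{\partial \Phi}{\partial V}\bigg|_{T,\mu}, \qquad N = -\frac{\partial \Phi}{\partial \mu}\bigg|_{T,V} \tag{96} $$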

as we might have expected from an appropriate thermodynamic potential.

1.2.4 Thermodynamics from Statistical Mechanics

Given that we know the probability distribution of microstates, it is natural to identify $E$ with $\langle E \rangle$ and $N$ with $\langle N \rangle$ in order to make the connection between statistics and thermodynamics. The least obvious thermodynamic quantity to identify is the entropy, which is conjectured to be given by the Gibbs entropy (36). Ultimately, we would like to obtain the grand canonical potential from a microscopic description.

We start by examining the Gibbs entropy (36), for which we can write,

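$$
\begin{aligned}
S &= -k_B \sum_n p(n) \ln p(n) \\
&= -k_B \sum_n p(n) \ln \frac{e^{-\beta(E_n - \mu N_n)}}{\mathcal Z} \\
&= k_B \sum_n p(n) \Big(\beta(E_n - \mu N_n) + \ln \mathcal Z\Big) \\
&= k_B \beta\left(\langle E \rangle - \mu \langle N \rangle\right) + k_B \ln \mathcal Z \sum_n p(n) \\
&= k_B \beta\left(\langle E \rangle - \mu \langle N \rangle\right) + k_B \ln \mathcal Z
\end{aligned} \tag{97}
$$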

In the step from the fourth to the fifth line we used equation (88), which guarantees that $\sum_n p(n) = 1$. This result is very analogous to equation (39) of the canonical ensemble. Of course, in the present case, we have the grand canonical partition function appearing instead.

Notice that the third line in the derivation of (97) can be written in an alternative, suggestive way. After using the form of the grand canonical probability distribution (87) we can write,

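$$ \sum_n p(n)\left(E_n - \mu N_n\right) = \frac{1}{\mathcal Z} \sum_n \left(E_n - \mu N_n\right) e^{-\beta(E_n - \mu N_n)} = -\frac{\partial \ln \mathcal Z}{\partial\beta}\bigg|_{\mu, V} \tag{98} $$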

from where we can read off the relation,

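$$ \langle E \rangle - \mu \langle N \rangle = -\frac{\partial \ln \mathcal Z}{\partial\beta}\bigg|_{\mu, V} \tag{99} $$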

Combining this result with (97) we recover,

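$$ S = k_B \ln \mathcal Z - k_B \beta \frac{\partial \ln \mathcal Z}{\partial\beta}\bigg|_{\mu,V} = \frac{\partial}{\partial T}\left(k_B T \ln \mathcal Z\right)\bigg|_{V,\mu} \tag{100} $$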

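which is the first of the thermodynamic relations in (96), once we identify the grand canonical potential as $\Phi = -k_B T \ln \mathcal Z$. Indeed, (97) can be rearranged into $\langle E \rangle - T S - \mu \langle N \rangle = -k_B T \ln \mathcal Z$, which is precisely the definition (93).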

1.2.5 Statistical Fluctuations

In this subsection we would like to study the convergence properties of the grand canonical distribution in the thermodynamic limit $V \to \infty$ with $T$ and $\mu$ fixed. In this limit we expect that the extensive quantities we do not fix will scale like the volume, which is the only extensive quantity that we hold fixed. This argument constrains the large volume behaviour of the average energy and particle number according to,

$$ \langle E \rangle = V\, u(T, \mu), \qquad \langle N \rangle = V\, n(T, \mu) \tag{103} $$

where $u$ and $n$ are functions of $T$ and $\mu$ only.

A good measure of the statistical fluctuations in our system is provided by the variance

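$$ \Delta E^2 = \langle E^2 \rangle - \langle E \rangle^2, \qquad \Delta N^2 = \langle N^2 \rangle - \langle N \rangle^2 \tag{104} $$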

In the previous subsection we managed to express the averages of energy and particle number in terms of the grand canonical partition function $\mathcal Z$. Here, we will need to consider the higher moments $\langle N^k \rangle$ and $\langle E^k \rangle$ for any positive integer $k$. Starting from the particle number we have,

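$$ \langle N^k \rangle = \frac{1}{\mathcal Z} \sum_n N_n^k e^{-\beta(E_n - \mu N_n)} = \frac{1}{\mathcal Z} \left(\frac{1}{\beta}\frac{\partial}{\partial\mu}\right)^k \mathcal Z \tag{105} $$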

In order to obtain a similar result for the energy, we define the linear differential operator

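$$ \mathcal D = -\frac{\partial}{\partial\beta}\bigg|_{\mu} + \frac{\mu}{\beta}\frac{\partial}{\partial\mu}\bigg|_{\beta} \tag{106} $$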

This allows us to write

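$$ \langle E^k \rangle = \frac{1}{\mathcal Z} \sum_n E_n^k e^{-\beta(E_n - \mu N_n)} = \frac{1}{\mathcal Z}\, \mathcal D^k \mathcal Z \tag{107} $$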

After these results, we can now go back to the discussion of the variances (104). In order to compute the particle number variance, we will use equation (105) for $k = 1, 2$ to obtain,

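$$ \Delta N^2 = \frac{1}{\beta^2}\left[\frac{1}{\mathcal Z}\frac{\partial^2 \mathcal Z}{\partial\mu^2} - \left(\frac{1}{\mathcal Z}\frac{\partial \mathcal Z}{\partial\mu}\right)^2\right] = \frac{1}{\beta^2}\frac{\partial^2 \ln \mathcal Z}{\partial\mu^2} = \frac{1}{\beta}\frac{\partial \langle N \rangle}{\partial\mu}\bigg|_{\beta, V} \tag{108} $$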

Similarly, for the energy variance in (104) we obtain,

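$$
\begin{aligned}
\Delta E^2 &= \frac{1}{\mathcal Z}\mathcal D^2 \mathcal Z - \left(\frac{1}{\mathcal Z}\mathcal D \mathcal Z\right)^2 \\
&= \mathcal D\left(\frac{1}{\mathcal Z}\mathcal D \mathcal Z\right) \\
&= \mathcal D \langle E \rangle = -\frac{\partial \langle E \rangle}{\partial\beta}\bigg|_{\mu} + \frac{\mu}{\beta}\frac{\partial \langle E \rangle}{\partial\mu}\bigg|_{\beta}
\end{aligned} \tag{109}
$$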

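In the first and the third lines we used equation (107) for $k = 1, 2$. In the second line we used the fact that $\mathcal D$ is a first order differential operator.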
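Both variance formulas can be tested on the same hypothetical lattice gas ($M$ independent sites, each empty or holding one particle of energy $\epsilon$). The sketch below computes the moments by brute-force summation over microstates and compares the variances (104) with the derivative expressions (108) and (109), using central finite differences for the derivatives; `moments` and `derivative` are hypothetical helpers.

```python
import itertools
import math

# Hypothetical lattice gas: M sites, each empty or holding one particle of energy eps.
M, eps = 8, 1.0

def moments(beta, mu):
    """Return <N>, <N^2>, <E>, <E^2> by summing the weights (87) over all 2**M microstates."""
    z = 0.0
    sums = [0.0, 0.0, 0.0, 0.0]
    for occupation in itertools.product((0, 1), repeat=M):
        n = sum(occupation)
        e = eps * n
        w = math.exp(-beta * (e - mu * n))
        z += w
        for i, x in enumerate((n, n * n, e, e * e)):
            sums[i] += x * w
    return [s / z for s in sums]

beta, mu, step = 1.5, 0.4, 1e-5
mean_n, mean_n2, mean_e, mean_e2 = moments(beta, mu)
var_n = mean_n2 - mean_n**2          # eq. (104)
var_e = mean_e2 - mean_e**2

def derivative(index, d_beta=0.0, d_mu=0.0):
    """Central difference of moments(...)[index] along (d_beta, d_mu); divide by 2*step."""
    plus = moments(beta + d_beta, mu + d_mu)[index]
    minus = moments(beta - d_beta, mu - d_mu)[index]
    return (plus - minus) / (2 * step)

var_n_formula = derivative(0, d_mu=step) / beta                                   # eq. (108)
d_mean_e = -derivative(2, d_beta=step) + (mu / beta) * derivative(2, d_mu=step)   # D<E>, eq. (109)
print(var_n, var_n_formula, var_e, d_mean_e)
```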

Now that we have the expressions (108) and (109), we can ask what happens to the statistical fluctuations in the thermodynamic limit. Our discussion around equation (103), together with the fact that the derivatives in equations (108) and (109) act on $\langle N \rangle$ and $\langle E \rangle$, which are proportional to $V$, leads us to the conclusion that

$$ \Delta N^2 \sim V, \qquad \Delta E^2 \sim V \tag{110} $$

and therefore

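$$ \frac{\Delta N}{\langle N \rangle} \sim \frac{1}{\sqrt V} \to 0, \qquad \frac{\Delta E}{\langle E \rangle} \sim \frac{1}{\sqrt V} \to 0 \tag{111} $$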

We see that even though we might be fixing different quantities in the three statistical ensembles, they all describe the same physics in the thermodynamic limit with the corresponding statistical fluctuations becoming unimportant.

1.3 Relations Between Ensembles

We have seen that in the thermodynamic limit, the microcanonical, the canonical and the grand canonical ensembles all lead to the same description. In this subsection we will make the connection between the different ensembles manifest even without taking the limit. In order to do this quantitatively, we need to identify appropriate quantities for each ensemble. Table 1 outlines the correspondence we are going to establish.

| Ensemble | Important Quantity |
|---|---|
| Microcanonical | Number of microstates $\Omega(E, V, N)$ |
| Canonical | Partition function $Z(T, V, N)$ |
| Grand Canonical | Grand canonical partition function $\mathcal Z(T, V, \mu)$ |

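- Grand Canonical ↔ Canonical

  We start by showing the correspondence for the quantum mechanical case, noting that the classical case is analogous. The canonical partition function for a system with a fixed number of particles $N$ can be written as,

  $$ Z_N = \sum_{n:\, N_n = N} e^{-\beta E_n} \tag{112} $$

  where $N_n$ is the particle number of the state $n$. This allows us to write the grand canonical partition function

  $$
  \begin{aligned}
  \mathcal Z &= \sum_n e^{-\beta(E_n - \mu N_n)} \\
  &= \sum_{N=0}^\infty \sum_{n:\, N_n = N} e^{-\beta(E_n - \mu N_n)} \\
  &= \sum_{N=0}^\infty \sum_{n:\, N_n = N} e^{\beta\mu N} e^{-\beta E_n} \\
  &= \sum_{N=0}^\infty e^{\beta\mu N} \sum_{n:\, N_n = N} e^{-\beta E_n} \\
  &= \sum_{N=0}^\infty e^{\beta\mu N} Z_N \\
  &= \sum_{N=0}^\infty z^N Z_N
  \end{aligned} \tag{113}
  $$

  where in the fifth line we used equation (112) and we also defined the fugacity $z = e^{\beta\mu}$. Equation (113) is the equation defining the correspondence we promised earlier. Notice that we can also invert equation (113) to write,

  $$ Z_N = \frac{1}{N!}\frac{\partial^N \mathcal Z}{\partial z^N}\bigg|_{z = 0} \tag{114} $$

- Microcanonical ↔ Canonical

  In order to establish the correspondence between the microcanonical and the canonical ensembles, we will need to introduce the energy density of microstates $g(E)$. Upon integration between e.g. $E_1$ and $E_2$, this quantity yields the number of microstates with energy between $E_1$ and $E_2$. For the classical case, $g(E)$ is the phase space volume per unit energy, measured in units of $h^f$, i.e.

  $$ g(E) = \frac{1}{h^f}\int d^f q\, d^f p\; \delta\big(E - H(q,p)\big) \tag{115} $$

  For the quantum mechanical case, where the energy spectrum $E_n$ is discrete, the density of microstates is

  $$ g(E) = \sum_n \delta(E - E_n) \tag{116} $$

  Indeed, integrating between $E_1$ and $E_2$ we obtain,

  $$ \int_{E_1}^{E_2} dE\, g(E) = \sum_n \int_{E_1}^{E_2} dE\, \delta(E - E_n) = \#\{n : E_1 < E_n < E_2\} \tag{117} $$

  as we would expect.

  The density of microstates $g(E)$ carries the same information as the function $\Omega(E)$, which is the interesting quantity of the microcanonical ensemble. In the present context, we can use it to connect the canonical to the microcanonical ensemble. For the quantum case we can write,

  $$ Z = \sum_n e^{-\beta E_n} = \sum_n \int dE\, \delta(E - E_n)\, e^{-\beta E} = \int dE\, g(E)\, e^{-\beta E} \tag{118} $$

  We see that the canonical partition function is the Laplace transform of the density of microstates. The same result is also true for the classical case. To see this, we can write

  $$ Z = \frac{1}{h^f}\int d^f q\, d^f p\; e^{-\beta H(q,p)} = \int dE\, \frac{1}{h^f}\int d^f q\, d^f p\; \delta\big(E - H(q,p)\big)\, e^{-\beta E} = \int dE\, g(E)\, e^{-\beta E} \tag{119} $$

  We therefore see that, independently of whether we are dealing with a classical or a quantum system (and taking the energies to be bounded from below by zero), we must have

  $$ Z(\beta) = \int_0^\infty dE\, g(E)\, e^{-\beta E} \tag{120} $$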
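The fugacity expansion (113) and its inversion (114) can be illustrated with the hypothetical lattice gas used above, for which $Z_N = \binom{M}{N} e^{-\beta\epsilon N}$. In the sketch, the $N$-th Taylor coefficient in (114) is extracted with Cauchy's integral formula sampled at $K$ points on the unit circle. This is a discrete Fourier sum, swapped in here for the $N$-fold derivative and equivalent to it for a polynomial of degree below $K$.

```python
import cmath
import math

# Hypothetical lattice gas: M sites, one particle of energy eps per occupied site,
# so the N-particle canonical partition function is Z_N = C(M, N) exp(-beta eps N).
M, eps, beta, mu = 8, 1.0, 1.5, 0.4
z = math.exp(beta * mu)                                   # fugacity

canonical = [math.comb(M, n) * math.exp(-beta * eps * n) for n in range(M + 1)]
grand_from_sum = sum(z**n * z_n for n, z_n in enumerate(canonical))   # eq. (113)

def grand_z(fugacity):
    """Closed form of the sum over N in (113) for this model; accepts complex fugacity."""
    return (1.0 + fugacity * math.exp(-beta * eps)) ** M

# Extract Z_N as the N-th Taylor coefficient in z, which is what (114) does.
# Cauchy's formula on the unit circle, sampled at K points, is exact here because
# grand_z is a polynomial of degree M < K.
K = 64
recovered = []
for n in range(M + 1):
    total = sum(grand_z(cmath.exp(2j * math.pi * k / K)) * cmath.exp(-2j * math.pi * k * n / K)
                for k in range(K))
    recovered.append((total / K).real)

print(grand_from_sum, grand_z(z))
```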

The Laplace transformation in equation (120) has a form very similar to a Fourier transformation, for which we know that an inverse transformation exists. The inverse Laplace transformation would allow us to solve for the density of states $g(E)$ in terms of the canonical partition function $Z(\beta)$. To see how the inverse transformation works, we multiply both sides of equation (120) by $e^{\beta E'}$ and integrate over a contour $C$ in the complex $\beta$ plane,

| (121) |

Choosing the contour so that , the integrand of the right hand side is an analytic function and we can exchange the order of integration to write. Moreover, if we choose to be the contour with a fixed positive real number and we have that . The right hand side simplifies after writing

| (122) |

In the third line we used the identity

| (123) |

We can therefore write

| (124) |

which is independent of as long as as .
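The inversion formula (124) can be checked numerically. The sketch below is a minimal illustration, assuming a partition function known in closed form. The function names and the test case are our own choices and are not part of the notes. We use a classical three-dimensional harmonic oscillator in units where $\hbar\omega = 1$, for which $Z(\beta) = \beta^{-3}$; the inverse transform should then return $g(E) = E^2/2$ for $E > 0$ and zero for $E < 0$. The $\tau$-integral along $\beta = \beta' + i\tau$ is truncated and evaluated with the trapezoidal rule.

```python
import numpy as np

def bromwich_inverse(Z, E, beta_prime=1.0, tau_max=200.0, n=400_001):
    """Approximate g(E) = (1/2 pi i) * integral of Z(beta) e^{beta E} along beta = beta' + i tau.

    With d(beta) = i d(tau) the prefactor becomes 1/(2 pi). The tau-integral is
    truncated to [-tau_max, tau_max] and evaluated with the trapezoidal rule.
    """
    tau = np.linspace(-tau_max, tau_max, n)
    beta = beta_prime + 1j * tau
    integrand = Z(beta) * np.exp(beta * E)
    h = tau[1] - tau[0]
    integral = h * (integrand.sum() - 0.5 * (integrand[0] + integrand[-1]))
    return (integral / (2.0 * np.pi)).real

def z_oscillator(beta):
    """Classical 3D harmonic oscillator with hbar*omega = 1: Z = beta^{-3}."""
    return beta ** -3

for E in (-1.0, 0.5, 1.0, 2.0):
    print(E, bromwich_inverse(z_oscillator, E), max(E, 0.0) ** 2 / 2)
```

Changing `beta_prime` to any other positive value should leave the output unchanged up to truncation error. This is the $\beta'$-independence stated below (124).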

2 Classical Gases

In this section we will study a class of simple classical systems, the classical gases. A gas consists of a set of $N$ particles inside a finite volume $V$ which are either non-interacting or weakly interacting. In the non-interacting case, the total Hamiltonian can be written as

$$H = \sum_{i=1}^{N} H_1(\vec q_i, \vec p_i), \tag{125}$$

where $H_1$ is the single particle Hamiltonian and $\vec q_i$, $\vec p_i$ are vectors of conjugate variables parametrising the degrees of freedom of a single particle. For example, as we will see in a later section, for a diatomic gas they can parametrise the position of the center of mass of the molecule, the rotations of the atoms around the center of mass and the oscillatory modes of the atoms around their relative equilibrium position.

One of the interesting aspects of this section is that, if we follow the rules of classical mechanics, we will have to confront a number of puzzles which are naturally resolved in quantum mechanics:

• What is the fundamental origin of the “funny” $h$ we need to divide by every time we integrate over a conjugate pair of variables? This question has already shown up several times.

• Distinguishability of identical classical particles and the extensivity of the Gibbs entropy do not go together. This will lead us to the Gibbs paradox.

• The classical prediction for the specific heat of dilute gases qualitatively disagrees with experimental measurements.

Regarding the first bullet point, the constant $h$ drops out of most quantities we might want to compute through the partition function. This happens because we always consider the logarithm of the partition function in order to compute the free energy $F = -k_BT\ln Z$. Since $h$ only appears as a constant factor in the denominator of the partition function, it contributes an additive constant to $\ln Z$. This $h$-dependent term drops out when we take derivatives with respect to $T$ or $V$. However, some quantities depend directly on $\ln Z$, including the free energy itself and the entropy. Therefore changing the value of $h$ would actually change the entropy, which has a definite physical meaning. As we will see when we study Quantum Gases, $h$ has a very specific value which cannot be fixed by the classical theory itself.

The second bullet point is perhaps the most striking of all, setting aside the disagreement with experiment. It has its roots at a very fundamental level, in how classical identical particles work. The core of the problem is how we count inequivalent configurations of identical particles. For example, consider the case of two identical classical free particles moving in one dimension. We can label the microstates of this system by the positions $q_1, q_2$ and the conjugate momenta $p_1, p_2$. Classically, two configurations related by the simultaneous permutation of positions and momenta, $(q_1,p_1) \leftrightarrow (q_2,p_2)$, are different microstates, since classical particles are distinguishable. This is exactly how we obtained the classical partition function (21), after summing over all inequivalent microstates. However, as we will see, this is incompatible with the properties of thermodynamic entropy and with how these should come out of the Gibbs entropy we defined in subsection 1.1.5. In a later section we will see that the correct answer comes from treating identical particles as indistinguishable, i.e. by identifying microstates which are related by permutations of particles. This leads us to consider the partition function of indistinguishable classical particles,

$$Z = \frac{1}{N!}\,\frac{1}{h^{3N}}\int d^{3N}q\, d^{3N}p\; e^{-\beta H(q,p)}. \tag{126}$$

2.1 The Ideal Gas

We have already seen the thermodynamic description of an ideal gas in the Michaelmas term. Here, we will derive its equation of state starting from a microscopic description. From a microscopic point of view, the ideal gas is nothing but a system of $N$ non-interacting particles of mass $m$ confined inside a volume $V$. The single particle Hamiltonian is simply

$$H_1 = \frac{\vec p^{\,2}}{2m}, \tag{127}$$

yielding the $N$-body Hamiltonian

$$H = \sum_{i=1}^{N}\frac{\vec p_i^{\,2}}{2m}. \tag{128}$$

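The single particle partition function is then

$$Z_1 = \frac{1}{h^3}\int_V d^3q \int d^3p\; e^{-\beta \vec p^{\,2}/2m} = \frac{V}{h^3}\left(\frac{2\pi m}{\beta}\right)^{3/2} = \frac{V}{\lambda^3}, \tag{129}$$

where we defined the de Broglie wavelength

$$\lambda = \sqrt{\frac{2\pi\hbar^2}{m k_B T}} = \frac{h}{\sqrt{2\pi m k_B T}}. \tag{130}$$

Notice that the above formula for the partition function has the heuristic interpretation of the number of boxes of side $\lambda$ that fit inside our volume $V$.

From the way we computed the single particle partition function, it should be fairly obvious that for a particle in $d$ dimensions the partition function would be

$$Z_1 = \frac{V}{\lambda^d}, \tag{131}$$

with $V$ now the $d$-dimensional volume.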

This will be a useful result later on when we discuss the energy equipartition theorem. A couple of remarks we would like to make are:

• The de Broglie wavelength $\lambda$ is a characteristic length scale for a particle of mass $m$ at temperature $T$.

• The partition functions we have computed contain factors of $h$, which is a quantum mechanical quantity. However, we used classical physics to derive our expressions. We should therefore be worried about the validity of any quantity we compute from the partition function that still depends on $h$.

After the above comments we can write the partition function for the $N$-body problem,

$$Z = \frac{Z_1^N}{\mathcal{N}} = \frac{1}{\mathcal{N}}\frac{V^N}{\lambda^{3N}}. \tag{132}$$

Here we have introduced the factor $\mathcal{N}$, which is equal to $1$ for distinguishable particles and to $N!$ for indistinguishable particles. The point is that it will drop out of the final expressions we are interested in in this section.

The free energy can be computed simply by using $F = -k_BT\ln Z$, equation (1.1.8), to give

$$F = -N k_B T \ln\frac{V}{\lambda^3} + k_B T\ln\mathcal{N}. \tag{133}$$

From the above, we can now use equation (70) to compute the pressure,

$$p = -\left(\frac{\partial F}{\partial V}\right)_{T,N} = \frac{N k_B T}{V}, \tag{134}$$

which is precisely the equation of state we are after,

$$pV = N k_B T. \tag{135}$$

This is certainly a result that we can trust, since $h$ drops out of the final expression. Moreover, observe that the statistics of our particles does not alter this result, since the factor $\mathcal{N}$ drops out.
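To make the statement about $h$ concrete, here is a small numerical sketch. It is our own illustration, using arbitrarily chosen parameters with a helium-like mass. The code evaluates $F = -k_BT\ln Z$ from (132) and differentiates it by central finite differences. The pressure comes out equal to $Nk_BT/V$ whatever value we use for $h$. The entropy, by contrast, shifts by $-3Nk_B\ln 2$ when $h$ is doubled.

```python
import math

K_B = 1.380649e-23         # Boltzmann constant, J/K
H_PLANCK = 6.62607015e-34  # Planck constant, J s

def ln_Z(N, V, T, m, h=H_PLANCK, indistinguishable=True):
    """ln Z for the classical ideal gas, Z = V^N / (calN * lambda^(3N)), eq. (132)."""
    lam = h / math.sqrt(2.0 * math.pi * m * K_B * T)            # de Broglie wavelength (130)
    ln_calN = math.lgamma(N + 1) if indistinguishable else 0.0  # ln N! or ln 1
    return N * math.log(V / lam**3) - ln_calN

def free_energy(N, V, T, m, h=H_PLANCK):
    return -K_B * T * ln_Z(N, V, T, m, h)                        # F = -k_B T ln Z

def pressure(N, V, T, m, h=H_PLANCK):
    dV = 1e-6 * V                                                # central difference for -dF/dV
    return -(free_energy(N, V + dV, T, m, h) - free_energy(N, V - dV, T, m, h)) / (2 * dV)

def entropy(N, V, T, m, h=H_PLANCK):
    dT = 1e-6 * T                                                # central difference for -dF/dT
    return -(free_energy(N, V, T + dT, m, h) - free_energy(N, V, T - dT, m, h)) / (2 * dT)

N, V, T, m = 1000, 1e-6, 300.0, 6.6464731e-27                    # illustrative values
print(pressure(N, V, T, m) / (N * K_B * T / V))                  # ratio to N k_B T / V
print(pressure(N, V, T, m, 2 * H_PLANCK) / (N * K_B * T / V))    # same ratio with h doubled
print(entropy(N, V, T, m, 2 * H_PLANCK) - entropy(N, V, T, m))   # entropy shift from doubling h
```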

2.2 Energy and the Equipartition Theorem

Before we state the equipartition theorem in its full generality, it is useful to compute the average energy of the ideal gas. We do this by combining $E = -\partial\ln Z/\partial\beta$, equation (1.1.4), with (132). A simple computation gives

$$E = -\frac{\partial}{\partial\beta}\left[N\ln V - \frac{3N}{2}\ln\frac{\beta h^2}{2\pi m} - \ln\mathcal{N}\right] = \frac{3N}{2\beta} = \frac{3}{2}N k_B T. \tag{136}$$

This is another quantity from which $h$ drops out, and we expect to trust it in the regime where our original Hamiltonian is correct. The same is true for the statistics of our particles, as the factor $\mathcal{N}$ does not appear in the final result. From the above, it appears as if each of the $3N$ degrees of freedom of the ideal gas contributes $\frac12 k_BT$ to the average energy. This observation for the ideal gas is a special case of a much more general theorem.

The equipartition theorem is a powerful result which states that, in thermal equilibrium, every canonical variable (such as a position or momentum) which appears only quadratically in the Hamiltonian contributes $\frac12 k_BT$ to the average energy, and therefore $\frac12 k_B$ to the heat capacity of the system.

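In order to prove this we consider a system of particles with $D$ degrees of freedom. The corresponding canonical pairs of variables are $(q_a, p_a)$ with $a = 1,\dots,D$. Suppose that $M$ out of these $2D$ canonical variables are quadratic, i.e. they appear in the Hamiltonian only quadratically. This naturally splits our canonical variables into two groups: the quadratic ones, $\xi_i$ with $i = 1,\dots,M$, and the non-quadratic ones, $x_j$ with $j = 1,\dots,2D-M$. This allows us to write the Hamiltonian in the form

$$H = \sum_{i=1}^{M} A_i(x)\,\xi_i^2 + \tilde H(x), \tag{137}$$

with $A_i(x)$ positive functions of the $x_j$, and $\tilde H(x)$ representing the non-quadratic part of the Hamiltonian.

The partition function can now be written as

$$Z = \frac{1}{h^D}\int \prod_{j} dx_j\; e^{-\beta\tilde H(x)} \prod_{i=1}^{M}\int_{-\infty}^{\infty} d\xi_i\, e^{-\beta A_i(x)\xi_i^2} = \frac{1}{h^D}\int \prod_{j} dx_j\; e^{-\beta\tilde H(x)} \prod_{i=1}^{M}\sqrt{\frac{\pi}{\beta A_i(x)}} = \beta^{-M/2}\,\tilde Z(\beta). \tag{138}$$

The factor $\tilde Z(\beta)$ encodes all the dependence of the partition function on the non-quadratic variables. The average energy can now be computed by using equation (1.1.4) to find

$$E = -\frac{\partial \ln Z}{\partial\beta} = \frac{M}{2\beta} - \frac{\partial\ln\tilde Z}{\partial\beta} = \frac{M}{2}k_BT - \frac{\partial\ln\tilde Z}{\partial\beta}. \tag{139}$$

The above shows precisely that each of the $M$ quadratic degrees of freedom contributes $\frac12 k_BT$ to the average energy of the system.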
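A quick numerical check of equipartition follows. The sketch below is an illustration we add, not part of the notes. It computes $\langle H\rangle$ by direct quadrature for a single variable with $H = c\,|x|^s$. For $s = 2$ the result is $\frac12 k_BT$ regardless of the coefficient $c$, as the theorem states. A scaling argument like the one in (138) gives $k_BT/s$ for a general power $s$. We include $s = 4$ only to show that a non-quadratic variable does not contribute $\frac12 k_BT$.

```python
import numpy as np

def mean_energy(c, s, kT, n=200_001):
    """<H> for one canonical variable with H(x) = c |x|**s, by direct quadrature.

    The grid is uniform, so the spacing cancels in the ratio of the two sums;
    the cutoff x_max is chosen so that exp(-H/kT) = exp(-40) at the edges.
    """
    x_max = (40.0 * kT / c) ** (1.0 / s)
    x = np.linspace(-x_max, x_max, n)
    H = c * np.abs(x) ** s
    w = np.exp(-H / kT)                       # Boltzmann weight
    return float(np.sum(H * w) / np.sum(w))

for c in (0.1, 1.0, 25.0):
    print(c, mean_energy(c, 2, kT=1.0))      # quadratic: k_B T / 2 for every c
print(mean_energy(1.0, 4, kT=1.0))            # quartic: k_B T / 4 instead
```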

2.3 The Gibbs Paradox and Indistinguishability

In this section we will see that the rules of classical physics lead to an incompatibility between thermodynamics and the thermodynamic limit of statistical mechanics. In order to see this, we will consider the simplest system possible, the ideal gas. For the time being, we will consider a system of $N$ distinguishable identical gas particles of mass $m$ inside a volume $V$. As we saw in section 2.1, the partition function is given by (132) with $\mathcal{N} = 1$. Combining this with the Gibbs entropy of subsection 1.1.5, we obtain

$$S = N k_B\left[\ln\frac{V}{\lambda^3} + \frac{3}{2}\right], \tag{140}$$

with $\lambda$ as defined in equation (130). This expression for the entropy is not compatible with the extensivity we would expect to see. Indeed, for any dimensionless constant $\alpha \neq 1$ we have

$$S(\alpha N, \alpha V) = \alpha\, S(N, V) + \alpha N k_B\ln\alpha \neq \alpha\, S(N,V). \tag{141}$$

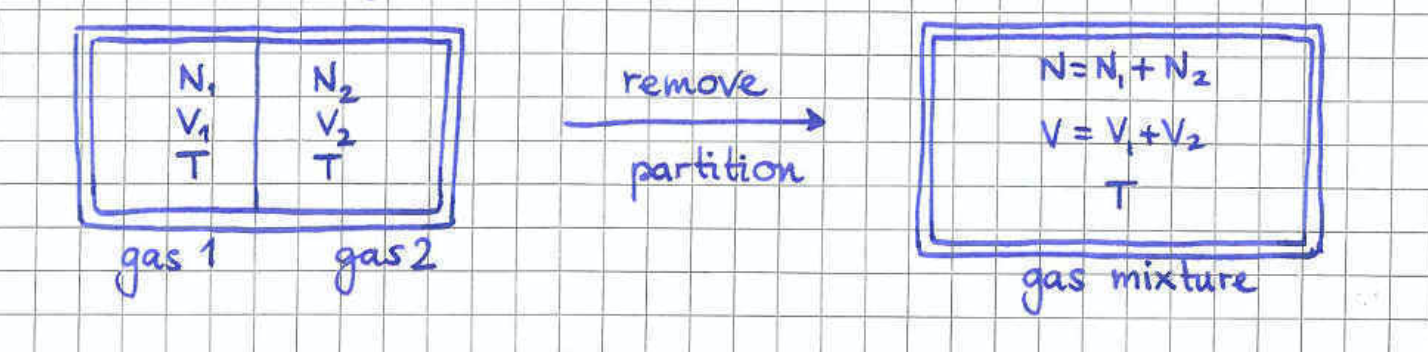

The fact that we find a non-extensive entropy even for such a simple system is rather worrying but also very telling. It simply means that we have done something wrong at a very fundamental level. The issue becomes even sharper when we consider the mixing of two gases. The scenario we have in mind is depicted in figure 4. Two gases held at the same pressure and temperature are initially separated by a wall, which is then removed, resulting in the mixing of the two gases. In the following we will examine two different cases, depending on whether the gas particles are identical or not.

• The case of non-identical gas particles

For simplicity, we can assume that the particles of the two different gases have the same mass but different colour, e.g. red for the left chamber and green for the right one. In this case, as soon as we remove the wall the two gases will mix and there is no way we can bring the system back to its original state. We would not be able to put the wall back and expect all the red particles to be on the left and all the green ones on the right. This is therefore an irreversible process, and from basic thermodynamics the total entropy of the system has to increase. This implies that the mixing entropy

$$\Delta S_{\text{mix}} = S_{\text{final}} - S_{\text{initial}} \tag{142}$$

will be a strictly positive number. We see that the mixing of non-identical, and therefore distinguishable, particles necessarily leads to a positive mixing entropy.

• The case of identical gas particles

We now examine the case where the two gases are composed of identical atoms. Since the two gases before mixing are at the same pressure $p$ and temperature $T$, they will also have the same density $N_1/V_1 = N_2/V_2$. This implies that after removing the wall, the mixture will retain the same density. It is then clear that if we put the wall back in its original position, the system will go back to its original thermodynamic state. That state consists of two gases with particle numbers $N_1$ and $N_2$, inside volumes $V_1$ and $V_2$, held at temperature $T$. However, from the microscopic point of view, some of the particles which were originally inside the left/right chamber have now relocated to the right/left chamber. From the point of view of classical mechanics this is a very different state from the original configuration. Nevertheless, the above argument shows that the removal of the wall is a thermodynamically reversible process and therefore the mixing entropy should be zero.

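Let us for a moment pretend that the expression (140) for the ideal gas entropy is correct. This allows us to compute the initial entropy

$$S_{\text{initial}} = N_1 k_B\left[\ln\frac{V_1}{\lambda^3} + \frac32\right] + N_2 k_B\left[\ln\frac{V_2}{\lambda^3} + \frac32\right] \tag{143}$$

and the final entropy

$$S_{\text{final}} = (N_1+N_2)\, k_B\left[\ln\frac{V_1+V_2}{\lambda^3} + \frac32\right]. \tag{144}$$

The above yield the mixing entropy

$$\Delta S_{\text{mix}} = N_1 k_B\ln\frac{V_1+V_2}{V_1} + N_2 k_B\ln\frac{V_1+V_2}{V_2}, \tag{145}$$

which is clearly a positive number, contrary to our thermodynamic argument. This is a good point to link the paradox to the non-extensivity of the entropy formula (140). The mixing entropy is

$$\Delta S_{\text{mix}} = S(N_1+N_2,\, V_1+V_2) - S(N_1, V_1) - S(N_2, V_2), \tag{146}$$

which would be zero if $S$ were an extensive function of $N$ and $V$ (recall that $N_1/V_1 = N_2/V_2$).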

This mismatch between the Gibbs and the thermodynamic entropy is known as the Gibbs Paradox. Gibbs proposed that the paradox is resolved if classical microstates related by permutations of identical particles are treated as the same microstate. Therefore, identical classical particles should be treated as indistinguishable.
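The effect of identifying permuted microstates can be seen numerically. The sketch below is our own illustration, in units where $\lambda = 1$ and $k_B = 1$. It evaluates the entropy $S/k_B = N\big[\ln(V/\lambda^3) + \frac32\big] - \ln\mathcal{N}$ that follows from (132), with either $\mathcal{N} = 1$ or $\mathcal{N} = N!$. It then computes the mixing entropy (146) for two equal halves at equal density. Without the $N!$ the mixing entropy per particle is $\ln 2$ for every $N$. With it, the mixing entropy per particle goes to zero as $N$ grows.

```python
import math

def entropy_over_kB(N, V, indistinguishable):
    """S / k_B for the classical ideal gas at fixed T, in units with lambda = 1.

    S / k_B = N [ln V + 3/2] - ln(calN), with calN = N! (via lgamma) or 1.
    """
    s = N * (math.log(V) + 1.5)
    if indistinguishable:
        s -= math.lgamma(N + 1)
    return s

def mixing_entropy_per_particle(N, V, indistinguishable):
    """Equation (146) for two halves (N/2, V/2) at equal density, divided by N."""
    initial = 2 * entropy_over_kB(N / 2, V / 2, indistinguishable)
    final = entropy_over_kB(N, V, indistinguishable)
    return (final - initial) / N

for N in (1e2, 1e4, 1e6):
    V = 1e3 * N   # the same (dilute) density in every case
    print(int(N),
          mixing_entropy_per_particle(N, V, indistinguishable=False),
          mixing_entropy_per_particle(N, V, indistinguishable=True))
```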

In more practical terms, whenever we need to count or sum over microstates, we can take all of the classically inequivalent microstates into account and then divide by $N!$, the number of permutations of $N$ objects. This is essentially the rule we wrote in equation (126). In the case of non-interacting identical particles, for the $N$-particle partition function we can then write

$$Z_N = \frac{Z_1^N}{N!}, \tag{147}$$

where $Z_1$ is the single particle partition function. More generally, we have

$$Z = \frac{1}{N!\, h^{D}}\int_{\Gamma} d^{D}q\, d^{D}p\; e^{-\beta H(q,p)}, \tag{148}$$

where $D$ is the total number of degrees of freedom in the system and $\Gamma$ is the full classical phase space.

Here, we need to raise a point of caution about the validity of the “$1/N!$” prescription. It works only for indistinguishable particles with a continuous set of microstates. To illustrate the issue, let us consider a system of just two particles, each of which is described by one position coordinate $q_i$ and its conjugate momentum $p_i$. The “$1/N!$” prescription correctly identifies configurations with $(q_1,p_1) = (a,b)$, $(q_2,p_2) = (c,d)$ with configurations with $(q_1,p_1) = (c,d)$, $(q_2,p_2) = (a,b)$. However, this division is not necessary for microstates which have $q_1 = q_2$ and $p_1 = p_2$, since these are already mapped to themselves by the permutation. The point here is that the permutation-invariant configurations live on the hypersurface $q_1 = q_2$, $p_1 = p_2$. This hypersurface is a set of measure zero, so it does not give a finite contribution to, e.g., the partition function.
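The caveat above can be illustrated with a toy model that is not in the notes: two particles, each occupying one of $M$ discrete single-particle states. The sketch counts the labelled configurations ($M^2$), applies the naive $1/2!$ rule ($M^2/2$), and counts the genuinely distinct unordered configurations ($M(M+1)/2$). The naive rule gives the $M$ "diagonal" configurations, where both particles sit in the same state, a weight of $1/2$ instead of $1$. The fraction of such configurations shrinks as $M$ grows, which is the discrete analogue of the measure-zero hypersurface.

```python
from itertools import product

def two_particle_counts(M):
    """Count configurations of two particles in M discrete single-particle states."""
    labelled = list(product(range(M), repeat=2))           # (state of 1, state of 2)
    naive = len(labelled) / 2                              # divide every count by 2!
    distinct = len({tuple(sorted(c)) for c in labelled})   # identify permutations exactly
    diagonal = sum(1 for a, b in labelled if a == b)       # permutation-invariant states
    return len(labelled), naive, distinct, diagonal

for M in (2, 10, 300):
    labelled, naive, distinct, diagonal = two_particle_counts(M)
    print(M, labelled, naive, distinct, naive / distinct, diagonal / labelled)
```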

2.4 Non-interacting Indistinguishable Particles in the Grand Canonical Ensemble

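In this section we will consider a system of non-interacting indistinguishable particles in the grand canonical ensemble, where we keep $T$, $V$ and $\mu$ fixed. As we discussed in sections 1.2.2 and 1.2.3, the important quantities are the grand canonical partition function $\mathcal{Z}$ and the grand canonical potential $\Phi$.

Since there are no interactions between our indistinguishable particles, for the $N$-particle canonical partition function we can write

$$Z(T,V,N) = \frac{Z_1^N}{N!}, \tag{149}$$

where $Z_1$ is the single particle partition function. Using this equation along with the relation (113) between the canonical and the grand canonical partition functions, we obtain

$$\mathcal{Z} = \sum_{N=0}^{\infty} z^N\frac{Z_1^N}{N!} = \exp\left(z Z_1\right) = \exp\left(e^{\beta\mu} Z_1\right). \tag{150}$$

According to equation (99), the grand canonical potential is

$$\Phi = -k_BT\ln\mathcal{Z} = -k_BT\, e^{\beta\mu} Z_1. \tag{151}$$

We can now use the relation $\langle N\rangle = -\partial\Phi/\partial\mu$, equation (1.2.4), to compute the average particle number,

$$\langle N\rangle = -\frac{\partial\Phi}{\partial\mu} = e^{\beta\mu} Z_1. \tag{152}$$

We now consider the thermodynamic limit, where fluctuations of the particle number are negligible and we can identify $\langle N\rangle$ with $N$. This gives an expression for the chemical potential in terms of the particle number, temperature and volume,

$$\mu = k_BT\ln\frac{N}{Z_1}. \tag{153}$$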
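Equations (150) to (152) can be sanity-checked numerically. The sketch below is our own illustration, with arbitrary dimensionless values of $z$ and $Z_1$. It sums the series $\sum_N z^N Z_1^N/N!$ term by term and compares it with $e^{zZ_1}$. It then obtains $\langle N\rangle = z\,\partial\ln\mathcal{Z}/\partial z$ by a finite difference in $\ln z$, using the fact that $z\,\partial/\partial z = k_BT\,\partial/\partial\mu$ at fixed $T$.

```python
import math

def grand_partition_series(z, Z1, n_max=200):
    """Truncated sum over N of z^N Z1^N / N!, i.e. equation (150) before resumming."""
    log_zZ1 = math.log(z * Z1)
    return sum(math.exp(N * log_zZ1 - math.lgamma(N + 1)) for N in range(n_max + 1))

def mean_particle_number(z, Z1, eps=1e-6):
    """<N> = z d(ln calZ)/dz, computed as a central difference in ln z."""
    up = math.log(grand_partition_series(z * (1 + eps), Z1))
    down = math.log(grand_partition_series(z * (1 - eps), Z1))
    return (up - down) / (math.log(1 + eps) - math.log(1 - eps))

z, Z1 = 0.2, 50.0
print(grand_partition_series(z, Z1) / math.exp(z * Z1))   # series vs resummed exponential
print(mean_particle_number(z, Z1), z * Z1)                # <N> vs z Z1
```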

The previous result is cute, but in order to learn something more about the physics we are going to consider the case of the ideal gas. In that case the single particle partition function is $Z_1 = V/\lambda^3$, given in equation (129), with the de Broglie wavelength defined as in (130). Combining these equations with our previous expression for the chemical potential, we obtain

$$\mu = k_BT\ln\left(\frac{N\lambda^3}{V}\right). \tag{154}$$

Equation (154) shows that the chemical potential is negative whenever $\lambda^3 < V/N$. This coincides with the classical regime, where the de Broglie wavelength is much smaller than the side $(V/N)^{1/3}$ of the cubic space available to each particle. In the opposite case quantum effects start becoming important and the classical description breaks down.
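To see how deep in the classical regime an ordinary gas sits, the following sketch evaluates $\lambda$, $N\lambda^3/V$ and $\mu/k_BT$ from (154). It is our own illustration for helium-4 at room temperature and atmospheric pressure, with an approximate atomic mass and with the number density taken from the ideal gas law (135).

```python
import math

H_PLANCK = 6.62607015e-34   # J s
K_B = 1.380649e-23          # J/K
M_HE = 6.6464731e-27        # kg, approximate mass of a helium-4 atom

def de_broglie_wavelength(m, T):
    """Equation (130): lambda = h / sqrt(2 pi m k_B T)."""
    return H_PLANCK / math.sqrt(2.0 * math.pi * m * K_B * T)

def chemical_potential(n, m, T):
    """Equation (154) with n = N/V: mu = k_B T ln(n lambda^3)."""
    return K_B * T * math.log(n * de_broglie_wavelength(m, T) ** 3)

T, p = 300.0, 101325.0
n = p / (K_B * T)                                  # number density from p V = N k_B T
lam = de_broglie_wavelength(M_HE, T)
print("lambda          =", lam, "m")
print("(V/N)^(1/3)     =", n ** (-1.0 / 3.0), "m")
print("N lambda^3 / V  =", n * lam**3)
print("mu / (k_B T)    =", chemical_potential(n, M_HE, T) / (K_B * T))
```

With these inputs $\lambda$ is about half an ångström, while the interparticle spacing is a few nanometres. As a result $N\lambda^3/V$ is of order $10^{-6}$ and $\mu \approx -12.7\,k_BT$.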

At first sight, a negative chemical potential might sound counter-intuitive, since the chemical potential is the change in energy when adding a particle, $\mu = (\partial E/\partial N)_{S,V}$. However, this change is meant to happen at fixed volume and entropy. A negative chemical potential then means that we need to decrease the energy of the system in order for the entropy to remain unchanged after the increase in the particle number.

2.5 The Maxwell Distribution

The Maxwell distribution gives the probability density for a gas atom to be found with speed $v$ when the temperature $T$ and volume $V$ are kept fixed. In order to derive it for the ideal gas, we can start from the Boltzmann distribution of equation (20).

Suppose that we are interested in the speed probability distribution of the $i$-th particle in our system, with position $\vec q_i$ and momentum $\vec p_i$. The first step is to obtain a probability density for the momentum of that specific particle. We do this by integrating over its position as well as over the positions and momenta of the rest of the particles in the system. This procedure gives

$$\rho(\vec p_i) = \frac{V}{h^3 Z_1}\, e^{-\beta\vec p_i^{\,2}/2m} = \left(\frac{1}{2\pi m k_BT}\right)^{3/2} e^{-\vec p_i^{\,2}/2mk_BT}, \tag{155}$$

where the factor of $V$ comes from integrating over the coordinates of our particle. The extra factor of $1/h^3$ comes from the fact that we are interested in a density which is supposed to be integrated over momenta. One can see that this is dimensionally sensible, since $\rho(\vec p_i)$ has units of $(\text{momentum})^{-3}$. The next step is to obtain the probability density $\tilde\rho(\vec v)$ for the velocity by using the relation $\vec p = m\vec v$. We start from the infinitesimal probability in momentum, obtained by multiplying the probability density by the momentum space volume element, and find

$$\rho(\vec p)\, d^3p = m^3\rho(m\vec v)\, d^3v \equiv \tilde\rho(\vec v)\, d^3v. \tag{156}$$

From the above we can read off the relation

$$\tilde\rho(\vec v) = m^3\rho(m\vec v) = \left(\frac{m}{2\pi k_BT}\right)^{3/2} e^{-m\vec v^{\,2}/2k_BT}. \tag{157}$$

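The final step is to use the velocity probability distribution in order to derive the Maxwell distribution $f(v)$, which is a density in the velocity norm $v = |\vec v|$,

$$\int_{v_1}^{v_2} dv\, f(v) = P\big(v_1 < |\vec v| < v_2\big). \tag{158}$$

In order to do this, we move from Cartesian to spherical coordinates. These parametrise the norm $v\in[0,\infty)$ and the direction of $\vec v$ with $\theta\in[0,\pi]$ and $\phi\in[0,2\pi)$. Doing so we obtain the infinitesimal probabilities

$$\tilde\rho(\vec v)\, d^3v = \tilde\rho(v)\, v^2\sin\theta\, dv\, d\theta\, d\phi \quad\Longrightarrow\quad f(v)\, dv = \int_0^{\pi}\!d\theta\int_0^{2\pi}\!d\phi\; \tilde\rho(v)\, v^2\sin\theta\, dv = 4\pi v^2\tilde\rho(v)\, dv, \tag{159}$$

where we used the fact that $\tilde\rho$ only depends on the norm of the velocity vector. The above shows that the Maxwell distribution we are after is

$$f(v) = 4\pi\left(\frac{m}{2\pi k_BT}\right)^{3/2} v^2\, e^{-mv^2/2k_BT}, \tag{160}$$

which can be checked to be correctly normalised according to

$$\int_0^\infty dv\, f(v) = 1. \tag{161}$$

Moreover, we can compute

$$\langle v^2\rangle = \int_0^\infty dv\, v^2 f(v) = \frac{3k_BT}{m}, \tag{162}$$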

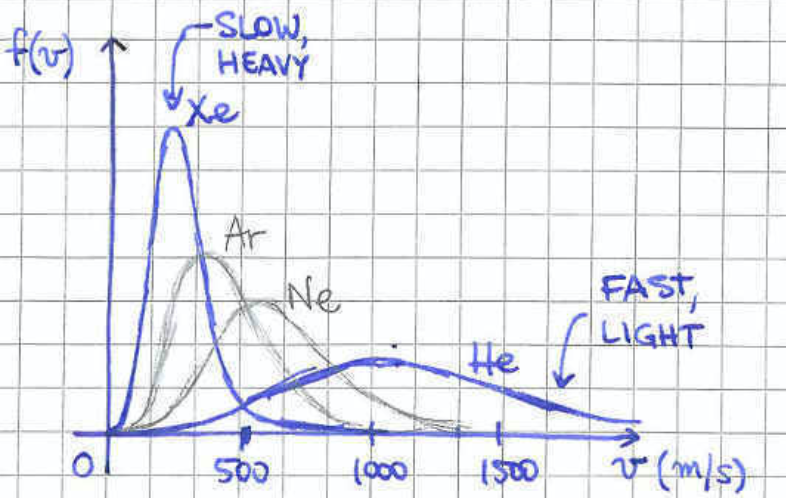

allowing us to compute the average energy $\langle E\rangle = \frac12 m\langle v^2\rangle = \frac32 k_BT$, in agreement with the results of section 2.2. As we can see, the average energy is fixed solely by the temperature, independently of the mass of the particle. This is compatible with the form of the distribution (160). For fixed temperature, it implies that heavier particles are more likely to have lower speeds than lighter particles. Plotting the distribution (160) for different masses at a given temperature gives the picture of figure 5.
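The normalisation (161), the second moment (162) and the mass dependence described above can be checked numerically. In this sketch, which is our own illustration, the helium-4 and argon masses are approximate values chosen for the example. We evaluate $f(v)$ from (160) on a grid and integrate with a simple Riemann sum. This is accurate here because $f$ vanishes at both ends of the grid. The peak of $f$ should sit at $v = \sqrt{2k_BT/m}$.

```python
import numpy as np

K_B = 1.380649e-23   # J/K

def maxwell_pdf(v, m, T):
    """Maxwell speed distribution, equation (160)."""
    a = m / (2.0 * K_B * T)
    return 4.0 * np.pi * (a / np.pi) ** 1.5 * v**2 * np.exp(-a * v**2)

def speed_statistics(m, T, n=200_001):
    """Return (normalisation, <v^2>, most probable speed) from a grid in v."""
    v_max = 12.0 * np.sqrt(2.0 * K_B * T / m)   # f(v_max) ~ exp(-144), negligible
    v = np.linspace(0.0, v_max, n)
    f = maxwell_pdf(v, m, T)
    dv = v[1] - v[0]
    return np.sum(f) * dv, np.sum(v**2 * f) * dv, v[np.argmax(f)]

T = 300.0
for name, m in (("He", 6.6464731e-27), ("Ar", 6.63e-26)):
    norm, v2, v_peak = speed_statistics(m, T)
    # normalisation, <m v^2 / 2> in units of k_B T, numerical and analytic peak speed
    print(name, norm, 0.5 * m * v2 / (K_B * T), v_peak, np.sqrt(2 * K_B * T / m))
```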

We have derived the Maxwell distribution from first principles, starting from the ideal gas. However, when Maxwell derived it, statistical mechanics had not yet been developed. He only relied on:

• Rotational symmetry

• Statistical independence of the velocity components $v_x$, $v_y$ and $v_z$

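Rotational symmetry, or more precisely isotropy, implies that the probability density for each velocity component is given by the same function $\varphi$. Statistical independence then implies that the probability to find the particle with velocity in the box $[v_x, v_x+dv_x]\times[v_y, v_y+dv_y]\times[v_z, v_z+dv_z]$ is

$$\tilde\rho(\vec v)\, d^3v = \varphi(v_x)\,\varphi(v_y)\,\varphi(v_z)\, dv_x\, dv_y\, dv_z. \tag{163}$$

Going once again to spherical coordinates, isotropy means that we should be able to write

$$\tilde\rho(\vec v)\, d^3v = F(v)\, v^2\sin\theta\, dv\, d\theta\, d\phi, \tag{164}$$

where $v$ is the norm of $\vec v$. Equating the two expressions above and transforming the volume element, we obtain the constraint

$$\varphi(v_x)\,\varphi(v_y)\,\varphi(v_z) = F\!\left(\sqrt{v_x^2+v_y^2+v_z^2}\right). \tag{165}$$

For a normalisable $\varphi$, the above constraint can only be satisfied by a function of the form

$$\varphi(v_x) = A\, e^{-B v_x^2}, \qquad B > 0. \tag{166}$$

The speed probability density is then obtained after integrating over the angular coordinates $\theta$ and $\phi$,

$$f(v) = 4\pi A^3\, v^2\, e^{-Bv^2}. \tag{167}$$

This expression has the same functional dependence on $v$ as the function we derived in equation (160). We are left with two unknown constants, $A$ and $B$, which can be fixed by imposing the following two constraints: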

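• Normalisation: $\int_0^\infty dv\, f(v) = 1$.

• The average kinetic energy: $\langle \frac12 m v^2\rangle = \frac32 k_BT$.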
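For completeness, here is how the two constants in (167) are fixed. We assume, as the natural choices, normalisation of $f(v)$ and an average kinetic energy $\langle\frac12 mv^2\rangle = \frac32 k_BT$, the result of section 2.2. We write the single-component density as $\varphi(v_x) = Ae^{-Bv_x^2}$ with $B > 0$, so that $f(v) = 4\pi A^3 v^2 e^{-Bv^2}$. The standard Gaussian integrals $\int_0^\infty v^2e^{-Bv^2}dv = \sqrt{\pi}/(4B^{3/2})$ and $\int_0^\infty v^4e^{-Bv^2}dv = 3\sqrt{\pi}/(8B^{5/2})$ then give:

```latex
\begin{aligned}
1 &= \int_0^\infty dv\, 4\pi A^3 v^2 e^{-Bv^2} = A^3\left(\frac{\pi}{B}\right)^{3/2}
  &&\Longrightarrow\quad A = \sqrt{\frac{B}{\pi}}, \\
\frac{3k_BT}{m} &= \langle v^2\rangle
  = \frac{\int_0^\infty dv\, v^4 e^{-Bv^2}}{\int_0^\infty dv\, v^2 e^{-Bv^2}} = \frac{3}{2B}
  &&\Longrightarrow\quad B = \frac{m}{2k_BT},
\end{aligned}
```

so that $4\pi A^3 = 4\pi\left(m/2\pi k_BT\right)^{3/2}$ and (167) reproduces (160) exactly.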

2.6 The Diatomic Gas

In this subsection we will study a system of non-interacting diatomic molecules. This will let us see the importance of quantum mechanical effects in real world systems. More specifically, our aim is to compute the heat capacity of a diatomic gas as a function of temperature and compare it to experimental results. The resulting discrepancy was one of the biggest puzzles of 19th-century physics. Here, we will use it to understand the limitations of the classical description of nature.

Since the system is non-interacting, we will focus on a single molecule in order to derive the single particle partition function. Our first step is to construct the Hamiltonian describing our molecule. A simple model for our molecule is based on the following assumptions:

• Two identical atoms of mass $m$ at positions $\vec r_1$ and $\vec r_2$, connected by a spring of natural length $\ell$ and spring constant $\kappa$. The potential between the two particles is then $U = \frac{\kappa}{2}\big(|\vec r_1 - \vec r_2| - \ell\big)^2$.

• The vibrations of the molecule are not too wild. In mathematical terms, the distance between the two atoms stays very close to the natural length of the spring, $\big|\,|\vec r_1 - \vec r_2| - \ell\,\big| \ll \ell$.

The above picture suggests that the degrees of freedom of a single molecule can be separated into translations of the whole molecule, and rotations and vibrations which happen in the rest frame of the center of mass.

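In full generality, the Lagrangian of the molecule is

$$L = \frac{m}{2}\dot{\vec r}_1^{\,2} + \frac{m}{2}\dot{\vec r}_2^{\,2} - \frac{\kappa}{2}\big(|\vec r_1-\vec r_2| - \ell\big)^2. \tag{168}$$

As is common when the interaction potential is translationally invariant, it is now useful to introduce the center of mass $\vec R$ by changing variables according to

$$\vec R = \frac{\vec r_1 + \vec r_2}{2}, \qquad \vec r = \frac{\vec r_1 - \vec r_2}{2}, \tag{169}$$

where $\vec r$ is half of the vector pointing from one atom to the other. This leads to the Lagrangian

$$L = \frac{M}{2}\dot{\vec R}^{\,2} + m\,\dot{\vec r}^{\,2} - \frac{\kappa}{2}\big(2|\vec r| - \ell\big)^2, \tag{170}$$

where $M = 2m$ is the total mass of the molecule. The first term is the Lagrangian of the center of mass, which due to translational invariance behaves like a free particle of mass $M$. The second and third terms govern the relative motion of the two atoms. We now want to separate the rotations from the vibrations by using spherical coordinates according to

$$\vec r = r\,(\sin\theta\cos\phi,\ \sin\theta\sin\phi,\ \cos\theta), \tag{171}$$

with $\theta\in[0,\pi]$ and $\phi\in[0,2\pi)$. This change of variables gives us the Lagrangian

$$L = \frac{M}{2}\dot{\vec R}^{\,2} + m r^2\big(\dot\theta^2 + \sin^2\theta\,\dot\phi^2\big) + m\dot r^2 - \frac{\kappa}{2}(2r-\ell)^2. \tag{172}$$

This Lagrangian is still exact, without having used the approximation of small vibrations. Moreover, we see that the rotational and vibrational degrees of freedom are coupled due to the factor $r^2$ in front of the second term. This is precisely where the assumption of small vibrations is useful. By setting $r \simeq \ell/2$ in that factor, we are essentially decoupling those two degrees of freedom. After this approximation, the Lagrangian takes the form

$$L = \frac{M}{2}\dot{\vec R}^{\,2} + \frac{m\ell^2}{4}\big(\dot\theta^2 + \sin^2\theta\,\dot\phi^2\big) + m\dot r^2 - \frac{\kappa}{2}(2r-\ell)^2. \tag{173}$$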

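After this split, we can now derive the Hamiltonian of our molecule term by term, each term having a very clean interpretation.

• $L_{\text{tr}}$ for translations, with respect to the degrees of freedom $\vec R$

The conjugate momentum is

$$\vec P = M\dot{\vec R}, \tag{174}$$

yielding the Hamiltonian

$$H_{\text{tr}} = \frac{\vec P^{\,2}}{2M}. \tag{175}$$

• $L_{\text{rot}}$ for rotations, with respect to the degrees of freedom $\theta$, $\phi$

The conjugate momenta are

$$p_\theta = \frac{m\ell^2}{2}\dot\theta, \qquad p_\phi = \frac{m\ell^2}{2}\sin^2\theta\,\dot\phi. \tag{176}$$

The corresponding Hamiltonian is then

$$H_{\text{rot}} = \frac{p_\theta^2}{2I} + \frac{p_\phi^2}{2I\sin^2\theta}, \tag{177}$$

where we have defined the moment of inertia $I = m\ell^2/2$.

• $L_{\text{vib}}$ for vibrations, with respect to the degree of freedom $r$

The conjugate momentum is

$$p_r = 2m\dot r. \tag{178}$$

The corresponding Hamiltonian is then

$$H_{\text{vib}} = \frac{p_r^2}{4m} + \frac{\kappa}{2}(2r-\ell)^2. \tag{179}$$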

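After this derivation, the full Hamiltonian that we are going to need is

$$H = H_{\text{tr}} + H_{\text{rot}} + H_{\text{vib}}. \tag{180}$$

For the single particle partition function we can then write

$$Z_1 = \frac{1}{h^6}\int d^3R\, d^3P\, d\theta\, dp_\theta\, d\phi\, dp_\phi\, dr\, dp_r\; e^{-\beta H} = Z_{\text{tr}}\, Z_{\text{rot}}\, Z_{\text{vib}}. \tag{181}$$

In the last step we have decomposed the partition function into a translational, a rotational and a vibrational factor. Having in mind the heat capacity, our first step is to compute the average energy of the $N$-body system, whose partition function is

$$Z = \frac{Z_1^N}{N!}. \tag{182}$$

Using our formula (1.1.4) for the average energy in the canonical ensemble, we have

$$E = -\frac{\partial\ln Z}{\partial\beta} = -N\frac{\partial}{\partial\beta}\left(\ln Z_{\text{tr}} + \ln Z_{\text{rot}} + \ln Z_{\text{vib}}\right) \equiv E_{\text{tr}} + E_{\text{rot}} + E_{\text{vib}}. \tag{183}$$

The above shows that the factorisation of the partition function in equation (181) isolates the contribution of each degree of freedom to the average energy. Going back to our computation, we can easily compute

$$Z_{\text{tr}} = \frac{V}{\lambda_M^3}, \qquad Z_{\text{rot}} = \frac{8\pi^2 I}{\beta h^2} = \frac{2 I k_BT}{\hbar^2}, \qquad Z_{\text{vib}} = \frac{2\pi}{\beta h\omega} = \frac{k_BT}{\hbar\omega}, \quad \omega = \sqrt{\frac{2\kappa}{m}}, \tag{184}$$

where $\lambda_M$ is the de Broglie wavelength as defined in equation (130), with $m$ replaced by $M$. In $Z_{\text{vib}}$ we extended the integral over $r$ to the whole real line, which is justified for small vibrations. After this, the contributions of the individual degrees of freedom to the average energy are

$$E_{\text{tr}} = \frac32 Nk_BT, \qquad E_{\text{rot}} = Nk_BT, \qquad E_{\text{vib}} = Nk_BT. \tag{185}$$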

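Notice that we could have reached these conclusions solely from the equipartition theorem of subsection 2.2. The Hamiltonian (180) contains seven quadratic canonical variables: $\vec P$, $p_\theta$, $p_\phi$, $p_r$ and $r$. After using expression (29) for the heat capacity, we obtain the corresponding heat capacity contributions

$$C_{\text{tr}} = \frac32 Nk_B, \qquad C_{\text{rot}} = Nk_B, \qquad C_{\text{vib}} = Nk_B, \tag{186}$$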

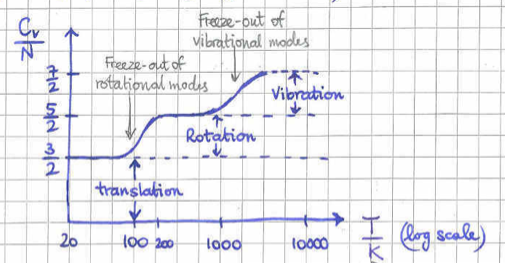

yielding the total heat capacity $C_V = \frac72 Nk_B$. We see that every degree of freedom contributes a temperature-independent amount to the heat capacity, leading to a temperature-independent constant. However, this result disagrees with the experimental data shown in figure 6. It is clear that, as the temperature is lowered, the different degrees of freedom freeze out, offering no contribution to the specific heat. This is purely a quantum mechanical effect, tied to the fact that the energy levels of a quantum system are discrete.

To see the effect of freezing out for the vibrational degrees of freedom, it is useful to recall the specific heat of the quantum mechanical simple harmonic oscillator of frequency $\omega$. The formula we derived in the corresponding homework problem was

$$C = k_B(\beta\hbar\omega)^2\frac{e^{\beta\hbar\omega}}{\left(e^{\beta\hbar\omega}-1\right)^2}. \tag{187}$$

From this formula we see that the specific heat interpolates between $k_B$ for $k_BT \gg \hbar\omega$ and zero as we take the limit $T \to 0$. The crossover between the two behaviours happens when $k_BT \approx \hbar\omega$. In more physical terms, this is when the typical thermal energy in the system is approximately equal to the spacing between the quantised energy levels of the system, $E_n = \hbar\omega\left(n+\frac12\right)$. Most importantly, $\hbar\omega$ is the energy gap between the ground state and the first excited state.
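Formula (187) is easy to explore numerically. The sketch below is our own illustration, written in terms of the dimensionless ratio $t = k_BT/\hbar\omega$. It rewrites $x^2e^x/(e^x-1)^2$ as $x^2e^{-x}/(1-e^{-x})^2$ with $x = \beta\hbar\omega$, and uses `math.expm1` so that neither the high- nor the low-temperature end loses precision.

```python
import math

def oscillator_heat_capacity(t):
    """C / k_B from equation (187), with t = k_B T / (hbar omega)."""
    x = 1.0 / t                       # x = beta * hbar * omega
    # x^2 e^x / (e^x - 1)^2 == x^2 e^{-x} / (1 - e^{-x})^2, and 1 - e^{-x} = -expm1(-x)
    return x * x * math.exp(-x) / math.expm1(-x) ** 2

for t in (0.05, 0.1, 0.3, 1.0, 3.0, 10.0, 100.0):
    print(f"k_B T / hbar omega = {t:6.2f}   C / k_B = {oscillator_heat_capacity(t):.6f}")
```

The printed values rise from essentially zero to $1$, i.e. to $k_B$, with the crossover around $k_BT \approx \hbar\omega$.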

2.7 Weakly Interacting Gases - The Virial Expansion

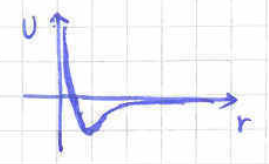

So far we have studied gases of non-interacting particles. In this subsection we will consider gases with non-zero but controlled interparticle interactions. A reasonable class of interactions to study are those which vanish when the particles are far away from each other and lead to repulsion when particles get too close. An example of such a potential is depicted in figure 7, where we can see that there is a characteristic distance $r_0$ above which the potential is very small. Two physically interesting potentials of this class are the Lennard-Jones potential

$$U(r) = 4\epsilon\left[\left(\frac{\sigma}{r}\right)^{12} - \left(\frac{\sigma}{r}\right)^{6}\right] \tag{188}$$

and the hard-core potential

$$U(r) = \begin{cases} \infty, & r < r_0, \\ -U_0\left(\dfrac{r_0}{r}\right)^6, & r \geq r_0. \end{cases} \tag{189}$$

Given the above properties, it is natural to guess that the effects of these interactions on the statistical behaviour of our gas are going to be controlled by the density $N/V$. Intuitively, we expect that the equation of state will have the leading behaviour of the ideal gas, as in equation (135), and that it will receive corrections according to the Virial expansion

$$\frac{p}{k_BT} = \frac{N}{V} + B_2(T)\frac{N^2}{V^2} + B_3(T)\frac{N^3}{V^3} + \cdots \tag{190}$$

The temperature dependent Virial coefficients $B_n(T)$ can be determined from first principles by following the Mayer cluster expansion. Here, we will only determine $B_2(T)$ in terms of the inter-particle potential $U(r)$.

To see how this works, we first write down a general Hamiltonian of $N$ identical interacting particles,

$$H = \sum_{i=1}^{N}\frac{\vec p_i^{\,2}}{2m} + \sum_{i<j} U(r_{ij}), \tag{191}$$

where we have defined $r_{ij} = |\vec r_i - \vec r_j|$. Treating the particles as indistinguishable, the partition function in equation (148) is no longer factorisable due to interactions. However, after integrating over momenta it takes the form

$$Z(N,V,T) = \frac{1}{N!\,\lambda^{3N}}\int\prod_{i=1}^{N}d^3r_i\; e^{-\beta\sum_{j<k}U(r_{jk})}, \tag{192}$$

where $\lambda$ is the de Broglie wavelength (130). In the absence of interactions, $U = 0$, the remaining integral over positions would give a factor $V^N$ and we would recover the ideal gas partition function of equation (132).

We need to find a good expansion scheme in order to approximate the remaining integral in the partition function. A naive guess would be to expand the exponential $e^{-\beta U}$, assuming a small value for the exponent. This fails because, no matter how high a temperature we consider (small $\beta$), at small distances the potential blows up. A highly non-obvious expansion comes after introducing the Mayer function

$$f(r) = e^{-\beta U(r)} - 1, \tag{193}$$

which is always bounded and vanishes at infinity. More precisely, given the generic properties of our potential we have $f(r) \to -1$ as $r \to 0$ and $f(r) \to 0$ as $r \to \infty$. Moreover, above the characteristic scale $r_0$, beyond which our potential vanishes, the Mayer function is very small.

Before we go back to the computation of the integral in the partition function (192), it is useful to appreciate the benefit of introducing the Mayer function. A simple way to see this is by considering the integral

$$\int_V d^3r\; e^{-\beta U(r)} = \int_V d^3r\,\big(1 + f(r)\big) = V + \int_V d^3r\, f(r). \tag{194}$$

In the above expression, the difficult integral has been split into two terms. The first one is equal to the volume $V$. However, since the Mayer function is approximately zero for $r > r_0$, the second term will be of order $r_0^3$. Therefore $f$ has effectively helped us split a non-trivial integral into a large term and a suppressed one. Moreover, since $f$ is approximately zero outside a volume of order $r_0^3$, we can approximate integrals of $f$ over the volume $V$ by

$$\int_V d^3r\, f(r) \simeq \int_{\mathbb{R}^3} d^3r\, f(r) = 4\pi\int_0^\infty dr\, r^2 f(r). \tag{195}$$

To carry out the integral in (192) we introduce the notation $f_{ij} = f(r_{ij})$, allowing us to write

$$e^{-\beta\sum_{j<k}U(r_{jk})} = \prod_{j<k}\big(1 + f_{jk}\big) = 1 + \sum_{j<k} f_{jk} + \frac12\sum_{j<k}\ \sum_{\substack{l<m \\ (l,m)\neq(j,k)}} f_{jk}\, f_{lm} + \cdots \tag{198}$$

The first term in the integrand simply gives $V^N$ after performing the integral over all particle positions. According to the discussion of the previous paragraph, each power of the Mayer function in the other terms lowers the power of the volume by one. At this point it is natural to guess that the second term will give the second Virial coefficient in the expansion of equation (190), and so on. Therefore, for our purposes it is enough to consider the first two terms in the integrand.

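After noting that the second term gives a sum of $N(N-1)/2$ equal terms, we can write

$$\int\prod_{i=1}^{N}d^3r_i\,\sum_{j<k}f_{jk} = \frac{N(N-1)}{2}\,V^{N-2}\int d^3r_1\, d^3r_2\, f(r_{12}) = \frac{N(N-1)}{2}\,V^{N-1}\int d^3r\, f(r), \tag{199}$$

where in the second step we changed the variable of integration according to $\vec r = \vec r_1 - \vec r_2$, with the remaining integral over $\vec r_2$ giving a factor of $V$.

Putting everything together, we have that

$$Z = \frac{V^N}{N!\,\lambda^{3N}}\left(1 + \frac{N(N-1)}{2V}\int d^3r\, f(r) + \cdots\right) \simeq Z_{\text{ideal}}\left(1 + \frac{N^2}{2V}\int d^3r\, f(r) + \cdots\right), \tag{200}$$

where we recognised the partition function $Z_{\text{ideal}}$ of the ideal gas of equation (132) with $\mathcal{N} = N!$.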

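Since we want to compute the pressure of our gas, it is useful to compute the free energy. According to equation (1.1.8) this is given by

$$F = -k_BT\ln Z \simeq F_{\text{ideal}} - k_BT\ln\left(1 + \frac{N^2}{2V}\int d^3r\, f(r)\right) \simeq F_{\text{ideal}} - \frac{N^2k_BT}{2V}\int d^3r\, f(r). \tag{201}$$

We can now evaluate the pressure by using equation (70),

$$p = -\frac{\partial F}{\partial V} = \frac{Nk_BT}{V} - \frac{N^2k_BT}{2V^2}\int d^3r\, f(r). \tag{202}$$

Comparing the above expression with the Virial expansion (190), we can read off the second Virial coefficient,

$$B_2(T) = -\frac12\int d^3r\, f(r) = -2\pi\int_0^\infty dr\, r^2\left(e^{-\beta U(r)} - 1\right). \tag{203}$$

The above gives us an expression for $B_2(T)$ in terms of the potential $U(r)$ and the temperature $T$.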

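As an example, we will apply formula (203) to the case of the hard-core potential of equation (189). For this case, the Mayer function we need to integrate is

$$f(r) = \begin{cases} -1, & r < r_0, \\ e^{\beta U_0 (r_0/r)^6} - 1, & r \geq r_0. \end{cases} \tag{204}$$

After introducing spherical coordinates and integrating over the angular directions, we obtain

$$B_2(T) = \frac{2\pi r_0^3}{3} - 2\pi\int_{r_0}^{\infty} dr\, r^2\left(e^{\beta U_0 (r_0/r)^6} - 1\right). \tag{205}$$

We see that the second integral, coming from the region of attraction, is still quite non-trivial if we want to obtain a closed form for it. However, since the integrand is bounded in the whole region, we can consider the high temperature limit $\beta U_0 \ll 1$. In this limit, we can expand the exponential at any value of $r \geq r_0$ to obtain

$$B_2(T) \simeq \frac{2\pi r_0^3}{3} - 2\pi\beta U_0 \int_{r_0}^{\infty} dr\, r^2\left(\frac{r_0}{r}\right)^6 = \frac{2\pi r_0^3}{3} - \frac{2\pi r_0^3 U_0}{3k_BT} \equiv b - \frac{a}{k_BT}, \tag{206}$$

where we have defined $a = \frac{2\pi r_0^3}{3}U_0$ and $b = \frac{2\pi r_0^3}{3}$. At this point it is useful to point out that $b$ comes from the repulsive region of the potential, while $a$ represents the attractive part.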
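The quality of the high temperature approximation (206) can be checked by evaluating (205) numerically. The sketch below is our own illustration, in units $r_0 = U_0 = 1$. The attractive integral is mapped to a finite interval with $u = r_0/r$, for which $r^2dr = r_0^3u^{-4}du$, and is evaluated with the trapezoidal rule. As a cross-check, a term-by-term expansion of the exponential gives $\int_{r_0}^\infty dr\, r^2(e^{\beta U_0(r_0/r)^6}-1) = r_0^3\sum_{k\geq1}(\beta U_0)^k/\big(k!\,(6k-3)\big)$. The exact $B_2$ is then compared with $b - a/k_BT$.

```python
import math
import numpy as np

def b2_hard_core(kT, r0=1.0, U0=1.0, n=200_001):
    """B_2(T) for the hard-core potential (189), evaluated from (205) by quadrature."""
    beta = 1.0 / kT
    core = 2.0 * np.pi * r0**3 / 3.0                  # repulsive core, r < r0
    u = np.linspace(0.0, 1.0, n)                      # u = r0 / r maps [r0, inf) to (0, 1]
    integrand = np.zeros_like(u)
    # r^2 (e^{beta U0 (r0/r)^6} - 1) dr  ->  r0^3 u^{-4} expm1(beta U0 u^6) du;
    # the u -> 0 limit of this integrand is 0, so the first entry stays zero
    integrand[1:] = r0**3 * np.expm1(beta * U0 * u[1:] ** 6) / u[1:] ** 4
    du = u[1] - u[0]
    tail = du * (integrand.sum() - 0.5 * (integrand[0] + integrand[-1]))
    return core - 2.0 * np.pi * tail

def b2_series(kT, r0=1.0, U0=1.0, terms=40):
    """Same B_2 from the term-by-term expansion of the exponential in (205)."""
    x = U0 / kT
    tail = sum(x**k / (math.factorial(k) * (6 * k - 3)) for k in range(1, terms + 1))
    return 2.0 * np.pi * r0**3 / 3.0 - 2.0 * np.pi * r0**3 * tail

def b2_high_T(kT, r0=1.0, U0=1.0):
    """Approximation (206): b - a / k_B T."""
    b = 2.0 * np.pi * r0**3 / 3.0
    a = b * U0
    return b - a / kT

for kT in (0.5, 1.0, 3.0, 10.0, 100.0):
    print(kT, b2_hard_core(kT), b2_series(kT), b2_high_T(kT))
```

At low temperature the attractive part dominates and $B_2$ turns negative, which the first-order formula (206) captures only qualitatively.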

Putting everything together with equation (190), the hard-core potential leads to the equation of state

$$\frac{p}{k_BT} = \frac{N}{V} + \left(b - \frac{a}{k_BT}\right)\frac{N^2}{V^2}. \tag{207}$$

This is our final answer, but it is instructive to bring the equation of state into a slightly different form, the Van der Waals equation of state for an interacting gas. We have

$$p = \frac{Nk_BT}{V}\left(1 + \frac{bN}{V}\right) - \frac{aN^2}{V^2} \simeq \frac{Nk_BT}{V - bN} - \frac{aN^2}{V^2}, \tag{208}$$

where in the second step we used the fact that $bN/V$ is a small number in order to approximate

$$1 + \frac{bN}{V} \simeq \left(1 - \frac{bN}{V}\right)^{-1}. \tag{209}$$

It is now interesting to compare the Van der Waals equation (208) to the equation of state of the ideal gas,

$$p = \frac{Nk_BT}{V}. \tag{210}$$

It looks as if we have effectively replaced the volume $V$ by $V - bN$, which is the volume available for a particle to explore due to the repulsion from the other particles. Moreover, the attractive part of the potential results in a reduction of the pressure, given by the term $-aN^2/V^2$ in (208), which is proportional to $a$.

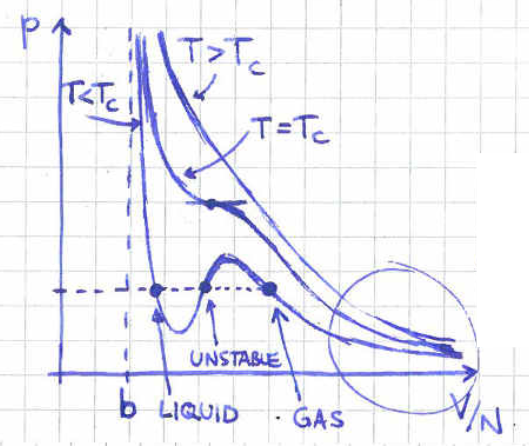

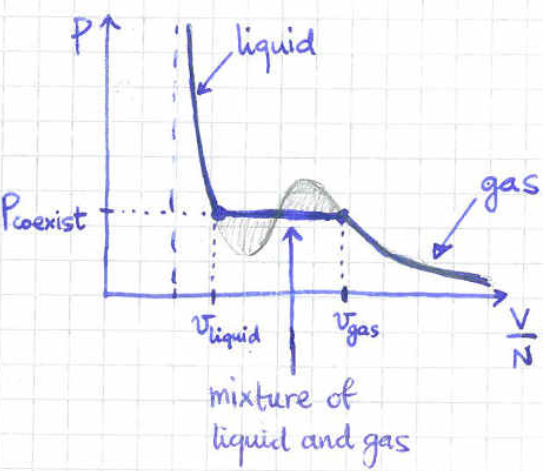

As an aside that the 4H students will study in more detail, another interesting feature of the Van der Waals equation of state (208) is the fact that it can capture a phase transition. To see this, in figure 8 we plot different isotherms of equation (208) in the $p$-$V$ diagram. The diagram shows that there is a critical temperature $T_c$ below which the isotherms have three possible values of the volume at fixed pressure.

The middle point is thermodynamically unstable, since in that region the pressure decreases as we decrease the volume. The smallest volume, $V_1$, represents the liquid phase, while the largest volume, $V_3$, represents the gas phase of our system. So, which point is the correct one? The answer is that the whole segment of the isotherm between the volumes $V_1$ and $V_3$ needs to be replaced by a flat line, as in figure 9. The point of equilibrium between the liquid and the gas phase is determined by the Maxwell construction.
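The three-fold root structure below $T_c$ can be seen directly. The sketch below is our own illustration. It uses the standard reduced form of the Van der Waals equation, $p_r = 8T_r/(3V_r - 1) - 3/V_r^2$, with pressure, volume and temperature measured in units of their critical values; this rescaling is not derived in the notes. Multiplying out gives the cubic $3p_rV_r^3 - (p_r + 8T_r)V_r^2 + 9V_r - 3 = 0$. Its physical roots, which are real with $V_r > 1/3$, are found with `numpy.roots`.

```python
import numpy as np

def vdw_volumes(p_r, T_r, tol=1e-9):
    """Physical solutions V_r of the reduced Van der Waals equation at given p_r, T_r."""
    coeffs = [3.0 * p_r, -(p_r + 8.0 * T_r), 9.0, -3.0]  # 3p V^3 - (p + 8T) V^2 + 9V - 3
    roots = np.roots(coeffs)
    real = roots[np.abs(roots.imag) < tol].real
    return np.sort(real[real > 1.0 / 3.0])                 # V_r > 1/3 corresponds to V > bN

print(vdw_volumes(0.6, 0.9))   # below T_c: liquid-like, unstable and gas-like volumes
print(vdw_volumes(1.0, 1.1))   # above T_c: a single volume
```

For $T_r = 0.9$ and $p_r = 0.6$ the middle (unstable) root is exactly $V_r = 1$, which is easy to verify by substituting into the cubic.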

3 Quantum Gases

In the previous sections (especially in section 2.6), we came to appreciate that the discreteness of the energy levels in quantum mechanics leads to a drastic change in the behaviour of thermodynamic systems at low temperatures. This effect is, of course, already present in single particle systems. What was interesting is how it propagates into the collective behaviour of large systems.

The first aim of this section is to show how one can arrive at the classical partition function (21) by taking an appropriate limit of the quantum partition function (8). As we have mentioned before, the constant $h$ that appears in (21) was introduced by hand in order to make the partition function dimensionless. Here we will see how this constant naturally emerges from the classical limit of (8). There it is identified with Planck's constant, which is secretly hiding in the energy levels of the quantum system.

The second aim of this section is to highlight even more striking quantum effects, related to the very nature of quantum many-body systems. In the previous sections, as far as many-body systems were concerned, quantum mechanical particles were treated on an equal footing with classical ones, the only difference being the discreteness of their energy levels. Here we will give a more precise treatment of identical particles from the point of view of quantum mechanics. Depending on how they can populate the energy levels of a many-body system, quantum particles are divided into two large categories, the Bosons and the Fermions. As we will see, at high temperatures non-interacting gases of Bosons or Fermions look very similar to the classical ideal gas of section 2.1. However, at low temperatures they form two very different states of matter.

3.1 From Quantum to Classical

This section is for your own education, since it assumes previous knowledge of quantum mechanics. In order to keep the discussion as simple as possible, we will focus on the single particle partition function in one spatial dimension. This is enough to illustrate the basic concepts and, in particular, to show the quantum mechanical origin of the factor of $h$ in the classical partition function (21).

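The basic ingredients in our derivation will be the three eigenbases $|x\rangle$, $|p\rangle$ and $|n\rangle$, corresponding to the position operator $\hat{x}$, the momentum operator $\hat{p}$ and the Hamiltonian $\hat{H}$. As we know, these satisfy the eigenvalue equations,

$$\hat{x}\,|x\rangle = x\,|x\rangle, \qquad \hat{p}\,|p\rangle = p\,|p\rangle, \qquad \hat{H}\,|n\rangle = E_n\,|n\rangle. \tag{211}$$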

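At this point it is useful to recall the normalisation conventions,

$$\langle x|x'\rangle = \delta(x - x'), \qquad \langle p|p'\rangle = \delta(p - p'), \qquad \langle n|m\rangle = \delta_{nm}, \tag{212}$$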

according to which eigenvectors corresponding to continuous spectra are normalised with Dirac's delta, while eigenvectors of discrete spectra are normalised with Kronecker's delta. We will also need the inner product,

$$\langle x|p\rangle = \frac{1}{\sqrt{2\pi\hbar}}\, e^{ipx/\hbar}, \tag{213}$$

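which can be derived from the defining equation of the momentum operator in the position representation,

$$\langle x|\hat{p}|\psi\rangle = -i\hbar\,\frac{d}{dx}\langle x|\psi\rangle. \tag{214}$$

Choosing $|\psi\rangle = |p\rangle$ gives $-i\hbar\,\frac{d}{dx}\langle x|p\rangle = p\,\langle x|p\rangle$, whose solution, normalised according to (212), is (213).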

Since these are eigenbases of Hermitian operators, we know that they must be complete. Given the normalisation of equation (212), we have three completeness relations,

$$\int dx\, |x\rangle\langle x| = \int dp\, |p\rangle\langle p| = \sum_n |n\rangle\langle n| = \hat{1}, \tag{215}$$

where $\hat{1}$ is the identity operator on our Hilbert space.

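Given a particular discrete orthonormal basis $\{|n\rangle\}$, it is useful to define the trace of an operator $\hat{A}$ according to,

$$\mathrm{Tr}\,\hat{A} = \sum_n \langle n|\hat{A}|n\rangle. \tag{216}$$

For a continuous basis, such as $\{|x\rangle\}$, the trace would be,

$$\mathrm{Tr}\,\hat{A} = \int dx\, \langle x|\hat{A}|x\rangle. \tag{217}$$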

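Our derivation relies on the fact that the trace is basis independent. Indeed, we can use the completeness relations (215) along with the orthogonality conditions (212) to show that e.g.

$$\begin{aligned}
\mathrm{Tr}\,\hat{A} &= \sum_n \langle n|\hat{A}|n\rangle \\
&= \sum_n \int dx \int dx'\, \langle n|x\rangle\langle x|\hat{A}|x'\rangle\langle x'|n\rangle \\
&= \int dx \int dx'\, \langle x|\hat{A}|x'\rangle \sum_n \langle x'|n\rangle\langle n|x\rangle \\
&= \int dx \int dx'\, \langle x|\hat{A}|x'\rangle\, \langle x'|\Big(\sum_n |n\rangle\langle n|\Big)|x\rangle \\
&= \int dx \int dx'\, \langle x|\hat{A}|x'\rangle\, \langle x'|x\rangle \\
&= \int dx \int dx'\, \langle x|\hat{A}|x'\rangle\, \delta(x' - x) \\
&= \int dx\, \langle x|\hat{A}|x\rangle.
\end{aligned}\tag{218}$$

In the second and fifth lines we used the completeness relations (215), and in the penultimate line the normalisation (212) yielded a Dirac delta.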
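The same statement is easy to check in a finite-dimensional toy model, where the continuous basis is replaced by a second discrete orthonormal basis. In the NumPy sketch below, the $5$-dimensional space, the random operator and the random unitary are all illustrative assumptions; the columns of the unitary `Q` play the role of the new basis vectors, and the two sums are the trace evaluated in the two bases.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
dim = 5  # toy finite-dimensional Hilbert space (illustrative choice)

# An arbitrary (not necessarily Hermitian) operator A, written in the standard basis |n>.
A = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))

# A second orthonormal basis: the columns of a random unitary Q from a QR decomposition.
Q, _ = np.linalg.qr(rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim)))

trace_standard = sum(A[n, n] for n in range(dim))                      # sum_n <n|A|n>
trace_rotated = sum(Q[:, k].conj() @ A @ Q[:, k] for k in range(dim))   # sum_k <k'|A|k'>

print(trace_standard, trace_rotated)
```

The two printed numbers agree up to rounding errors, which is the finite-dimensional counterpart of (218).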

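We now define the thermal density matrix $\hat{\rho}$, which helps us write the quantum partition function (8) as a trace,

$$\hat{\rho} = e^{-\beta\hat{H}}, \qquad Z = \sum_n e^{-\beta E_n} = \sum_n \langle n|e^{-\beta\hat{H}}|n\rangle = \mathrm{Tr}\,\hat{\rho}. \tag{219}$$

In the above derivation we used that $e^{-\beta\hat{H}}|n\rangle = e^{-\beta E_n}|n\rangle$, since $|n\rangle$ is an eigenvector of $\hat{H}$ with eigenvalue $E_n$. The basis independence of the trace now lets us write it in terms of the position basis,

$$Z = \int dx\, \langle x|e^{-\beta\hat{H}}|x\rangle. \tag{220}$$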

We are interested in a particle of mass $m$ moving in a potential $V(x)$, described by the quantum mechanical Hamiltonian,

$$\hat{H} = \frac{\hat{p}^2}{2m} + V(\hat{x}). \tag{221}$$

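Since $|x\rangle$ is an eigenvector of $\hat{x}$, we know that $e^{-\beta V(\hat{x})}|x\rangle = e^{-\beta V(x)}|x\rangle$. It is therefore very tempting to write the thermal density matrix that appears in the integrand of equation (220) as the product of $e^{-\beta\hat{p}^2/2m}$ and $e^{-\beta V(\hat{x})}$. Of course, we cannot do this exactly, since $\hat{p}^2$ and $V(\hat{x})$ do not commute. In order to achieve something like this we have to use the Zassenhaus identity,

$$e^{X+Y} = e^{X}\, e^{Y}\, e^{-\frac{1}{2}[X,Y]}\, e^{\frac{1}{6}\left(2[Y,[X,Y]] + [X,[X,Y]]\right)} \cdots \tag{222}$$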

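with the dots hiding higher order commutators between $X$ and $Y$. In our case we have $X = -\beta\hat{p}^2/2m$ and $Y = -\beta V(\hat{x})$. Since $[\hat{x},\hat{p}] = i\hbar$, every commutator of $\hat{x}$ with $\hat{p}$ produces a factor of $\hbar$, which we take to be small in the classical approximation. Since we are interested in the classical limit, it is perfectly fine to approximate

$$e^{-\beta\hat{H}} \approx e^{-\beta\hat{p}^2/2m}\, e^{-\beta V(\hat{x})}. \tag{223}$$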
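The size of the neglected terms can be illustrated with a toy example of our own: two non-commuting $2\times 2$ matrices (the Pauli matrices $\sigma_z$ and $\sigma_x$) scaled by a small parameter $t$, which here loosely plays the role of the small commutators above. The NumPy sketch below compares $e^{t(X+Y)}$ with $e^{tX}e^{tY}$; according to (222) the difference should be dominated by the $-\tfrac{t^2}{2}[X,Y]$ term, so halving $t$ should reduce it by roughly a factor of four.

```python
import numpy as np

def expm_symmetric(a):
    """Matrix exponential of a real symmetric matrix via its eigendecomposition."""
    w, v = np.linalg.eigh(a)
    return v @ np.diag(np.exp(w)) @ v.T

# Two non-commuting symmetric matrices (Pauli sigma_z and sigma_x), chosen only for illustration.
X = np.array([[1.0, 0.0], [0.0, -1.0]])
Y = np.array([[0.0, 1.0], [1.0, 0.0]])
comm = X @ Y - Y @ X  # [X, Y]

def splitting_error(t):
    """Frobenius norm of exp(t(X+Y)) - exp(tX) exp(tY)."""
    exact = expm_symmetric(t * (X + Y))
    split = expm_symmetric(t * X) @ expm_symmetric(t * Y)
    return np.linalg.norm(exact - split)

for t in (0.1, 0.05, 0.025):
    leading = 0.5 * t**2 * np.linalg.norm(comm)  # size of the -(t^2/2)[X,Y] term in (222)
    print(f"t = {t:<6} error = {splitting_error(t):.3e}   (t^2/2)|[X,Y]| = {leading:.3e}")
```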

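This allows us to approximate the partition function in (220) according to,

$$\begin{aligned}
Z &= \int dx\, \langle x|e^{-\beta\hat{H}}|x\rangle \\
&\approx \int dx\, \langle x|e^{-\beta\hat{p}^2/2m}\, e^{-\beta V(\hat{x})}|x\rangle \\
&= \int dx\, e^{-\beta V(x)}\, \langle x|e^{-\beta\hat{p}^2/2m}|x\rangle \\
&= \int dx \int dp\, e^{-\beta V(x)}\, \langle x|e^{-\beta\hat{p}^2/2m}|p\rangle\langle p|x\rangle \\
&= \int dx \int dp\, e^{-\beta V(x)}\, e^{-\beta p^2/2m}\, \langle x|p\rangle\langle p|x\rangle \\
&= \int dx \int \frac{dp}{2\pi\hbar}\, e^{-\beta\left[p^2/2m + V(x)\right]} \\
&= \frac{1}{h}\int dx\, dp\; e^{-\beta H(x,p)}.
\end{aligned}\tag{224}$$

In the last line we see that the leading term in an $\hbar$-expansion is the classical single particle partition function, with exactly the factor of $1/h$ that we previously introduced by hand! A few comments about the individual steps are in order. In the fourth line we used the completeness relation (215) in terms of the momentum eigenvectors, which allowed us to exploit the fact that $e^{-\beta\hat{p}^2/2m}|p\rangle = e^{-\beta p^2/2m}|p\rangle$ in the line below. In the penultimate line we used equation (213), which gives $\langle x|p\rangle\langle p|x\rangle = |\langle x|p\rangle|^2 = 1/(2\pi\hbar)$. Finally, $h = 2\pi\hbar$ and $H(x,p) = p^2/2m + V(x)$ is the classical Hamiltonian.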
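As a concrete check of this limit, we can take a harmonic oscillator $V(x) = \tfrac{1}{2}m\omega^2x^2$ (our choice of example; the derivation above holds for any potential). Its energy levels are $E_n = \hbar\omega(n + \tfrac12)$, and the classical integral in the last line of (224) evaluates to $1/(\beta\hbar\omega)$. The Python sketch below, with function names of our own choosing, sums the quantum series directly and compares the two.

```python
import math

def z_quantum(x, rel_tol=1e-15):
    """Sum of exp(-x (n + 1/2)) over n >= 0 for a 1D oscillator, with x = beta*hbar*omega."""
    total, n = 0.0, 0
    while True:
        term = math.exp(-x * (n + 0.5))
        total += term
        if term < rel_tol * total:   # remaining geometric tail is negligible
            break
        n += 1
    return total

def z_classical(x):
    """(1/h) * integral dx dp exp(-beta H) for the same oscillator, which equals 1/x."""
    return 1.0 / x

for x in (2.0, 0.5, 0.1, 0.01):
    ratio = z_quantum(x) / z_classical(x)
    print(f"beta*hbar*omega = {x:5.2f}   Z_quantum / Z_classical = {ratio:.6f}")
```

The ratio equals $x/(2\sinh(x/2)) \approx 1 - x^2/24$ with $x = \beta\hbar\omega$, so it approaches $1$ at high temperature, or formally as $\hbar \to 0$, in line with the classical limit discussed above.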

3.2 Single Particle in a Box

This subsection is a prelude to our study of non-interacting gases of Bosons and Fermions. The aim is to come up with a precise description of the single particle microstates of a particle inside a box of side $L$.

To set up the problem of finding the microstates, we need to solve the eigenvalue problem,

$$\hat{H}\,\psi(\mathbf{x}) = E\,\psi(\mathbf{x}), \tag{225}$$

with periodic boundary conditions on the sides of the box,

$$\psi(x + L, y, z) = \psi(x, y + L, z) = \psi(x, y, z + L) = \psi(x, y, z). \tag{226}$$

A more natural set of boundary conditions would be that the wavefunction vanishes on the sides of the box, but for a macroscopic box the physics remains unchanged.

According to the general rules of quantisation of classical systems, the Hamiltonian of the free particle can be found by replacing the classical momentum according to,

$$\mathbf{p} \;\to\; \hat{\mathbf{p}} = -i\hbar\nabla. \tag{227}$$

This suggests that for a non-relativistic particle we would have

$$\hat{H} = \frac{\hat{\mathbf{p}}^2}{2m} = -\frac{\hbar^2}{2m}\nabla^2. \tag{228}$$

An effective Hamiltonian we can write down for a relativistic particle is,

$$\hat{H} = \sqrt{m^2c^4 - \hbar^2c^2\,\nabla^2}. \tag{229}$$

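In both cases, we see that the Hamiltonian is a function of the Laplace operator $\nabla^2$. It is therefore easy to see that the wavefunctions that solve the eigenvalue problem (225) are plane waves of the form

$$\psi_{\mathbf{k}}(\mathbf{x}) = \frac{1}{\sqrt{V}}\, e^{i\mathbf{k}\cdot\mathbf{x}}, \qquad V = L^3, \tag{230}$$

for an arbitrary wavevector $\mathbf{k}$, where we have included an appropriate normalisation factor so that,

$$\int_{\text{box}} d^3x\; |\psi_{\mathbf{k}}(\mathbf{x})|^2 = 1. \tag{231}$$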

Plugging the eigenfunction (230) into the eigenvalue problem (225), we can extract the energy eigenvalue in terms of the wavevector $\mathbf{k}$. For the non-relativistic particle with Hamiltonian (228) we have

$$E_{\mathbf{k}} = \frac{\hbar^2 k^2}{2m}, \tag{232}$$

where $k = |\mathbf{k}|$ is the modulus of the wavevector. For the relativistic particle with Hamiltonian (229) we have,

$$E_{\mathbf{k}} = \sqrt{\hbar^2 k^2 c^2 + m^2 c^4}. \tag{233}$$

So far, we have not implemented the boundary conditions (226) of our problem. Using the wavefunction (230), we see that they imply a quantisation condition for the wavevector,

$$\mathbf{k} = \frac{2\pi}{L}\,(n_1, n_2, n_3), \qquad n_1, n_2, n_3 \in \mathbb{Z}. \tag{234}$$

We therefore see that the microstates of a free particle inside a box are parametrised by three integers $(n_1, n_2, n_3)$, and the corresponding energy levels, given by (232) and (233), are discrete, as we would expect for a quantum system in a finite volume.
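To make the labelling concrete, the short Python sketch below lists the lowest energy levels of a non-relativistic particle in the box together with their degeneracies, i.e. how many integer triples give the same $n_1^2 + n_2^2 + n_3^2$. Combining (232) and (234), energies are measured in units of $2\pi^2\hbar^2/(mL^2)$; the function name is our own.

```python
from collections import Counter
from itertools import product

def lowest_levels(n_cut=4):
    """Pairs (s, degeneracy) with s = n1^2 + n2^2 + n3^2, so that E = s * 2 pi^2 hbar^2 / (m L^2).

    Only s <= n_cut^2 is kept, because larger s could also come from |n_i| > n_cut.
    """
    counts = Counter(
        n1 * n1 + n2 * n2 + n3 * n3
        for n1, n2, n3 in product(range(-n_cut, n_cut + 1), repeat=3)
    )
    return [(s, counts[s]) for s in sorted(counts) if s <= n_cut * n_cut]

for s, degeneracy in lowest_levels()[:8]:
    print(f"n1^2 + n2^2 + n3^2 = {s:2d}   degeneracy = {degeneracy}")
```

Some values, such as $n_1^2 + n_2^2 + n_3^2 = 7$, cannot be written as a sum of three squares at all, so the corresponding energies simply do not occur in the spectrum.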

To summarise the important results of this subsection:

- The single particle quantum states are labelled by three integers $(n_1, n_2, n_3)$, with the discrete wavevector given by (234). The corresponding discrete energy levels are given by the dispersion relations (232) and (233) evaluated at these wavevectors.

3.2.1 Density of Single Particle States

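Another important quantity we would like to study is the density of states for a single free particle moving inside a macroscopic box. The density of states for a quantum system was first introduced in equation (116) of section 1.3. Here, we will approximate the function (116) in the case of a particle moving inside a box whose side is much greater than the de Broglie wavelength (130) of the particle. To see why this is the relevant comparison, consider the single particle partition function of a non-relativistic particle,

$$Z_1 = \sum_{\mathbf{k}} e^{-\beta\hbar^2 k^2/2m} = \sum_{n_1,n_2,n_3\in\mathbb{Z}} \exp\left[-\frac{2\pi^2\hbar^2\beta}{mL^2}\left(n_1^2 + n_2^2 + n_3^2\right)\right] = \sum_{n_1,n_2,n_3\in\mathbb{Z}} \exp\left[-\frac{\pi\lambda^2}{L^2}\left(n_1^2 + n_2^2 + n_3^2\right)\right]. \tag{235}$$

By simply staring at the dimensionless exponent, we see that we are indeed comparing the de Broglie wavelength $\lambda$ to the macroscopic length scale $L$ of our container. For $L \gg \lambda$ the exponent changes very little between neighbouring integers, which is what will allow us to replace sums over microstates by integrals.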
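A quick numerical illustration of this point, as a sketch with our own function names: since the exponent in (235) is a sum of three squares, $Z_1$ factorises into three identical one-dimensional sums. Replacing each sum by a Gaussian integral over $n$ gives $L/\lambda$, so that $Z_1 \approx V/\lambda^3$. The code compares the exact sum with this approximation as the ratio $\lambda/L$ is varied.

```python
import math

def z1_sum_1d(ratio):
    """One Cartesian factor of (235): sum over n in Z of exp(-pi ratio^2 n^2), with ratio = lambda / L."""
    a = math.pi * ratio**2
    n_max = int(10 / ratio) + 10   # terms beyond this are below exp(-100 pi) and negligible
    return 1.0 + 2.0 * sum(math.exp(-a * n * n) for n in range(1, n_max + 1))

def z1_integral_1d(ratio):
    """Replacing the sum by an integral over n gives sqrt(pi / a) = L / lambda."""
    return 1.0 / ratio

for ratio in (2.0, 1.0, 0.5, 0.1):
    exact = z1_sum_1d(ratio) ** 3      # the 3D sum factorises into three identical 1D sums
    approx = z1_integral_1d(ratio) ** 3
    print(f"lambda/L = {ratio:4.1f}   Z1 (sum) = {exact:.6g}   V/lambda^3 = {approx:.6g}")
```

For $\lambda/L = 0.5$ the two already agree to a few parts in $10^5$, while for $\lambda \gtrsim L$ the sum is dominated by the single term $n_1 = n_2 = n_3 = 0$ and the integral approximation fails.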

One way to find the density of states is to use the definition (116) directly. For the single particle in a box that would be,

$$g(E) = \sum_{\mathbf{k}} \delta(E - E_{\mathbf{k}}). \tag{236}$$

However, we are going to take a slightly different approach. Recall that the density of states is designed so that, for any function $f(E)$ of the energy summed over all microstates, we can write,

$$\sum_{\mathbf{k}} f(E_{\mathbf{k}}) = \int dE\; g(E)\, f(E). \tag{237}$$

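In order to approximate the density of states, we imagine that we need to perform a sum whose terms depend only on the energy levels,

$$\sum_{\mathbf{k}} f(E_{\mathbf{k}}) = \frac{V}{(2\pi)^3}\sum_{\mathbf{k}} (\Delta k)^3\, f(E_{\mathbf{k}}), \qquad \Delta k = \frac{2\pi}{L}, \tag{238}$$

where we introduced the spacing $\Delta k = 2\pi/L$ of the quantisation condition in equation (234) and the volume $V = L^3$. In the large volume limit we see that $\Delta k \to 0$, and we can approximate the sum by an integral in three dimensions,

$$\begin{aligned}
\sum_{\mathbf{k}} f(E_{\mathbf{k}}) &\approx \frac{V}{(2\pi)^3}\int d^3k\; f(E(k)) \\
&= \frac{V}{2\pi^2}\int_0^\infty dk\; k^2\, f(E(k)),
\end{aligned}\tag{239}$$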

where in the second line we exploited the fact that the energy only depends on the modulus $k$ of the wavevector and introduced spherical coordinates. Integrating over the angular directions yielded a factor of $4\pi$. In order to read off the density of states, we now trade the modulus of the wavevector for the energy by inverting the dispersion relation to write $k = k(E)$. Our integral then becomes,

$$\sum_{\mathbf{k}} f(E_{\mathbf{k}}) \approx \frac{V}{2\pi^2}\int dE\; k(E)^2\, \frac{dk}{dE}\, f(E). \tag{240}$$

This suggests that, according to the alternative definition of equation (237), in our approximation the density of states is

$$g(E) = \frac{V}{2\pi^2}\, k(E)^2\, \frac{dk}{dE}, \tag{241}$$

allowing us to write,

$$\sum_{\mathbf{k}} f(E_{\mathbf{k}}) \approx \int dE\; g(E)\, f(E). \tag{242}$$

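Of particular interest to us is going to be the non-relativistic particle. Inverting its dispersion relation (232) gives $k(E) = \sqrt{2mE}/\hbar$, which we can use in (241) to obtain the density of states,

$$g(E) = \frac{V}{4\pi^2}\left(\frac{2m}{\hbar^2}\right)^{3/2} E^{1/2}. \tag{243}$$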

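Applying the same logic to the relativistic particle, whose dispersion relation (233) gives $k(E) = \sqrt{E^2 - m^2c^4}/\hbar c$, we obtain the density of states, valid for $E \geq mc^2$,

$$g(E) = \frac{V E}{2\pi^2\hbar^3 c^3}\sqrt{E^2 - m^2c^4}. \tag{244}$$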
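We can test (243) by brute force, as a sketch with our own function names: count the microstates with energy below $E$ and compare with $\int_0^E dE'\, g(E') = \tfrac{V}{6\pi^2}\left(2mE/\hbar^2\right)^{3/2}$. Measuring energies in units of $2\pi^2\hbar^2/(mL^2)$, a state has energy $n_1^2 + n_2^2 + n_3^2$, and the integrated density of states becomes $\tfrac{4\pi}{3}E^{3/2}$, the volume of a sphere of radius $\sqrt{E}$ in the space of integers.

```python
import math

def count_states(e_max):
    """Number of integer triples with n1^2 + n2^2 + n3^2 <= e_max (e_max a non-negative int)."""
    r = math.isqrt(e_max)
    total = 0
    for n1 in range(-r, r + 1):
        for n2 in range(-r, r + 1):
            rem = e_max - n1 * n1 - n2 * n2
            if rem >= 0:
                total += 2 * math.isqrt(rem) + 1   # n3 runs from -isqrt(rem) to +isqrt(rem)
    return total

def continuum_estimate(e_max):
    """Integral of g(E) from 0 to e_max in these units: (4 pi / 3) e_max^(3/2)."""
    return 4.0 * math.pi / 3.0 * e_max ** 1.5

for e_max in (10, 100, 900, 10000):
    exact, approx = count_states(e_max), continuum_estimate(e_max)
    print(f"E = {e_max:6d}   states = {exact:8d}   integral of g(E) = {approx:10.1f}")
```

On average, the relative agreement improves as $E$ grows, i.e. precisely in the regime where many states lie below $E$ and the continuum approximation is justified.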

3.3 Bosons vs Fermions

Apart from their mass and electric charge, elementary particles are characterised by an additional number, the spin, which is a purely quantum mechanical property. Bosons have integer spin while Fermions have half-integer spin.

The Higgs boson is a spin-$0$ particle, while examples of spin-$\tfrac{1}{2}$ particles are the electron, the quarks and the neutrinos. Spin-$1$ particles include the photon, which is responsible for the electromagnetic interaction, and the gluons, which carry the strong nuclear force of QCD. There are no known spin-$\tfrac{3}{2}$ elementary particles, while the graviton, the hypothetical particle that would mediate the gravitational force, has spin equal to $2$.

From the point of view of statistical physics, Bosons and Fermions follow very different rules. At a more fundamental level, this is a consequence of the spin-statistics theorem, whose proof requires the techniques of Quantum Field Theory. From the point of view of quantum mechanics, it simply states that under the exchange of two identical:

- Fermions, the quantum state vector is antisymmetric (it changes sign)
- Bosons, the quantum state vector is symmetric (it stays the same)

Reading these statements slightly differently we can say that,

- Two identical Fermions cannot occupy the same single particle microstate
- Two or more identical Bosons can occupy the same single particle microstate
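To see how the exchange symmetry leads to the exclusion rule, here is a small sketch using one-dimensional plane waves of the form (230) in a periodic box; the one-dimensional setting, the box size and the sample positions are illustrative assumptions. With one particle in state $n_a$ and the other in state $n_b$, the symmetric and antisymmetric combinations are $\phi_{n_a}(x_1)\phi_{n_b}(x_2) \pm \phi_{n_b}(x_1)\phi_{n_a}(x_2)$, and the antisymmetric one vanishes identically when $n_a = n_b$.

```python
import cmath
import math

L = 1.0  # box side in arbitrary units (illustrative choice)

def phi(n, x):
    """Normalised 1D plane wave in a periodic box of side L, the 1D analogue of (230)."""
    return cmath.exp(2j * math.pi * n * x / L) / math.sqrt(L)

def two_particle_state(n_a, n_b, x1, x2, sign):
    """Unnormalised two-particle wavefunction with the particles in states n_a and n_b.

    sign = +1 gives the symmetric (Boson) combination, sign = -1 the antisymmetric (Fermion) one.
    """
    return phi(n_a, x1) * phi(n_b, x2) + sign * phi(n_b, x1) * phi(n_a, x2)

x1, x2 = 0.13, 0.71
print(two_particle_state(1, 2, x1, x2, -1), two_particle_state(1, 2, x2, x1, -1))  # differ by a sign
print(two_particle_state(3, 3, x1, x2, -1))   # Fermions in the same state: vanishes
print(two_particle_state(3, 3, x1, x2, +1))   # Bosons in the same state: non-zero
```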

To summarise what we have seen so far in this course, we can have three different kinds of identical particles:

- Distinguishable particles
- Indistinguishable Fermions, which cannot occupy the same single particle microstate
- Indistinguishable Bosons, which can occupy the same single particle microstate

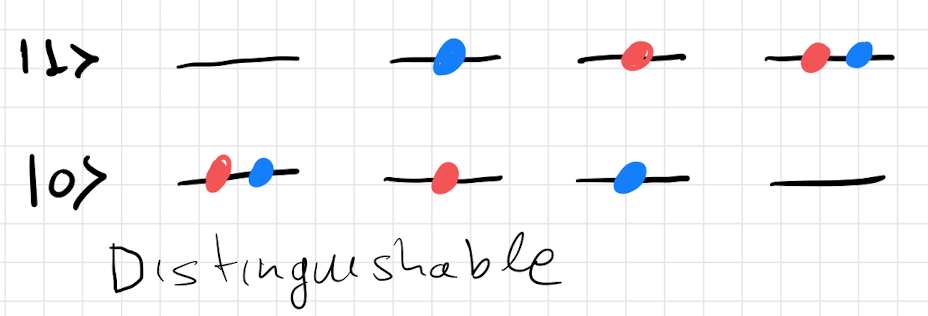

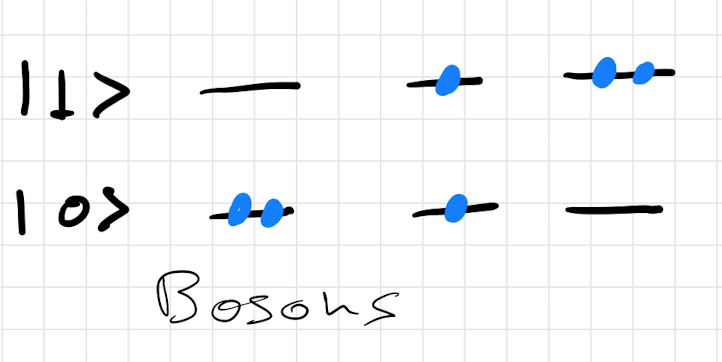

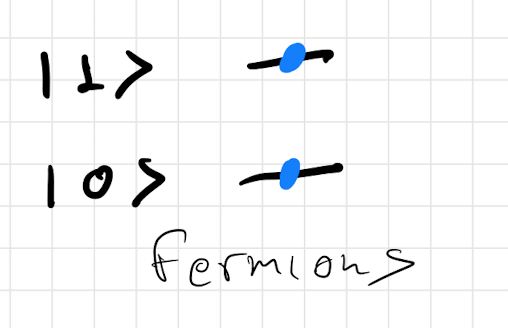

As an example of how this works, we will consider a simple system which has two single particle states. We then want to list the different configurations we can come up with for each of the three classes of particles. The corresponding configurations are shown in figure 10.
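The counting behind such a figure can be sketched with Python's `itertools`. Below we assume two particles and label the two single particle states `"a"` and `"b"` (both choices are ours, for illustration): ordered tuples describe distinguishable particles, multisets describe Bosons, and sets of distinct states describe Fermions.

```python
from itertools import combinations, combinations_with_replacement, product

def configurations(n_particles, states):
    """Enumerate many-particle configurations for the three classes of particles."""
    return {
        # Labelled particles: the tuple records which state each particle occupies.
        "distinguishable": list(product(states, repeat=n_particles)),
        # Identical Bosons: only the multiset of occupied states matters, repeats allowed.
        "bosons": list(combinations_with_replacement(states, n_particles)),
        # Identical Fermions: a set of distinct occupied states (no double occupation).
        "fermions": list(combinations(states, n_particles)),
    }

for kind, items in configurations(2, ["a", "b"]).items():
    print(kind, len(items), items)
```

For $N$ particles and $M$ single particle states these lists have $M^N$, $\binom{M+N-1}{N}$ and $\binom{M}{N}$ elements respectively.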

3.4 Bosons

In this subsection we will consider a general system of non-interacting Bosons. In order to give a general description, we will denote the $r$-th single particle microstate by $|r\rangle$ and the corresponding energy level by $\varepsilon_r$. Since Bosons are indistinguishable, the microstates of a system of $N$ particles are characterised by an infinite set of integers $n_r \geq 0$, where $n_r$ is the number of particles in the single particle microstate $|r\rangle$. The integers $n_r$ are called the occupation numbers of the single particle microstates. The constraint these integers need to satisfy is,

$$\sum_r n_r = N, \tag{245}$$

yielding the corresponding total energy,

$$E = \sum_r n_r\,\varepsilon_r. \tag{246}$$

The $N$-body canonical partition function can be written as,

$$Z_N = \sum_{\{n_r\}} e^{-\beta\sum_r n_r\varepsilon_r}, \tag{247}$$

where the sum is performed over all sets of integers $\{n_r\}$ satisfying the constraint (245).

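Because of the constraint (245), this sum is highly non-trivial. The situation is significantly simplified by considering the grand canonical ensemble instead, where we also sum over the particle number $N$, so that the constraint is lifted. To see this simplification, we recall the relation between the grand canonical and the canonical partition functions derived in section 1.3,

$$\mathcal{Z} = \sum_{N=0}^{\infty} e^{\beta\mu N} Z_N = \sum_{N=0}^{\infty}\;\sum_{\{n_r\}:\ \sum_r n_r = N} e^{-\beta\sum_r n_r(\varepsilon_r - \mu)} \tag{250}$$

$$= \sum_{\{n_r\}} \prod_r e^{-\beta n_r(\varepsilon_r - \mu)} = \prod_r \sum_{n_r=0}^{\infty} e^{-\beta n_r(\varepsilon_r - \mu)} \tag{253}$$

$$= \prod_r \mathcal{Z}_r. \tag{254}$$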

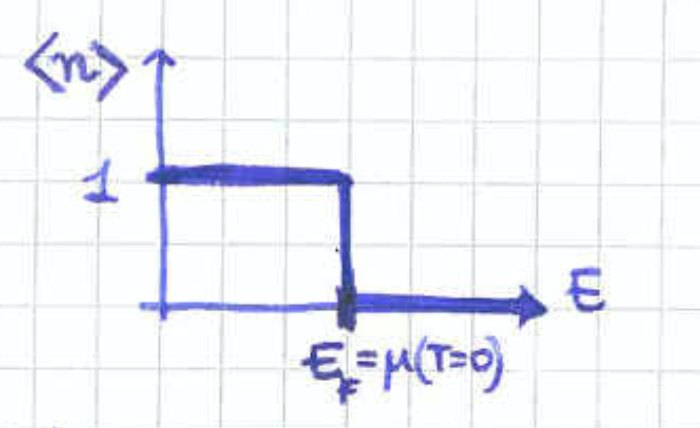

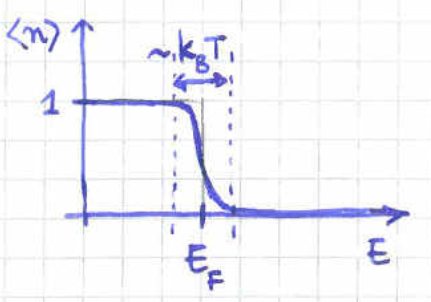

In the above, we defined the grand canonical partition function corresponding to the single particle microstate $|r\rangle$ according to,

$$\mathcal{Z}_r = \sum_{n=0}^{\infty} e^{-\beta n(\varepsilon_r - \mu)}. \tag{255}$$
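The factorisation in (250) to (254) can be checked numerically on a toy spectrum, as a sketch with hypothetical values for the energies, $\beta$ and $\mu$ (the function names are ours as well). The first route builds the canonical partition functions $Z_N$ from the constrained sum (247) and then sums $e^{\beta\mu N}Z_N$ up to a cutoff in $N$. The second evaluates each $\mathcal{Z}_r$ in (255) as a geometric series, which converges only if $\mu$ lies below every $\varepsilon_r$.

```python
import math

def occupations(n_total, n_levels):
    """All tuples of non-negative occupation numbers (n_1, ..., n_levels) summing to n_total."""
    if n_levels == 1:
        yield (n_total,)
        return
    for first in range(n_total + 1):
        for rest in occupations(n_total - first, n_levels - 1):
            yield (first,) + rest

def canonical_Z(n_particles, energies, beta):
    """Constrained sum (247): occupation sets with sum_r n_r = N, weighted by exp(-beta E)."""
    return sum(
        math.exp(-beta * sum(n * e for n, e in zip(occ, energies)))
        for occ in occupations(n_particles, len(energies))
    )

def grand_Z_from_canonical(energies, beta, mu, n_max):
    """Truncation of sum_N exp(beta mu N) Z_N at N = n_max."""
    return sum(math.exp(beta * mu * n) * canonical_Z(n, energies, beta) for n in range(n_max + 1))

def grand_Z_factorised(energies, beta, mu):
    """Product over levels of the geometric series (255), summed in closed form; needs mu < eps_r."""
    result = 1.0
    for e in energies:
        result /= 1.0 - math.exp(-beta * (e - mu))
    return result

energies = [0.0, 0.5, 1.3]   # hypothetical single particle energies
beta, mu = 1.0, -0.5         # hypothetical inverse temperature and chemical potential (mu below all levels)
print(grand_Z_from_canonical(energies, beta, mu, n_max=60))
print(grand_Z_factorised(energies, beta, mu))
```

The two numbers agree to high precision once the cutoff in $N$ is large enough; the point is that the second route never has to deal with the constraint (245).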