2 The action principle

We will start with the Lagrangian formulation. The underlying physical principle behind this formulation can be traced back to the idea that for some physical processes, the natural answer to the question “what is the trajectory that a particle follows” is something like “the most efficient one”. Our goal in this section is to understand, in a precise sense, how to characterize this notion of efficiency.

A fundamental example is the free particle in flat space: its motion is along a straight path. What makes the straight path special? The answer is well known: the straight path is the one that minimizes the distance travelled between the initial and final points. Assuming that the particle moves at constant speed, this is equivalent to saying that the particle follows the trajectory that minimizes the total travel time.

This second formulation connects with Fermat’s principle, which states that the path that a ray of light takes when moving through a medium is the one that minimizes the time spent by the light beam. More precisely, one should impose that the time is stationary (we will define this precisely below) under small variations of the path.

These two examples suggest a natural question: is there always some quantity, in problems of classical mechanics, that is minimized along physical motion? The answer is that there is indeed such a quantity, known as the action. We will now explain how to determine the equations of motion from the action, and then determine the form of the action that reproduces classical Newtonian physics.

Our basic tool will be the “Calculus of variations”, which we now describe.

2.1 Calculus of variations

Let us start by reviewing how to find the maxima and minima of a function \(f(s)\colon \mathbb{R}\to\mathbb{R}\). As you will recall, this can be done by solving the equation \[\frac{df}{ds} = 0\] as a function of \(s\). As an example, if our function is \(f(s)=\frac{1}{2}s^2-s\) we have \[\frac{df}{ds} = s-1\] so the function has an extremum (a minimum, in this case) at \(s=1\). An alternative way of formulating the same condition makes use of the definition of derivative as encoding the change in the function under small changes in \(s\). For a small \(\delta s\in\mathbb{R}\), we have \[f(s+\delta s) = f(s) + \frac{df(s)}{ds}\delta s + R(s, \delta s)\] where \(R(s, \delta s)\) is an error term. It is convenient to introduce the notation \[\delta f :=f(s+\delta s) - f(s)\] so the statement above becomes \[\delta f = \frac{df(s)}{ds}\delta s + R(s, \delta s)\, .\] We note that the usual definition of the derivative implies \[\lim_{\delta s\to 0} \frac{R(s, \delta s)}{\delta s} = 0\, .\] In these cases we say that “\(\delta f\) vanishes to first order in \(\delta s\)”. The functions that we will study will almost always admit a well-behaved Taylor expansion, so this result implies that \(R(s, \delta s)\) is at least of quadratic order in \(\delta s\). Henceforth we will encode this vanishing to first order by writing \(\mathcal{O}((\delta s)^2)\) instead of \(R(s, \delta s)\).

So, finally, we can say that the extrema of \(f(s)\) are located at the points where \[\delta f = \mathcal{O}((\delta s)^2)\, .\]
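As a quick numerical illustration (a sketch, not part of the formal argument), we can check this statement for the example \(f(s)=\frac{1}{2}s^2-s\) in Python. The helper names `f`, `df` and `remainder` are our own. Since this \(f\) is quadratic, the error term is exactly \(R(s,\delta s)=\frac{1}{2}(\delta s)^2\), so at the minimum \(s=1\) the change \(\delta f\) is purely second order, while elsewhere \(R/\delta s\) tends to zero.

```python
# Numerical check of delta f = f'(s) delta s + O((delta s)^2)
# for the example f(s) = s^2/2 - s from the text.

def f(s):
    return 0.5 * s**2 - s

def df(s):
    # Exact derivative f'(s) = s - 1, which vanishes at the minimum s = 1.
    return s - 1.0

def remainder(s, ds):
    """Error term R(s, ds) = f(s + ds) - f(s) - f'(s) ds."""
    return f(s + ds) - f(s) - df(s) * ds

for ds in (1e-1, 1e-2, 1e-3):
    delta_f_at_min = f(1.0 + ds) - f(1.0)      # ds**2 / 2, up to rounding
    ratio_elsewhere = remainder(2.0, ds) / ds  # ds / 2, so it tends to 0
    print(ds, delta_f_at_min, ratio_elsewhere)
```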

The same reasoning can be applied in the case of functions of multiple variables. Consider a function \(f(s_1, \ldots, s_N)\colon \mathbb{R}^N\to \mathbb{R}\), and introduce a small displacement \(s_i\to s_i+\delta s_i\). In this case the partial derivatives \(\partial f/\partial s_i\) are defined by \[\delta f = \sum_{i=1}^N \frac{\partial f}{\partial s_i}\delta s_i + \mathcal{O}(\delta s^2)\] where the error term includes terms vanishing faster than \(\delta s_i\) (so terms of the form \(\delta s_1^2\), \(\delta s_1\delta s_2,\ldots\)). Stationary points of \(f\) are located wherever \(\delta f\) vanishes to first order in \(\delta s_i\).

In fact, we need to go one step further, and work with functionals: these are maps from functions to \(\mathbb{R}\). One (heuristic, but often useful) way of thinking of them is as the limit of the previous multi-variate case when the number of variables \(N\) goes to infinity. For instance, we could have a functional \(S[y(t)]\) defined by \[S[y(t)] = \int_{a}^b y(t)^2 \, dt\] for some fixed choice of \((a,b)\). I emphasize that one should think of \(S\) as the analogue of \(f\) above, and the different functions \(y(t)\) as the “points” in the domain of this functional.

We want to define a meaning for a function \(y(t)\) to give an extremal value for the functional \(S\). In analogy with what happened in the finite dimensional case above, we can study the variation of \(S\) as we displace \(y(t)\) slightly. We need to be a bit careful when specifying which class of functions \(y(t)\) we are going to include in our extremization problem. In the case of interest to us, we will extremize over the set of smooth1 functions \(y(t)\) with fixed values at the endpoints \(a\) and \(b\). That is, we fix \(y(a)=y_a\) and \(y(b)=y_b\), for some fixed values of \(y_a\) and \(y_b\).

We say that a function \(y(t)\) is stationary (for the functional \(S\)) if \[\left.\frac{dS[y(t)+\epsilon z(t)]}{d\epsilon}\right|_{\epsilon=0}=0\] for all smooth \(z(t)\) such that \(z(a)=z(b)=0\).

This definition encodes the idea that the path \(y(t)\) extremises the action \(S\) in the space of paths starting at \(y_a\) and ending at \(y_b\). To see this, think of the function \(z(t)\) as an arbitrary choice of direction in the space of deformations. The condition is then saying that \(S[y(t)]\) is extremised along this direction. Since \(z(t)\) is arbitrary, we have that the condition is satisfied in every direction in function space. It might help to compare with how you could impose that an ordinary function \(f({\mathbf x})\) depending on a vector \(\mathbf x\) has an extremum at \(\mathbf{x}_0\). You could impose \[\left.\frac{df(\mathbf{x}_0 +\epsilon \mathbf{z})}{d\epsilon}\right|_{\epsilon = 0} = \left[\frac{d}{d\epsilon}\left(f(\mathbf{x}_0) + \epsilon \sum_{i} z_i \left.\frac{\partial f(\mathbf{x})}{\partial x_i}\right|_{\mathbf{x}=\mathbf{x}_0} + \mathcal{O}(\epsilon^2)\right)\right]_{\epsilon=0} = \sum_{i=1}^n z_i \left.\frac{\partial f(\mathbf{x})}{\partial x_i}\right|_{\mathbf{x}=\mathbf{x}_0} = 0\] for every choice of vector \(\mathbf{z}\). This is equivalent to imposing the familiar condition \[\left.\frac{\partial f(\mathbf{x})}{\partial x_i}\right|_{\mathbf{x}=\mathbf{x}_0} = 0\] for all \(i\), which ensures the existence of an extremum (or saddle point) at \(\mathbf{x}=\mathbf{x}_0\).

Consider the Taylor expansion in \(\epsilon\) (which is a constant) of \(S[y(t)+\epsilon z(t)]\): \[S[y(t)+\epsilon z(t)] = S[y(t)] + \epsilon \left.\frac{d S[y(t)+\epsilon z(t)]}{d \epsilon}\right|_{\epsilon=0} + \frac{1}{2}\epsilon^2 \left.\frac{d^2 S[y(t)+\epsilon z(t)]}{d\epsilon^2}\right|_{\epsilon=0} + \ldots\] The condition for \(y(t)\) to be stationary is that the term proportional to \(\epsilon\) vanishes: \[\delta S :=S[y(t)+\epsilon z(t)] - S[y(t)] = \mathcal{O}(\epsilon^2)\, .\]
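To make the definition of a stationary path concrete, here is a small Python sketch (our own discretization, not something prescribed by the text) for the example functional \(S[y]=\int_0^1 y(t)^2\,dt\) with \(y(0)=y(1)=0\). It approximates the integral with the trapezoidal rule and estimates \(dS[y+\epsilon z]/d\epsilon\) at \(\epsilon=0\) with a central difference. For this functional the exact answer is \(2\int_0^1 y z\, dt\), so \(y=0\) is stationary while \(y=\sin(\pi t)\) is not: with \(z=t(1-t)\) one finds \(8/\pi^3\).

```python
import math

def trapezoid(values, h):
    """Composite trapezoidal rule for equally spaced samples."""
    return h * (0.5 * values[0] + sum(values[1:-1]) + 0.5 * values[-1])

def action(y, a=0.0, b=1.0, n=2000):
    """Discretized version of S[y] = integral_a^b y(t)^2 dt."""
    h = (b - a) / n
    ts = [a + k * h for k in range(n + 1)]
    return trapezoid([y(t) ** 2 for t in ts], h)

def first_variation(y, z, eps=1e-4):
    """Central-difference estimate of dS[y + eps z]/d eps at eps = 0."""
    plus = action(lambda t: y(t) + eps * z(t))
    minus = action(lambda t: y(t) - eps * z(t))
    return (plus - minus) / (2 * eps)

z = lambda t: t * (1 - t)  # a deformation vanishing at both endpoints
print(first_variation(lambda t: 0.0, z))                    # 0.0: stationary
print(first_variation(lambda t: math.sin(math.pi * t), z))  # close to 8/pi^3
```

Since \(S[y+\epsilon z]\) is quadratic in \(\epsilon\) here, the central difference has no truncation error in \(\epsilon\); the only approximation is the quadrature in \(t\).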

It is useful to think of the combination \(\epsilon z(t)\) as a small variation of \(y(t)\), which we denote \(\delta y(t):=\epsilon z(t)\). We define \(\mathcal{O}((\delta y(t))^n)\) to mean simply \(\mathcal{O}(\epsilon^n)\). In particular, we can rewrite the stationary condition as \[\delta S = \mathcal{O}((\delta y(t))^2)\, .\]

If you are ever confused about the expansions in \(\delta y(t)\) below, you can replace \(\delta y(t)\) with \(\epsilon z(t)\), and expand in the constant \(\epsilon\). For instance, consider the integral \[\int g(t) (\delta y(t))^n dt\] for any positive integer \(n\) and any function \(g(t)\). I claim that this is \(\mathcal{O}((\delta y(t))^n)\). The proof is as follows: replacing \(\delta y(t)\) with \(\epsilon z(t)\) we have \[\int g(t) \epsilon^n z(t)^n dt = \epsilon^n \int g(t) z(t)^n dt\, .\] (Here we have used that \(\epsilon\) is a constant.) In our \(\mathcal{O}((\delta y(t))^n)\) notation, this means that \[\int \mathcal{O}((\delta y(t))^n) dt = \mathcal{O}((\delta y(t))^n)\, .\] That is, integration does not change the order in \(\delta y(t)\) (which, once more, when talking about action functionals I define to be simply the order in \(\epsilon\)).

Because of our interest in dynamical problems, we will often refer to the functions \(y(t)\) as paths, so that the conditions above define what a “stationary path” is.

We are now in a position to introduce the action principle. Assume that we have an action functional (or simply action) \(S\colon \{\mathrm{functions}\}\to \mathbb{R}\), which takes functions, and generates a real number. In the Lagrangian formalism all of the physical content of the theory can be summarized in the choice of action functional.

For now we also assume that we have a particle moving in one dimension, and we want to determine its motion. Its trajectory is given by a function \(x(t)\), with \(t\) the time coordinate. For many physical problems the equations of motion are second-order differential equations for \(x(t)\), so we need data to fix two integration constants. In the Lagrangian formalism these are given by fixing the initial and final positions. That is, we will assume that we know the initial position \(x(t_0)\) of the particle at time \(t_0\), and its final position \(x(t_1)\) at time \(t_1\).

The action principle2 then states that for arbitrary smooth small deformations \(\delta x(t)\) around the “true” path \(x(t)\) (that is, the path that the particle will actually follow) we have \[\delta S :=S[x+\delta x] - S[x] = \mathcal{O}((\delta x)^2)\, .\]

Or in other words:

Action principle:

It is possible to choose an action functional \(S[x(t)]\) such that the paths described by physical particles are stationary paths of \(S\).

In a moment we will need an important result known as the fundamental lemma of the calculus of variations. It goes as follows:

[Fundamental lemma of the calculus of variations] Consider a function \(f(x)\) continuous in the interval \([a,b]\) (we assume \(a<b\)) such that \[\int_a^b f(x) g(x) \, dx = 0\] for all smooth functions \(g(x)\) in \([a,b]\) such that \(g(a)=g(b)=0\). Then \(f(x)=0\) for all \(x\in (a,b)\).

I will prove the result by contradiction. Assume that there is a point \(p\in (a,b)\) such that \(f(p) > 0\) (the case \(f(p) <0\) can be proven analogously). By continuity of \(f(x)\), there exist some \(\varepsilon > 0\) and an interval \([p_0,p_1]\subset (a,b)\) with \(p_0<p_1\), containing \(p\), such that \(f(x)>\varepsilon\) for all \(x\in[p_0,p_1]\). Construct \[g(x) = \begin{cases} \nu(x-p_0)\nu(p_1-x) & \text{if } x\in (p_0,p_1)\\ 0 & \text{otherwise.} \end{cases}\] with \(\nu(x)=\exp(-1/x)\). It is an interesting exercise in first year calculus to prove that this function is smooth everywhere, including at \(p_0\) and \(p_1\) (it is an example of a “bump function”, a construction that is useful in many domains). Since \([p_0,p_1]\subset(a,b)\), we also have \(g(a)=g(b)=0\), so \(g\) is an allowed choice in the lemma. Clearly \(f(x)g(x)>0\) for \(x\in(p_0,p_1)\), and it vanishes otherwise. This implies that \[\int_a^b f(x) g(x)\, dx = \int_{p_0}^{p_1} f(x) g(x) \, dx\, > \, \varepsilon \int_{p_0}^{p_1} g(x)\, dx \, > \, 0\] which is in contradiction with the initial assumption \(\int_a^b f(x) g(x)\, dx = 0\).
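The bump function used in the proof is easy to explore numerically. The following Python sketch, with hypothetical helper names and an arbitrarily chosen \(f(x)=x-0.3\) on \([0,1]\), builds \(g\) from \(\nu\). It extends \(\nu\) by zero for non-positive arguments, so that \(g\) vanishes outside \((p_0,p_1)\) automatically, and checks the chain of inequalities in the proof with a midpoint-rule integral.

```python
import math

def nu(x):
    """nu(x) = exp(-1/x) for x > 0, extended by 0 for x <= 0."""
    return math.exp(-1.0 / x) if x > 0 else 0.0

def bump(x, p0, p1):
    """g(x) = nu(x - p0) * nu(p1 - x): positive on (p0, p1), zero elsewhere."""
    return nu(x - p0) * nu(p1 - x)

def integrate(fn, a, b, n=4000):
    """Midpoint-rule approximation of the integral of fn over [a, b]."""
    h = (b - a) / n
    return h * sum(fn(a + (k + 0.5) * h) for k in range(n))

f = lambda x: x - 0.3          # continuous, with f(p) > 0 at p = 0.75
p0, p1, eps = 0.6, 0.9, 0.2    # f(x) > eps on [p0, p1]
g = lambda x: bump(x, p0, p1)

lhs = integrate(lambda x: f(x) * g(x), 0.0, 1.0)
bound = eps * integrate(g, 0.0, 1.0)
print(lhs > bound > 0.0)   # True
```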

Now, for the systems that we will study during this term, it will be the case that \(S\) can be expressed in a particularly nice way as the time integral of a Lagrangian. That is, we will have \[S[x] = \int_{t_0}^{t_1} \! dt \, L(x(t), \dot{x}(t))\] for some function \(L(r,s)\) of two real variables, where \(\dot{x}(t):=\frac{dx}{dt}\).

Whenever a Lagrangian exists, the variational principle together with the fundamental lemma of the calculus of variations leads to a set of differential equations that determine \(x(t)\). The argument is as follows. If we Taylor expand the perturbed Lagrangian to first order in \(\delta x(t)\) we get3 \[L(x(t)+\delta x(t), \dot{x}(t)+\delta \dot{x}(t)) = L(x(t),\dot{x}(t)) + \frac{\partial L}{\partial x}\delta x(t) + \frac{\partial L}{\partial\dot x} \delta \dot{x}(t) + \ldots\] Putting this expansion of the Lagrangian into the variation of the action we have \[\begin{equation} \begin{split} \delta S &= \int_{t_0}^{t_1} \! dt \, L(x(t)+\delta x(t), \dot{x}(t)+\delta \dot{x}(t)) - L(x(t), \dot{x}(t))\\ & = \int_{t_0}^{t_1}\! dt\, \left(\frac{\partial L}{\partial x}\delta x(t) + \frac{\partial L}{\partial \dot{x}}\delta \dot{x}(t)\right) \end{split} \label{eq:Lagrangian-variation} \end{equation}\] where we have omitted terms of second order or higher in \(\delta x\).4 For notational simplicity I will often write \(\partial L/\partial x\) instead of the more precise but much more cumbersome \[\frac{\partial L(r, s)}{\partial r}\biggr|_{(r,s)=(x(t),\dot{x}(t))}\] where \((r,s)\) are names for the two arguments of the Lagrangian \(L\) (which are conventionally, but somewhat confusingly, also named \(x\) and \(\dot{x}\), a convention that I will follow most of the time… but here I want to be as clear as possible about what I mean). Similarly \[\frac{\partial L}{\partial \dot{x}}:=\frac{\partial L(r, s)}{\partial s}\biggr|_{(r,s)=(x(t),\dot{x}(t))}\, .\]

We proceed by noting that \(\delta{\dot x(t)}=\frac{d}{dt}(\delta x(t))\), so we can write the above as \[\delta S = \int_{t_0}^{t_1}\! dt\, \left(\frac{\partial L}{\partial x}\delta x(t) + \frac{\partial L}{\partial \dot{x}}\frac{d}{dt}\delta x(t)\right)\, .\] Integration by parts of the second term5 now allows us to rewrite this as \[\delta S = \int_{t_0}^{t_1}\! dt\, \left[\left(\frac{\partial L}{\partial x} - \frac{d}{dt}\left(\frac{\partial L}{\partial \dot{x}}\right)\right)\delta x(t) + \frac{d}{dt}\left(\delta x(t)\frac{\partial L}{\partial \dot{x}}\right)\right]\, .\] The last term is a total derivative, so we can integrate it trivially to give: \[\delta S = \left[\delta x(t)\frac{\partial L}{\partial \dot{x}}\right]_{t_0}^{t_1} + \int_{t_0}^{t_1}\! dt\, \left(\frac{\partial L}{\partial x} - \frac{d}{dt}\left(\frac{\partial L}{\partial \dot{x}}\right)\right)\delta x(t)\, .\] Now we have that \(\delta x(t_0)=\delta x(t_1)=0\), as the paths that we consider all start and end on the same positions. This implies that the first term vanishes, so \[\delta S = \int_{t_0}^{t_1}\! dt\, \left(\frac{\partial L}{\partial x} - \frac{d}{dt}\left(\frac{\partial L}{\partial \dot{x}}\right)\right)\delta x(t)\, .\]

Recall that the action principle demands that this variation vanishes (to first order in \(\delta x(t)\), i.e. ignoring possible higher-order terms that we have not written) for arbitrary \(\delta x(t)\). By the fundamental lemma of the calculus of variations, the only way that this could possibly be true is if the function multiplying \(\delta x(t)\) in the integral vanishes:

\[\frac{\partial L}{\partial x} - \frac{d}{dt}\left(\frac{\partial L}{\partial \dot{x}}\right) = 0\, .\]

This is known as the Euler-Lagrange equation for one-dimensional problems.
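A numerical sanity check of this result, under assumptions of our own choosing: take the Lagrangian \(L=\frac{1}{2}m\dot{x}^2-\frac{1}{2}kx^2\) with \(m=k=1\) on \([t_0,t_1]=[0,1]\), for which the Euler-Lagrange equation is \(\ddot{x}+x=0\). The Python sketch below discretizes the action and estimates \(\delta S/\epsilon\) for the deformation \(\delta x=\epsilon\sin(\pi t)\), which vanishes at both endpoints. For the solution \(x(t)=\sin t\) the estimate is zero up to discretization error, while for the straight line with the same endpoints it approaches \(\int_0^1 \left(\frac{\partial L}{\partial x}-\frac{d}{dt}\frac{\partial L}{\partial\dot x}\right) z\,dt = -\sin(1)/\pi\).

```python
import math

def discrete_action(path, t0=0.0, t1=1.0, n=1000, m=1.0, k=1.0):
    """Discretize S = integral of (m/2) xdot^2 - (k/2) x^2 over [t0, t1].

    On each step xdot is a forward difference and x is the average of the
    two endpoint values (a midpoint-style rule, chosen for illustration).
    """
    h = (t1 - t0) / n
    xs = [path(t0 + j * h) for j in range(n + 1)]
    total = 0.0
    for j in range(n):
        v = (xs[j + 1] - xs[j]) / h
        x_mid = 0.5 * (xs[j] + xs[j + 1])
        total += h * (0.5 * m * v**2 - 0.5 * k * x_mid**2)
    return total

def first_variation(path, z, eps=1e-3):
    """Central-difference estimate of dS[x + eps z]/d eps at eps = 0."""
    plus = discrete_action(lambda t: path(t) + eps * z(t))
    minus = discrete_action(lambda t: path(t) - eps * z(t))
    return (plus - minus) / (2 * eps)

z = lambda t: math.sin(math.pi * t)    # z(0) = z(1) = 0
true_path = math.sin                   # solves x'' + x = 0
straight = lambda t: math.sin(1.0) * t # same endpoints, not a solution

print(first_variation(true_path, z))   # close to 0
print(first_variation(straight, z))    # close to -sin(1)/pi
```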

There is a somewhat subtle point in the Lagrangian formulation that I want to make explicit. Note that the Lagrangian \(L\) is an ordinary function of two parameters, and it knows nothing about paths. (In general it is a function of \(2N\) parameters, with \(N\) the number of “generalised coordinates” that we need to describe the system, see below.) Let me emphasize this by writing \(L(r,s)\). When using the Lagrangian function to construct the action we evaluated the Lagrangian function at \((r,s)=(x(t), \dot{x}(t))\) at each instant in time, but it is important to keep in mind that the Lagrangian itself treats \(r\) and \(s\) as independent variables: they are simply the two arguments to the function.

In general, if we want to study how this function changes under small displacements of \(r\) and \(s\) we would use the chain rule: \[L(r+\delta r, s+\delta s) = L(r,s) + \frac{\partial L}{\partial r}\delta r + \frac{\partial L}{\partial s}\delta s + \ldots\] where the dots denote terms of higher order in \(\delta r\) and \(\delta s\). This is what we did above in \(\eqref{eq:Lagrangian-variation}\), again with \((r,s)=(x(t), \dot{x}(t))\).

What this all means is that the partial derivatives appearing in the Euler-Lagrange equations treat the first and second arguments of the Lagrangian function independently, leading to the somewhat funny-looking rules: \[\begin{equation} \label{eq:Lagrangian-x-xdot-partials} \frac{\partial x}{\partial \dot{x}} = \frac{\partial \dot{x}}{\partial x} = 0\, . \end{equation}\] This would probably be a little clearer if we used a different notation for \(\dot{x}\) (such as \(v\)) when writing Lagrangians, to emphasize that in the Lagrangian formalism \(\dot{x}\) should be treated as a variable which is entirely independent of \(x\) itself. But I will stick to the standard (if somewhat puzzling at first) notation, with the understanding that in the Lagrangian formalism one should impose \(\eqref{eq:Lagrangian-x-xdot-partials}\).

This also makes clear that \(\eqref{eq:Lagrangian-x-xdot-partials}\) is not something you should generically expect to hold outside the Lagrangian formalism. And indeed, when we study the Hamiltonian framework below this rule will be replaced by a different one.

2.2 Configuration space and generalized coordinates

We now want to extend the Lagrangian formalism to deal with more general situations, beyond the rather special case of a particle moving in one dimension. We start with a definition:

The set of all possible (in principle) instantaneous configurations for a given physical system is known as configuration space. We will denote it by \(\mathcal{C}\).

It is important to note that this includes positions, but it does not include velocities. One informal way of thinking about configuration space is as the space of all distinct photographs one can take of the system, at least in principle.

Additionally, in constructing configuration space we make no statement about dynamics: we need to construct configuration space before we construct a Lagrangian, which tells us about the dynamics in this configuration space.

A particle moving in \(\mathbb{R}^d\) (that is, \(d\)-dimensional euclidean space) has configuration space \(\mathbb{R}^d\). We discussed the \(d=1\) example above, where we had a particle moving in the one dimensional line \(\mathbb{R}\), which we parametrized by the coordinate \(x\).

\(N\) particles moving freely in \(\mathbb{R}^d\) have configuration space \((\mathbb{R}^{d})^N=\mathbb{R}^{dN}\). (This assumes that we can always distinguish the particles. I leave it as an amusing exercise to work out what the configuration space is if you cannot distinguish the particles.)

\(N\) electrically charged particles moving in \(\mathbb{R}^d\): since the particles are electrically charged they repel or attract each other. But this is a dynamical question, and configuration space is insensitive to such matters: it is still \(\mathbb{R}^{dN}\). One way to see this is that you can always place a set of \(N\) particles at any desired positions in \(\mathbb{R}^d\) (barring some singular points where particles overlap, so the system has infinite energy and is unphysical, but we can ignore such subtleties here). After being released, the particles will subsequently move in a manner described by the Euler-Lagrange equations, but any initial choice is permitted.

Two particles joined by a rigid rod of length \(\ell\) in \(d\)-dimensions: without the rod the configuration space is \(\mathbb{R}^{2d}\), but the rod introduces the constraint that the particles are at fixed distance \(\ell\) from each other. This can be written as \[||\vec{x}_1 - \vec{x}_2||^2 = \ell^2\] where \(\vec{x}_1\) and \(\vec{x}_2\) are the positions of the two particles in \(\mathbb{R}^d\). The configuration space is \(2d-1\) dimensional, given by the surface defined by this equation inside \(\mathbb{R}^{2d}\).
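For \(d=2\) this configuration space is three-dimensional, and one convenient (but by no means unique) way to parametrize it is by the midpoint \((X,Y)\) of the rod and its angle \(\varphi\) with the \(x\)-axis. The short Python sketch below, with hypothetical names and illustrative values, maps these three coordinates to the two particle positions; the constraint then holds automatically, so the three coordinates can be varied independently.

```python
import math

def rod_positions(X, Y, phi, ell):
    """Map three coordinates (X, Y, phi) to the positions of both particles.

    (X, Y) is the midpoint of the rod and phi its angle with the x-axis;
    this is one illustrative parametrization, not the only possible one.
    """
    dx = 0.5 * ell * math.cos(phi)
    dy = 0.5 * ell * math.sin(phi)
    return (X + dx, Y + dy), (X - dx, Y - dy)

p1, p2 = rod_positions(0.3, -1.2, 0.7, ell=2.0)
dist2 = (p1[0] - p2[0]) ** 2 + (p1[1] - p2[1]) ** 2
print(dist2)   # ell^2 = 4.0, up to rounding
```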

Finally, consider a rigid body in \(\mathbb{R}^3\), a desk for instance. We can view it as formed by \(\sim 10^{27}\) atoms, joined by atomic forces. But for the purposes of classical mechanics we certainly do not care about the motion of the individual atoms (we are doing classical mechanics, not quantum mechanics, so we would get the answer wrong anyway, even if we could compute it!). Rather, for classical dynamics we can think about the classical configurations that the desk can take. And this is a six-dimensional space, given (for instance) by the position of the centre of mass of the desk, and three rotational angles.

Given a configuration space \(\mathcal{C}\) for a physical system \(\mathcal{S}\), we say that \(\mathcal{S}\) has \(\dim(\mathcal{C})\) degrees of freedom.

Although it is illuminating to think of configuration space abstractly, in practice we will often want to put coordinates on it, so that we can write and analyse concrete equations. I emphasize that this is a matter of convenience: any choice of coordinate system is equally valid, and the Lagrangian formalism holds regardless of the choice.

Given a configuration space \(\mathcal{C}\), any set of coordinates in this space is known as a set of generalized coordinates. Conventionally, when we want to indicate that some equation holds for arbitrary choices of generalized coordinates, we will use “\(q_i\)” for the coordinate names, with \(i\in\{1,\ldots,\dim(\mathcal{C})\}\), and “\(\mathbf{q}\)” (without indices) for the coordinate vector with components \(q_i\).

Consider the case of a particle moving on \(\mathbb{R}^2\). The configuration space is \(\mathbb{R}^2\). There are two natural sets of coordinates in this space (although I emphasize again that any choice is valid): we could choose the ordinary Cartesian coordinates \((x,y)\), or it might be more convenient to choose polar coordinates \(r,\theta\) satisfying \[\begin{split} x & = r\cos(\theta)\, ,\\ y & = r\sin(\theta)\, . \end{split}\]

Consider instead the case of a bead attached to a circular wire of unit radius on \(\mathbb{R}^2\), defined by the equation \(x^2+y^2=1\). The configuration space is the circle, \(S^1\). A possible coordinate in this space is the angular variable \(\theta\) appearing in the description of the circle in polar coordinates.

We will only be dealing with unconstrained generalized coordinates when describing configuration space. That is, we want a set of exactly \(\dim(\mathcal{C})\) coordinates (and no more) that describes, at least locally, the geometry of the configuration space \(\mathcal{C}\). So in the example of the bead on a circular wire above we can take \(\theta\) as our generalized coordinate, but we do not want to consider \((x,y)\) as generalized coordinates, as they are subject to the constraint \(x^2+y^2=1\). While there is nothing wrong geometrically with such systems of coordinates, the existence of the constraint implies that we cannot vary \(x\) and \(y\) independently in our variational problem (as we will implicitly do below), and this complicates the analysis of the problem somewhat. So, for simplicity, we will just declare that henceforth we are dealing with unconstrained systems of generalized coordinates.

We can now repeat the derivation of the Euler-Lagrange equations for a general configuration space \(\mathcal{C}\). Consider a general path in configuration space given by \(\mathbf{q}(t)\in\mathcal{C}\),6 and assume the existence of a Lagrangian function, \(L(\mathbf{q},\mathbf{\dot{q}})\), such that the action for the path is given by \[S= \int_{t_0}^{t_1}\! dt\, L(\mathbf{q}(t), \mathbf{\dot{q}}(t))\, .\] The variational principle states that, if we fix the initial and final positions in configuration space, that is \(\mathbf{q}(t_0)=\mathbf{q}^{(0)}\) and \(\mathbf{q}(t_1)=\mathbf{q}^{(1)}\), the path taken by the physical system satisfies \[\delta S = 0\] to first order in \(\delta \mathbf{q}(t)\). The derivation runs parallel to the one above (here \(N:=\dim(\mathcal{C})\)): \[\begin{split} \delta S & = \int_{t_0}^{t_1} \! dt \, \sum_{i=1}^N \frac{\partial L}{\partial q_i}\delta q_i + \sum_{i=1}^N \frac{\partial L}{\partial \dot{q}_i}\delta \dot{q}_i \\ & = \int_{t_0}^{t_1} \! dt \, \sum_{i=1}^N \frac{\partial L}{\partial q_i}\delta q_i + \sum_{i=1}^N \frac{\partial L}{\partial \dot{q}_i} \frac{d}{dt}(\delta {q}_i) \\ & = \int_{t_0}^{t_1} \! dt \, \sum_{i=1}^N \left(\frac{\partial L}{\partial q_i} - \frac{d}{dt}\left(\frac{\partial L}{\partial \dot{q}_i}\right)\right)\delta {q}_i + \frac{d}{dt}\left(\delta q_i \frac{\partial L}{\partial \dot{q}_i}\right) \\ & = \left[\sum_{i=1}^N\delta q_i \frac{\partial L}{\partial \dot{q}_i}\right]_{t_0}^{t_1} + \int_{t_0}^{t_1} \! dt \, \sum_{i=1}^N\left( \frac{\partial L}{\partial q_i} - \frac{d}{dt}\left(\frac{\partial L}{\partial \dot{q}_i}\right)\right)\delta {q}_i\, . \end{split}\] As mentioned above, we are dealing with unconstrained coordinates, meaning that we can vary the \(q_i\) independently in configuration space. Since there are \(\dim(\mathcal{C})\) independent coordinates, applying the fundamental lemma of the calculus of variations leads to the system of \(\dim(\mathcal{C})\) equations

\[\begin{equation} \label{eq:Euler-Lagrange} \frac{\partial L}{\partial q_i} - \frac{d}{dt}\left(\frac{\partial L}{\partial \dot{q}_i}\right) = 0 \qquad \forall i\in \{1,\ldots,\dim(\mathcal{C})\} \end{equation}\]

known as the Euler-Lagrange equations. I want to emphasize the fact that we have not made any assumptions about the specific choice of coordinate system used in deriving these equations, so the Euler-Lagrange equations are valid in any coordinate system.7

We emphasized above that in the case of systems with one degree of freedom the Lagrangian is a function of the coordinate \(x\) (a coordinate in the one-dimensional configuration space) and \(\dot{x}\), and that these should be treated as independent variables when writing down the Euler-Lagrange equations for the system.

Similarly, for \(N\)-dimensional configuration spaces, with generalized coordinates \(q_i\) with \(i\in\{1,\ldots,N\}\), we have in the Lagrangian formalism \[\frac{\partial q_i}{\partial \dot{q}_j} = \frac{\partial\dot{q}_i}{\partial q_j} = 0\ \] and \[\frac{\partial q_i}{\partial q_j} = \frac{\partial \dot{q}_i}{\partial \dot{q}_j} = \delta_{ij} = \begin{cases} 1 & \text{if } i=j\, ,\\ 0 & \text{otherwise.} \end{cases}\]

Later on we will also include the possibility of Lagrangians that depend explicitly on time. We indicate this as \(L(\mathbf{q},\mathbf{\dot{q}}, t)\); an example could be \(L=\frac{1}{2}m\dot{x}^2 - \frac{1}{2}t^2x^2\).

This is a mild modification of the discussion above, and it does not affect the form of the Euler-Lagrange equations, but there are a couple of things to keep in mind:

When taking partial derivatives, \(t\) should be taken to be independent from \(\mathbf{q}\) and \(\mathbf{\dot{q}}\). The reasoning for this is as in our earlier discussion of the one-dimensional case: the Lagrangian is now a function of \(2\dim(\mathcal{C})+1\) arguments (the generalized coordinates, their velocities, and time), which are unrelated to each other. It is only when we use the Lagrangian to build the action that the arguments become related, but the partial derivatives that appear in the functional variation do not care about this, since they arise in computing the variation of the action under small changes in the path.

For instance, for \(L=\frac{1}{2}m\dot{x}^2 - \frac{1}{2}t^2x^2\) we have \[\frac{\partial L}{\partial \dot{x}} = m\dot{x}\qquad ; \qquad \frac{\partial L}{\partial x} = -xt^2 \qquad ; \qquad \frac{\partial L}{\partial t} = -tx^2\, .\]

Since in extremizing the action we change the path, but leave the time coordinate untouched, there is no Euler-Lagrange equation associated to \(t\). In the example above there would be a single Euler-Lagrange equation, of the form \[\frac{d}{dt}\left (\frac{\partial L}{\partial {\dot x }}\right )-\frac{\partial L}{\partial x} = m\ddot{x} + t^2x = 0\, .\]
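Here is a small Python sketch of the rule that \(x\), \(\dot x\) and \(t\) are independent arguments: the Lagrangian \(L=\frac{1}{2}m\dot{x}^2-\frac{1}{2}t^2x^2\) is written as an ordinary function of three numbers \((r,s,t)\), and each partial derivative is estimated by a central difference that moves one argument while holding the other two fixed. The value \(m=2\), the helper names and the evaluation point are arbitrary choices for illustration.

```python
def lagrangian(r, s, t, m=2.0):
    """L(r, s, t) = (1/2) m s^2 - (1/2) t^2 r^2, with r, s, t independent."""
    return 0.5 * m * s**2 - 0.5 * t**2 * r**2

def partial(fn, args, index, h=1e-5):
    """Central-difference partial derivative in one argument, others fixed."""
    up = list(args)
    down = list(args)
    up[index] += h
    down[index] -= h
    return (fn(*up) - fn(*down)) / (2 * h)

point = (0.7, -1.3, 1.5)   # values of (x, xdot, t) at some instant
r, s, t = point
print(partial(lagrangian, point, 0), -r * t**2)  # dL/dx    = -x t^2
print(partial(lagrangian, point, 1), 2.0 * s)    # dL/dxdot = m xdot
print(partial(lagrangian, point, 2), -t * r**2)  # dL/dt    = -t x^2
```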

2.3 Lagrangians for classical mechanics

So far we have kept \(L(\mathbf{q},\mathbf{\dot{q}})\) unspecified. How should we choose the Lagrangian in order to reproduce the classical equations of motion? Ultimately, this needs to be decided by experiment, but in problems in classical mechanics there is a very simple prescription. Consider a system with kinetic energy \(T(\mathbf{q},\mathbf{\dot{q}})\) and potential energy \(V(\mathbf{q})\). Then the Lagrangian that leads to the right equations of motion is

\[L = T - V\]

Let us see that this gives the right equations of motion in the simple case of a particle moving in three dimensions. The configuration space is \(\mathbb{R}^3\), and if we choose Cartesian coordinates \(x_i\) (that is, we choose \(q_i=x_i\)) we have \[T = \frac{1}{2}m(\dot{x}_1^2 + \dot{x}_2^2 + \dot{x}_3^2)\] and \(V=V(x_1,x_2,x_3)\). Note, in particular, that \(T\) depends only on \(\dot{x}_i\), and \(V\) depends on \(x_i\) only. We have three degrees of freedom, so we have three Euler-Lagrange equations, given by \[\begin{split} 0 & = \frac{\partial L}{\partial x_i} - \frac{d}{dt}\left(\frac{\partial L}{\partial \dot{x}_i}\right) \\ & = -\frac{\partial V}{\partial x_i} - m\frac{d}{dt}(\dot{x}_i) \\ & = -\frac{\partial V}{\partial x_i} - m\ddot{x}_i \end{split}\] where we have used that \(\frac{\partial V}{\partial \dot{x}_i}=0\) and \(\frac{\partial T}{\partial x_i}=0\), since \(x_i\) and \(\dot{x}_i\) are independent variables in the Lagrangian formalism, as we explained above. We can rewrite the equations above in vector notation as \[m\frac{d^2}{dt^2}(\vec{x}) = -\vec{\nabla} V\] which is precisely Newton’s second law for a conservative force \(\vec{F}=-\vec{\nabla} V\).
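The same derivation can be checked numerically. The Python sketch below takes, as an illustrative assumption, uniform gravity \(V=mgx_3\), treats positions and velocities as independent tuples when differentiating \(L=T-V\), and evaluates the Euler-Lagrange expression \(\frac{\partial L}{\partial x_i}-\frac{d}{dt}\frac{\partial L}{\partial \dot{x}_i}\) along a projectile trajectory that satisfies \(m\ddot{\vec{x}}=-\vec{\nabla}V\). All three components come out as zero up to rounding. The helper names and numerical values are hypothetical.

```python
m, g = 2.0, 9.81   # illustrative values

def L(x, v):
    """L = T - V with V = m g x3; x and v are independent 3-tuples."""
    T = 0.5 * m * sum(vi**2 for vi in v)
    V = m * g * x[2]
    return T - V

def shift(vec, i, delta):
    """Return a copy of the tuple vec with component i shifted by delta."""
    return tuple(c + delta if j == i else c for j, c in enumerate(vec))

def dL_dx(x, v, i, h=1e-3):
    """Partial derivative of L with respect to x_i, holding v fixed."""
    return (L(shift(x, i, h), v) - L(shift(x, i, -h), v)) / (2 * h)

def dL_dv(x, v, i, h=1e-3):
    """Partial derivative of L with respect to v_i, holding x fixed."""
    return (L(x, shift(v, i, h)) - L(x, shift(v, i, -h))) / (2 * h)

def path(t):
    """Projectile: constant horizontal velocity, vertical acceleration -g."""
    x = (1.0 + 3.0 * t, -2.0 * t, 5.0 + 4.0 * t - 0.5 * g * t**2)
    v = (3.0, -2.0, 4.0 - g * t)
    return x, v

def el_residual(t, i, dt=1e-3):
    """dL/dx_i - d/dt(dL/dv_i) along the path, with d/dt as a central difference."""
    p_plus = dL_dv(*path(t + dt), i)
    p_minus = dL_dv(*path(t - dt), i)
    return dL_dx(*path(t), i) - (p_plus - p_minus) / (2 * dt)

print([el_residual(0.8, i) for i in range(3)])   # all close to 0
```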

The simplest example of the discussion so far is the free particle of mass \(m\) moving in \(d\) dimensions. Its configuration space is \(\mathbb{R}^d\), which we can parametrize using Cartesian coordinates \(x_i\). In these coordinates the kinetic energy is given by \[T = \frac{1}{2}m\sum_{i=1}^d \dot{x}_i^2\] and the potential energy \(V\) vanishes. This gives a Lagrangian \[L = T - V = \frac{1}{2}m\sum_{i=1}^d \dot{x}_i^2\] which leads to the \(d\) Euler-Lagrange equations of motion \[m\ddot{x}_i = 0 \quad \forall i\in\{1,\ldots,d\}\, .\] These equations are solved by the particle moving at constant velocity, \(x_i=v_i t+b_i\), with \(v_i, b_i\) constants.

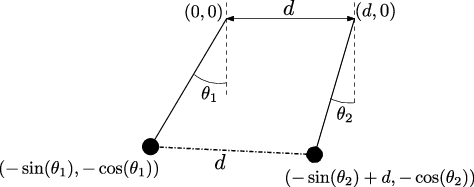

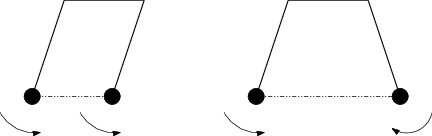

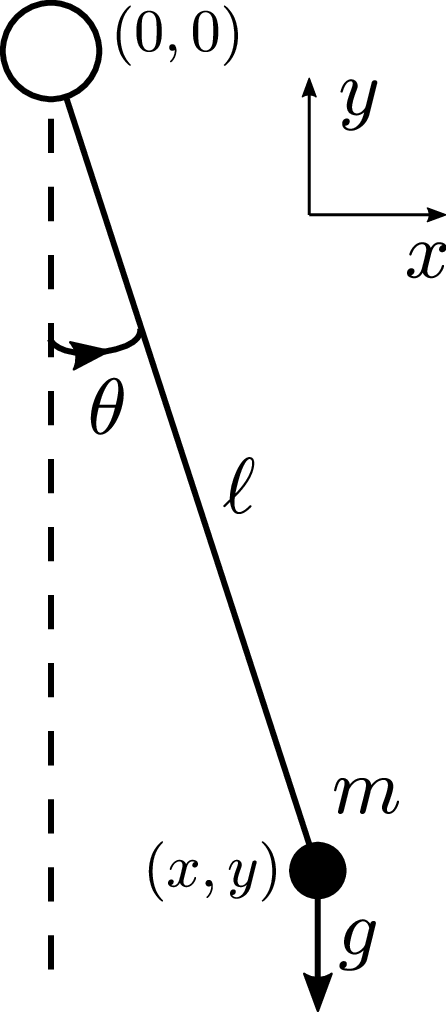

Our second example will be a pendulum moving under the influence of gravity. Our conventions will be as in figure 1: we have a mass \(m\) attached by a rigid massless rod of length \(\ell\) to a fixed point at the origin. The pendulum can swing on the \((x,y)\) plane. The configuration space of the system is \(S^1\). We choose as a coordinate the angle \(\theta\) of the rod with the downward vertical axis from the origin, measured counterclockwise. The whole system is affected by gravity, which acts downwards.

We now need to compute the kinetic and potential energy in terms of \(\theta\). The expression of the kinetic energy in the \((x,y)\) coordinates is \(\frac{1}{2}m(\dot{x}^2 + \dot{y}^2)\). In terms of \(\theta\) we have \[x = \ell \sin(\theta)\qquad \text{and}\qquad y = -\ell \cos(\theta)\, .\] This implies \(\dot{x}=\ell\cos(\theta)\dot{\theta}\) and \(\dot{y}=\ell\sin(\theta)\dot{\theta}\), so \[T = \frac{1}{2}m \ell^2 \dot{\theta}^2\,.\] The potential energy, in turn, is (up to an irrelevant additive constant) given by \[V = mgy = -mg\ell\cos(\theta)\] leading to the Lagrangian \[L = T-V = \frac{1}{2}m\ell^2\dot{\theta}^2 + mg\ell\cos(\theta)\, .\] The corresponding Euler-Lagrange equations are \[m\ell^2 \ddot{\theta} + mg\ell\sin(\theta) = 0\] or equivalently \[\ddot{\theta} + \frac{g}{\ell}\sin(\theta) = 0\, .\] The exact solution of this system requires using something known as elliptic integrals, but as a simple check of our solution, note that for small angles \(\sin(\theta)\approx \theta\), and the Euler-Lagrange equation reduces to \[\ddot{\theta} + \frac{g}{\ell}\theta = 0\] with solution \(\theta(t)=a\sin(\omega t) + b\cos(\omega t)\), where \(\omega=\sqrt{g/\ell}\), and \(a,b\) are arbitrary constants that encode initial conditions. These are the simple oscillatory solutions that one expects close to \(\theta=0\).
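As a further check, one can integrate the full equation \(\ddot{\theta}+\frac{g}{\ell}\sin(\theta)=0\) numerically and compare with the small-angle solution. The Python sketch below uses a classical fourth-order Runge-Kutta step (our choice of integrator, not something the text prescribes) with illustrative values \(g=9.81\), \(\ell=1\), releasing the pendulum from rest at \(\theta_0=0.05\), so that \(a=0\) and \(b=\theta_0\). It also monitors the energy per unit mass \(\frac{1}{2}\ell^2\dot\theta^2-g\ell\cos(\theta)\), which should stay constant.

```python
import math

G, ELL = 9.81, 1.0   # illustrative values (SI units)

def accel(theta):
    """Angular acceleration from the Euler-Lagrange equation."""
    return -(G / ELL) * math.sin(theta)

def rk4_step(theta, w, dt):
    """One classical Runge-Kutta step for theta' = w, w' = accel(theta)."""
    k1t, k1w = w, accel(theta)
    k2t, k2w = w + 0.5 * dt * k1w, accel(theta + 0.5 * dt * k1t)
    k3t, k3w = w + 0.5 * dt * k2w, accel(theta + 0.5 * dt * k2t)
    k4t, k4w = w + dt * k3w, accel(theta + dt * k3t)
    theta += dt * (k1t + 2 * k2t + 2 * k3t + k4t) / 6
    w += dt * (k1w + 2 * k2w + 2 * k3w + k4w) / 6
    return theta, w

def energy_per_mass(theta, w):
    """(T + V)/m = (1/2) ell^2 w^2 - g ell cos(theta)."""
    return 0.5 * ELL**2 * w**2 - G * ELL * math.cos(theta)

def simulate(theta0, t_end=2.0, dt=1e-3):
    """Integrate from rest at theta0 up to time t_end."""
    theta, w = theta0, 0.0
    for _ in range(round(t_end / dt)):
        theta, w = rk4_step(theta, w, dt)
    return theta, w

theta0 = 0.05
theta, w = simulate(theta0)
omega = math.sqrt(G / ELL)
print(theta, theta0 * math.cos(omega * 2.0))   # nearly equal for small angles
print(energy_per_mass(theta, w) - energy_per_mass(theta0, 0.0))  # close to 0
```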

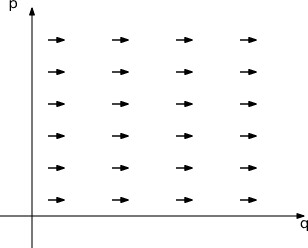

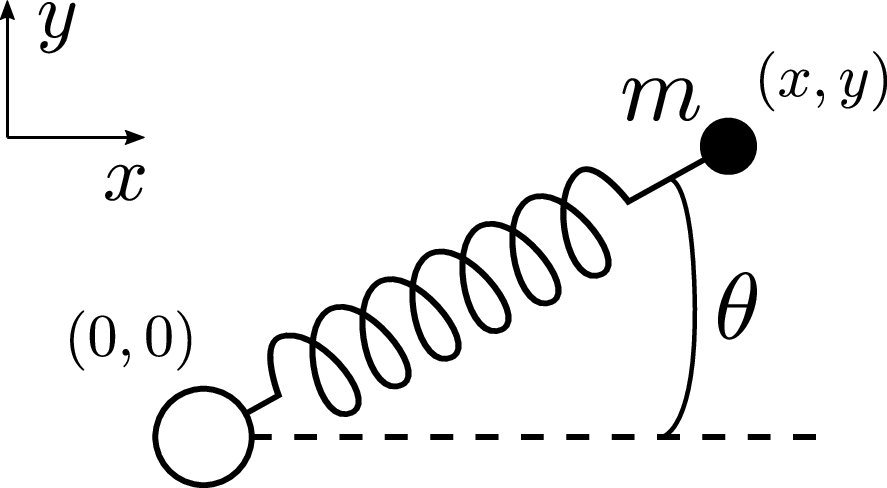

Consider instead a mass attached to a spring. One end of the spring is fixed at the origin, but the spring is otherwise free to rotate in the \((x,y)\) plane, without friction. In this case we ignore the effect of gravity, and we assume that the spring has vanishing natural length and spring constant \(\kappa\). The configuration is shown in figure 2.

In this case the configuration space is \(\mathbb{R}^2\). It is easiest to solve the Euler-Lagrange equations in Cartesian coordinates. We have the kinetic energy \[T = \frac{1}{2}m(\dot{x}^2 + \dot{y}^2)\, .\] The potential energy is one half of the spring constant times the square of the extension of the spring. Since we are assuming that the natural length of the spring is 0, the extension of the spring is \(\ell = \sqrt{x^2+y^2}\), so the potential energy is \[V = \frac{1}{2}\kappa \ell^2 = \frac{1}{2}\kappa (x^2+y^2) \, .\] Putting everything together, we find \[L = T-V = \frac{1}{2}m (\dot{x}^2+\dot{y}^2) - \frac{1}{2}\kappa (x^2+ y^2)\, .\] The Euler-Lagrange equations split into independent equations for \(x\) and \(y\), given by \[\begin{split} \ddot{x} + \frac{\kappa}{m}x &= 0\, ,\\ \ddot{y} + \frac{\kappa}{m}y & =0\, . \end{split}\] The general solution is then simply \[\begin{split} x(t)&=a_x\sin(\omega t) + b_x\cos(\omega t)\, ,\\ y(t)&=a_y\sin(\omega t) + b_y\cos(\omega t)\, , \end{split}\] with \(a_x,a_y,b_x,b_y\) constants encoding the initial conditions, and \(\omega=\sqrt{\kappa/m}\).
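Fixing the four constants from initial data takes one line per coordinate. At \(t=0\) the general solution gives \(x(0)=b_x\) and \(\dot{x}(0)=\omega a_x\), and likewise for \(y\). The Python sketch below assumes that the initial position and velocity are given. The helper name `spring_solution` and the sample values \(\kappa=2\), \(m=0.5\) are illustrative only. The sketch also checks \(\ddot{x}+(\kappa/m)x=0\) with a second central difference.

```python
import math


def spring_solution(x0, y0, vx0, vy0, kappa, m):
    """Return t -> (x(t), y(t)) for the zero-length spring with the given initial data.

    At t = 0 the general solution gives x = b_x and xdot = omega * a_x (likewise for y).
    """
    omega = math.sqrt(kappa / m)
    a_x, b_x = vx0 / omega, x0
    a_y, b_y = vy0 / omega, y0

    def position(t):
        s, c = math.sin(omega * t), math.cos(omega * t)
        return a_x * s + b_x * c, a_y * s + b_y * c

    return position


# Assumed example data: kappa = 2, m = 0.5, so omega = 2.
kappa, m = 2.0, 0.5
pos = spring_solution(1.0, 0.5, 0.2, -0.3, kappa, m)

# Check xddot + (kappa/m) x = 0 with a second central difference at t = 0.7.
h, t = 1e-4, 0.7
x_minus, x_mid, x_plus = pos(t - h)[0], pos(t)[0], pos(t + h)[0]
print((x_plus - 2.0 * x_mid + x_minus) / h**2 + kappa / m * x_mid)  # approximately 0
```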

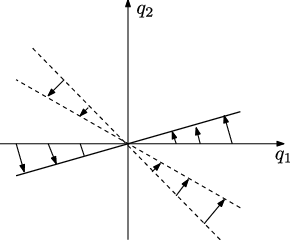

Let us try to solve this last example in polar coordinates \(r,\theta\). These are related to Cartesian coordinates by \[\begin{split} x & = r\cos(\theta)\, ,\\ y & = r\sin(\theta)\, . \end{split}\] Taking time derivatives and using the chain rule, we find \[\begin{split} \dot{x} & = \dot{r}\cos(\theta) - r\sin(\theta)\dot{\theta}\, ,\\ \dot{y} & = \dot{r}\sin(\theta) + r\cos(\theta)\dot{\theta}\, . \end{split}\] Squaring and adding these two equations, the cross terms \(\pm 2r\dot{r}\dot{\theta}\sin(\theta)\cos(\theta)\) cancel. Using \(\cos^2(\theta)+\sin^2(\theta)=1\), we then obtain \[T = \frac{1}{2}m(\dot{x}^2 + \dot{y}^2) = \frac{1}{2}m(\dot r^2 + r^2\dot{\theta}^2)\, .\] The potential energy is even simpler: \[V = \frac{1}{2}\kappa (x^2+y^2) = \frac{1}{2}\kappa r^2\, .\] We thus find that the Lagrangian in polar coordinates is \[L = T-V = \frac{1}{2}m(\dot r^2 + r^2\dot{\theta}^2) - \frac{1}{2}\kappa r^2\, .\] Let us write the Euler-Lagrange equations. For the coordinate \(r\) we have \[\frac{d}{dt}\left (\frac{\partial L}{\partial {\dot r }}\right )-\frac{\partial L}{\partial r} = m\ddot{r} - mr\dot{\theta}^2 + \kappa r = 0\] while for the \(\theta\) coordinate we have \[\frac{d}{dt}\left (\frac{\partial L}{\partial {\dot \theta }}\right )-\frac{\partial L}{\partial \theta} = \frac{d}{dt}\left(mr^2\dot{\theta}\right) = 0\, .\] This equation is quite remarkable: it tells us that there is a conserved quantity in this system, given by \(mr^2\dot{\theta}\). This was not at all obvious in the Cartesian formulation of the problem,\(^{8}\) but it follows immediately in polar coordinates. The Lagrangian depends on \(\dot{\theta}\) but not on \(\theta\), so \(\partial L/\partial \theta=0\). Define \[J :=mr^2\dot{\theta}\, .\] This is a constant of motion, so on any given classical trajectory it is simply a real number fixed by the initial conditions.
We can use this to simplify the Euler-Lagrange equation for \(r\). After substituting \(\dot{\theta}=J/(mr^2)\), it becomes an equation purely in terms of \(r\): \[m\ddot{r} - mr\left(\frac{J}{mr^2}\right)^2 + \kappa r = m\ddot{r} - \frac{J^2}{mr^3} + \kappa r = 0\, .\]
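As a consistency check between the Cartesian and polar descriptions, we can evaluate the Cartesian solution and compute \(J\) in the form \(m(x\dot{y}-y\dot{x})\). This equals \(mr^2\dot{\theta}\), since \(\dot{\theta}=(x\dot{y}-y\dot{x})/r^2\). We can then confirm that \(J\) is constant and that \(r(t)=\sqrt{x^2+y^2}\) satisfies the reduced radial equation. The Python sketch below assumes \(m=\kappa=1\) and the initial data \((x,y,\dot x,\dot y)=(1,0,0,\tfrac12)\). These values are chosen so that \(J\neq 0\) and \(r\) never vanishes, and they and the function names are illustrative only.

```python
import math

# Assumed parameters and initial data (illustrative only).
m, kappa = 1.0, 1.0
omega = math.sqrt(kappa / m)
x0, y0, vx0, vy0 = 1.0, 0.0, 0.0, 0.5


def cartesian_state(t):
    """Cartesian solution (x, y, xdot, ydot) with a = v(0)/omega and b = position(0)."""
    s, c = math.sin(omega * t), math.cos(omega * t)
    x = vx0 / omega * s + x0 * c
    y = vy0 / omega * s + y0 * c
    xdot = vx0 * c - x0 * omega * s
    ydot = vy0 * c - y0 * omega * s
    return x, y, xdot, ydot


def angular_momentum(t):
    """J = m r^2 thetadot, written in Cartesian form as m (x ydot - y xdot)."""
    x, y, xdot, ydot = cartesian_state(t)
    return m * (x * ydot - y * xdot)


def radial_residual(t, J, h=1e-4):
    """m rddot - J^2/(m r^3) + kappa r, with rddot from a second central difference."""
    def r(s):
        x, y, _, _ = cartesian_state(s)
        return math.hypot(x, y)

    rddot = (r(t + h) - 2.0 * r(t) + r(t - h)) / h**2
    return m * rddot - J**2 / (m * r(t)**3) + kappa * r(t)


J = angular_momentum(0.0)
for t in (0.0, 1.0, 2.5):
    print(t, angular_momentum(t), radial_residual(t, J))
```

With these initial data, \(x\dot{y}-y\dot{x}=\tfrac12\cos^2(t)+\tfrac12\sin^2(t)=\tfrac12\). The printed values of \(J\) are therefore all \(0.5\), and the radial residuals are at the level of finite-difference error.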

2.4 Ignorable coordinates and conservation of generalised momenta

It is useful to formalize what we just saw in the rotating spring example.

Given a set \(\{q_1,\ldots,q_N\}\) of generalized coordinates, we say that a specific coordinate \(q_i\) in the set is ignorable if the Lagrangian function, expressed in these generalised coordinates, does not depend on \(q_i\). That is, a coordinate is ignorable iff \[\frac{\partial L(q_1,\ldots,q_N,\dot{q}_1,\ldots,\dot{q}_N)}{\partial q_i} = 0\, .\]

The generalized momentum \(p_i\) associated to a generalized coordinate \(q_i\) is \[p_i:=\frac{\partial L}{\partial \dot{q}_i}\, .\]

With these two definitions in place, we have the following result.

The generalized momentum associated to an ignorable coordinate is conserved.

This follows immediately from the Euler-Lagrange equation for the ignorable coordinate. Denoting the ignorable coordinate by \(q_i\) and its generalized momentum by \(p_i\), we have \[\frac{d}{dt}\left (\frac{\partial L}{\partial {\dot q_i }}\right )-\frac{\partial L}{\partial q_i} = \frac{dp_i}{dt} - 0 = \frac{dp_i}{dt} = 0\, .\]
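The two definitions translate directly into code. The Python sketch below evaluates \(\partial L/\partial q_i\) and \(p_i=\partial L/\partial \dot{q}_i\) by central finite differences. It assumes the Lagrangian is a function of the \(2N\) arguments \((q_1,\ldots,q_N,\dot{q}_1,\ldots,\dot{q}_N)\). Ignorability is tested only at randomly sampled points, so a positive answer is numerical evidence, not a proof. The names `partial`, `looks_ignorable`, `generalized_momentum` and the choice \(m=\kappa=1\) are hypothetical.

```python
import random


def partial(f, args, i, eps=1e-6):
    """Central-difference partial derivative of f with respect to args[i]."""
    up, down = list(args), list(args)
    up[i] += eps
    down[i] -= eps
    return (f(*up) - f(*down)) / (2.0 * eps)


def looks_ignorable(lag, n, i, samples=200, tol=1e-6, seed=0):
    """Heuristic check that dL/dq_i vanishes at random points (evidence, not proof).

    lag takes (q_1, ..., q_n, qdot_1, ..., qdot_n) as 2n positional arguments.
    """
    rng = random.Random(seed)
    for _ in range(samples):
        point = [rng.uniform(0.1, 2.0) for _ in range(2 * n)]
        if abs(partial(lag, point, i)) > tol:
            return False
    return True


def generalized_momentum(lag, point, n, i):
    """p_i = dL/dqdot_i; the qdot_i argument sits at index n + i."""
    return partial(lag, point, n + i)


def spring_polar(r, theta, rdot, thetadot):
    """Rotating-spring Lagrangian in polar coordinates, with m = kappa = 1 assumed."""
    return 0.5 * (rdot**2 + r**2 * thetadot**2) - 0.5 * r**2


print(looks_ignorable(spring_polar, n=2, i=1))  # theta: ignorable
print(looks_ignorable(spring_polar, n=2, i=0))  # r: not ignorable
print(generalized_momentum(spring_polar, [1.5, 0.3, 0.2, 0.7], n=2, i=1))  # about r^2 thetadot = 1.575
```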

We already found an ignorable coordinate in the rotating spring example: there \(\theta\) was ignorable, and its associated generalized momentum is \[p_\theta = \frac{\partial L}{\partial\dot{\theta}} = mr^2\dot{\theta} \, .\]

An even simpler example is the free particle moving in \(d\) dimensions. In Cartesian coordinates we have \[L = T-V = \frac{1}{2} m \sum_{i=1}^d \dot{x}_i^2\, ,\] so every coordinate is ignorable. The associated generalized momenta are \[p_i = \frac{\partial L}{\partial \dot{x}_i} = m\dot{x}_i\, .\] In this case conservation of generalized momenta is simply conservation of linear momentum.

Let us look again at the free particle, but this time in two dimensions (\(d=2\)) and in polar coordinates. We have \[L = T - V = \frac{1}{2}m(\dot{r}^2 + r^2\dot{\theta}^2)\, ,\] so \(\theta\) is ignorable. The associated conserved generalized momentum is \[p_\theta = \frac{\partial L}{\partial \dot{\theta}} = mr^2\dot{\theta}\, .\] You might recognize this as the angular momentum of the particle (that is, the position vector \(\times\) linear momentum), which should indeed be conserved for the free particle.
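We can check numerically that \(mr^2\dot{\theta}\) coincides with the angular momentum \(m(x\dot{y}-y\dot{x})\) along straight-line motion, and that it is constant there. The Python sketch below computes \(\dot{\theta}\) from a central difference of the polar angle \(\theta=\operatorname{atan2}(y,x)\). It uses `math.remainder` to wrap the angle difference into \([-\pi,\pi]\) in case the path crosses the branch cut of `atan2`. The mass and the straight-line data \(b=(1,1)\), \(v=(1,-\tfrac12)\) are assumed values for illustration.

```python
import math

m = 2.0             # assumed mass
b = (1.0, 1.0)      # assumed initial position
v = (1.0, -0.5)     # assumed constant velocity


def position(t):
    """Free-particle motion x_i = v_i t + b_i."""
    return b[0] + v[0] * t, b[1] + v[1] * t


def p_theta(t, h=1e-5):
    """m r^2 thetadot, with thetadot from a central difference of the polar angle."""
    x_plus, y_plus = position(t + h)
    x_minus, y_minus = position(t - h)
    # math.remainder wraps the angle difference into [-pi, pi] across the branch cut
    dtheta = math.remainder(math.atan2(y_plus, x_plus) - math.atan2(y_minus, x_minus),
                            2.0 * math.pi)
    x, y = position(t)
    return m * (x**2 + y**2) * dtheta / (2.0 * h)


def angular_momentum_z(t):
    """Component of (position x linear momentum) normal to the plane: m (x vy - y vx)."""
    x, y = position(t)
    return m * (x * v[1] - y * v[0])


for t in (-3.0, 0.0, 1.0, 4.0):
    print(t, p_theta(t), angular_momentum_z(t))
```

For these values, \(m(x v_y - y v_x) = m(b_x v_y - b_y v_x) = -3\) at every time, and the finite-difference \(p_\theta\) agrees with it up to rounding error.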