$$ \newcommand{\pr}[1]{\mathbb{P}\left(#1\right)} \newcommand{\cpr}[2]{\mathbb{P}\left(#1\mid\,#2\right)} \newcommand{\expec}[1]{\mathbb{E}\left[#1\right]} \newcommand{\var}[1]{\text{Var}\left(#1\right)} \newcommand{\sd}[1]{\sigma\left(#1\right)} \newcommand{\cov}[1]{\text{Cov}\left(#1\right)} \newcommand{\cexpec}[2]{\mathbb{E}\left[#1 \vert#2 \right]} $$

1 Axioms of probability

In this chapter, we lay the foundations of probability calculus, and establish the main techniques for practical calculations with probabilities. The mathematical theory of probability is based on axioms, like Euclidean geometry. In classical geometry, the fundamental objects posited by the axioms are points and lines; in probability, they are events and their probabilities. The language and apparatus of set theory is used to express these concepts and to work with them.

There is a lot of ambiguity inherent in probability, because we are often using mathematical approaches to describe real-world scenarios. In some cases, there are several different ways to represent the real-world scenario as a probabilistic model, and the choices we make could affect our conclusions. In others, an unambiguous mathematical setup could have different real-world interpretations, depending on how we view it. Either way, once we have a probabilistic model, the axioms help us to ensure that the maths remains the same.

The axioms and properties of probability we develop in this chapter lay the foundations for all the rest of the theory we will build later in the course.

1.1 Sets

One of the key tools we need in this chapter is a good understanding of set theory. You’ll see all of this much more formally in Analysis, but in this section we give a quick rundown of the essentials we need for Probability.

In essence, a set is an unordered collection of distinguishable objects; these objects can be numbers, functions, other sets, and so on—any mathematical object can belong to a set.

The formal notation for a set is an opening curly bracket, followed by a list of elements that belong to the set, followed by a closing curly bracket. For instance, the set containing the elements \(2\), \(4\), and \(5\) is denoted by \[ \{2,4,5\}. \] Because the ordering of the elements is irrelevant, \(\{2,4,5\}\) and \(\{4,5,2\}\) denote the same set.

A set is often denoted by a capital letter such as \(A\), \(B\), \(C\), and so on.

For instance, \(\{2,4,5\}\subseteq\{1,2,3,4,5\}\). Note that for every set \(A\), we have \(A \subseteq A\) and \(\emptyset\subseteq A\). We can also use strict subsets, when the subset is not equal to the larger set: \(\{2,4,5\} \subset \{1,2,3,4,5\}\).

For example, the power set of the set \(A=\{1,2,3\}\) is \[ 2^A=\{\emptyset,\{1\},\{2\},\{3\},\{1,2\},\{1,3\},\{2,3\},\{1,2,3\}\}. \]

The notation \(2^A\) alludes to the size of the power set. When \(A\) is a finite set, its power set contains \(2^{|A|}\) subsets. This can be proved by constructing a bijection from \(2^A\) to ordered \(|A|\)-tuples of \(0\)s and \(1\)s, where a \(1\) indicates that the corresponding element of \(A\) is in the subset.

1.2 Sample space and events

For instance, suppose we roll a standard six-sided die.

The most obvious sample space is \(\Omega = \{1,2,3,4,5,6\}\), but if one was interested only in whether the die was odd or even, or a six or not, one could use \(\Omega = \{\text{odd},\text{even}\}\), or \(\Omega = \{\text{not a }6, 6\}\).

Often, like in the above example, we may enumerate the elements of the sample space \(\Omega\) in a finite or infinite list \(\Omega = \{ \omega_1, \omega_2, \ldots \}\), in which case we say the set \(\Omega\) is countable or discrete.

A set is said to be countable when its elements can be enumerated in a (possibly infinite) sequence. Every finite set is countable, and so is the set of natural numbers \(\mathbb{N} := \{1,2,3,\ldots \}\). The set of integers \(\mathbb{Z}\) is countable as well. The set of real numbers \(\mathbb{R}\) is not countable, and neither is any interval \([a,b]\) when \(a<b\).

One can prove that the set of rational numbers \(\mathbb{Q}\) is countable.

When we perform an experiment we are interested in the occurence, or otherwise, of events. An event is just a collection of possible outcomes, i.e., a subset of \(\Omega\).

If \(\Omega\) is discrete, we can always take \(\mathcal{F} = 2^\Omega\), so that every subset of \(\Omega\) is an event. If \(\Omega\) is not discrete, we need to be a little more careful: see Section 1.4 below.

The empty set \(\emptyset\) represents the impossible event, i.e., it will never occur. The sample space \(\Omega\) represents the certain event, i.e., it will always occur. Most interesting events are somewhere in between.

The representation of an event as a set obviously depends on the choice of sample space \(\Omega\) for the specific scenario under study, as shown by the following two examples.

1.3 Event calculus

Once we’ve defined our sample space and the set of all possible events, we need to be able to refer to combinations of events. To do so, we use standard notation from set theory.

Notice that:

- the complement of \(A^\mathrm{c}\) is \(A\): \((A^\mathrm{c})^\mathrm{c}=A\);

- there are no outcomes in both \(A\) and \(A^\mathrm{c}\): \(A \cap A^\mathrm{c} = \emptyset\);

- and every outcome is in one or the other: \(A \cup A^\mathrm{c} = \Omega\).

As with sums (\(\sum\)) and products (\(\Pi\)) of multiple numbers, we also have shorthands for unions and intersections of multiple sets: \[ \bigcup_{i=1}^n A_i:= A_1\cup A_2\cup\dots\cup A_n \] is the event that at least one of \(A_1, A_2, \dots A_n\) occurs (or the set of all \(\omega \in \Omega\) which are contained in at least one of the \(A_i\)s), and \[ \bigcap_{i=1}^n A_i:= A_1\cap A_2\cap\dots\cap A_n \] is the event that all of \(A_1, A_2, \dots A_n\) occur (or the set of all \(\omega \in \Omega\) which are in every \(A_i\)).

Occasionally, we will also need to take infinite unions and intersections over sequences of sets: \[\begin{align*} \bigcup_{i=1}^\infty A_i&:= A_1\cup A_2\cup A_3\cup\dots \\ \bigcap_{i=1}^\infty A_i&:= A_1\cap A_2\cap A_3\cap\dots. \end{align*}\]

We will also sometimes need De Morgan’s Laws: for a (possibly infinite) collection of events \(A_i\),

- The complement of the union is the intersection of the complements: \(\left( \bigcup_{i} A_i \right)^\mathrm{c} = \bigcap_i A_i^\mathrm{c}\), and

- The complement of the intersection is the union of the complements: \(\left( \bigcap_{i} A_i \right)^\mathrm{c} = \bigcup_i A_i^\mathrm{c}\).

These could be more intuitive than they appear: the negation of “some of these things happened” is “none of these things happened”, and the negation of “all of these things happened” is “some of these things did not happen”.

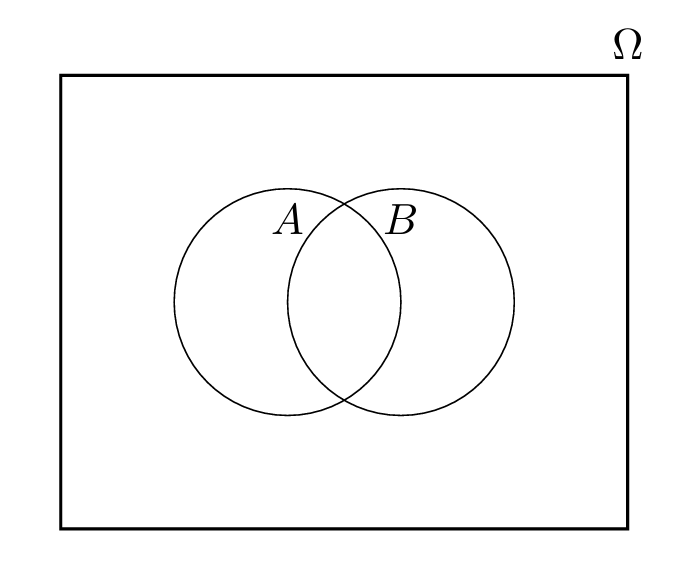

It is often useful to visualize the sample space in a Venn diagram. Then events such as \(A\) are subsets of the sample space. It is a helpful analogy to imagine the probability of an event as the area in the Venn diagram.

1.4 Sigma-algebras

In the last section we described some of the ways in which events can be combined. Now we can set out the rules for our collection of events, \(\mathcal{F}\), to ensure that it’s possible to use these different combinations.

We said that in the case where \(\Omega\) is discrete, one can take \(\mathcal{F} = 2^\Omega\).

In general, if \(\Omega\) is uncountable, it is too much to demand that probabilities should be defined on all subsets of \(\Omega\). The reason why this is a problem goes beyond the scope of this course (see the Bibliographical notes at the end of this chapter for references), but the essence is that for uncountable sample spaces, such as \(\Omega=[0,1]\), there exist subsets of \(\Omega\) that cannot be assigned a probability in a way that is consistent. The construction of such non-measurable sets is also the basis of the famous Banach–Tarski paradox.

Uncountable \(\Omega\) are unavoidable: we will see an infinite coin-tossing space at the end of section Section 1.6, and other examples occur whenever we have an experiment whose outcome is modelled by a continuous distribution such as the normal distribution (more on this later).

The upshot of all this is that we can, in general, only demand that probabilities are defined for all events in some collection \(\mathcal{F}\) of subsets of \(\Omega\) (i.e., for some \(\mathcal{F} \subseteq 2^\Omega\)). What properties should the collection \(\mathcal{F}\) of events possess? Consideration of the set operations in the previous section suggests the following definition.

Property S2 says that \(\mathcal{F}\) is closed under complementation, while S3 says that \(\mathcal{F}\) is closed under countable unions.

We can combine S1 and S2 to see that we must have \(\emptyset \in \mathcal{F}\). Also note that, we can get to a finite-union version of S3 by taking \(A_{n+1} = A_{n+2} = \cdots = \emptyset\): so \(\mathcal{F}\) is also closed under finite unions.

1.5 The axioms of probability

We will see shortly that a consequence of these axioms is that the probabilities \(\mathbb{P}(A)\) must lie between \(0\) and \(1\): \(0 \leq \mathbb{P}(A) \leq 1\).

We can upgrade (A3) to a slightly more technical version:

(A4) For any infinite sequence \(A_1,A_2,\dots\) of pairwise disjoint events (so \(A_i \cap A_j = \emptyset\) for all \(i \neq j\)), \[ \mathbb{P}\left( \bigcup_{i=1}^{\infty}A_i \right) = \sum_{i=1}^{\infty}\mathbb{P}(A_i). \]

For the axioms to make sense, we can’t just use any old event set \(\mathcal{F}\). For one thing, we need \(\Omega \in \mathcal{F}\); in fact all the events in (A1-4) need to be in \(\mathcal{F}\). Our definition of a \(\sigma\)-algebra from the previous section gives us exactly the event set we need.

As a concrete example, for tossing a fair die we would have \(\Omega = \{ 1,2,\ldots,6\}\), and \(\mathbb{P}(A) = |A|/6\) so, for example, \[ \mathbb{P}(\text{score is odd})=\mathbb{P}(\{1,3,5\})= \frac{3}{6} = \frac{1}{2}. \] We examine this setting in detail in Chapter 2.

For this course, we will usually assume that the probability distribution is given (and satisfies the axioms), without worrying too much about how the important practical task of finding the probabilities was carried out.

1.6 Consequences of the axioms

A host of useful results can be derived from A1–4.

Just one more consequence to go! Before we get there, we need the following simple but extremely useful idea: partitions.

For example, consider the sample space \(\Omega=\{1,2,3,4,5,6\}\). Some partitions are: \[\begin{align*} \{1\}, \{2\}, \{3\}, \{4\}, \{5\}, \{6\} \\ \{1, 2\}, \{3, 4\}, \{5, 6\} \\ \{1, 2, 3\}, \{4, 5, 6\} \\ \{1\}, \{2, 3\}, \{4, 5, 6\} \\ \{1, 2, 3, 4, 5, 6\} \\ \end{align*}\] and so on.

These consequences have an enormous effect on the way we work with probability. In particular, it turns out that we can solve most problems without ever having to explicitly write down the outcomes in our sample space, as in the next example. In fact, some people do probability without even defining a sample space.

Finite sample spaces are a great way to build up our intuition for probability calculations. However, it is surprisingly easy to end up in a situation where things start to get complicated.

1.7 Historical context

Sets are important not only for probability theory, but for all of mathematics. In fact, all of standard mathematics can be formulated in terms of set theory, under the assumption that sets satisfy the ZFC axioms; see for instance this Wikipedia page.

The foundations of probability have a long and interesting history (Hacking 2006; Todhunter 2014). The classical theory owes much to Pierre-Simon Laplace (1749–1827): see (Laplace 1825). However, a rigorous mathematical foundation for the theory was lacking, and was posed as part of one of David Hilbert’s (1862–1943) famous list of problems in 1900 (the 5th problem). After important work by Henri Lebesgue (1875–1941) and 'Emile Borel (1871–1956), it was Andrey Kolmogorov who succeeded in 1933 in providing the axioms that we use today (see the 1950 edition of his book (Kolmogorov 1950)). This approach declares that probabilities are measures.

A measure \(\mu\) can be defined on any set \(\Omega\) with a \(\sigma\)-algebra of subsets \(\mathcal{F}\), and the defining axioms are versions of A1 and A4. The special property of a probability measure is just that \(\mu(\Omega) = 1\). Measure theory is the theory that gives mathematical foundation to the concepts of length, area, and volume. For example, on \(\mathbb{R}\) the unique measure that has \(\mu (a,b) = b-a\) for intervals \((a,b)\) is the Lebesgue measure.

George Boole (1815–1864) and John Venn (1834–1923) both wrote books concerned with probability theory (Boole 1854), (Venn 1888); both were working before the formulation of Kolmogorov’s axioms.

As mentioned in Section 1.4, it is necessary in the general theory of probability to restricting events to some \(\sigma\)-algebra. The reason for this is that in standard ZFC set theory, when \(\Omega\) is uncountable (such as \(\Omega=[0,1]\) the unit interval), it follows from an argument by Vitali (1905) that many natural probability assessments, such as the continuous uniform distribution, cannot be modelled by a probability defined on all subsets of \(\Omega\) satisfying A1–4: see for instance Chapter 1 of (Rosenthal 2007). In the case where \(\Omega\) is countable, one can always define \(\mathbb{P}\) on the whole of \(2^\Omega\). In the case where \(\Omega\) is uncountable, we usually do not explicitly mention \(\Omega\) at all (when we work with continuous random variables, for example).

The formulation of the infinite coin-tossing experiment in Section 1.6 leads to the connection between coin tossing and the Lebesgue measure, as first described by Hugo Steinhaus in a 1923 paper.

An alternative approach to probability theory is to do away with axiom A4, in which case some of these technical issues can be avoided, at the expense of certain pathologies; however, in the standard approach to modern probability, based on measure theory, A4 is a central part of the theory.